Update 2016-08: Docker integration with Windows has progressed since this article was written. Check out our RFC post for an exciting update on the direction we are taking with this feature in Octopus Deploy!

Today, the Gu announced that Microsoft is partnering with Docker to bring Docker to Windows.

Microsoft and Docker are integrating the open-source Docker Engine with the next release of Windows Server. This release of Windows Server will include new container isolation technology, and support running both .NET and other application types (Node.js, Java, C++, etc) within these containers. Developers and organizations will be able to use Docker to create distributed, container-based applications for Windows Server that leverage the Docker ecosystem of users, applications and tools.

How exciting! I’ve spent the last few hours drilling into Docker and what this announcement might mean for the future of .NET application deployments. Here are my thoughts so far.

Containers vs. virtual machines

Apart from Scott’s post I can’t find much information about the container support in Windows Server, so I’ll preface this by saying that this is all speculation, based purely on the assumption that they’ll work similarly to Linux containers.

Once upon a time, you’d have a single physical server, running IIS with a hundred websites. Now, with the rise of virtualization and cloud computing, we tend to have a single physical server, running dozens of VMs, each of which runs a single application.

Why do we do it? It’s really about isolation. Each application can run on different operating systems, have different system libraries, different patches, different Windows features (e.g., IIS installed), different versions of the .NET runtime, and so on. More importantly, if one application fails so badly that the OS crashes, or the OS needs to restart for an update, the other applications aren’t affected.

In the past, we’d start to build an application on one version of the .NET framework (say, 3.5), only to be told there’s no way anyone is putting 3.5 on the production server because there are 49 other applications on that server using 3.0 that might break, and it will take forever to test them all. Virtualization has saved us from these restrictions.

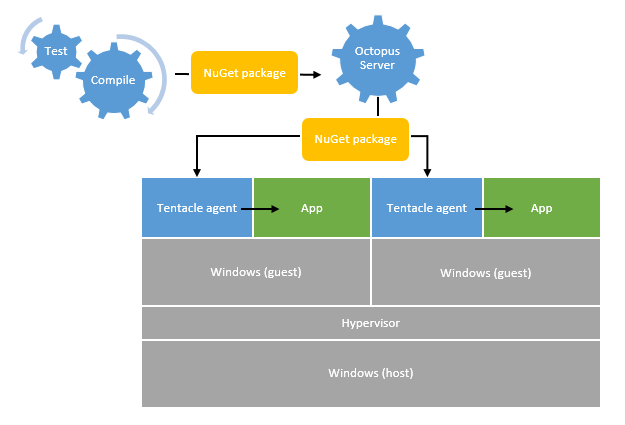

From a deployment automation perspective, a build server compiles code, and produces a package ready to be deployed. The Octopus Deploy server pushes that package to a remote agent, the Tentacle, to deploy it.

So, isolation is great. But the major downside is that we effectively have a single physical server running many copies of the same OS kernel. That is a real shame, since that OS is a server-class OS designed for multitasking. In fact, assuming you run one main application per virtual machine, your physical box is actually running more operating systems than primary applications!

Containers are similar, but different: there’s just one kernel, but each container remains relatively isolated from the others. There’s plenty of debate about just how secure containers are compared to virtual machines, so VMs might always be preferred for completely different customers sharing the same hardware. However, assuming a basic level of trust exists, containers are a great middle ground.

The What is Docker page provides a nice overview of why containers are different to virtual machines. I’ve not seen much about how the containers in Windows Server will work, but for this post I’ll assume they’ll be pretty similar.

Where Docker fits

Docker provides a layer on top of these containers that makes it easier to build images to run in containers, and to share those images. Docker images are defined using a text-based Dockerfile, which specifies:

- A base OS image to start from

- Commands to prepare/build the image

- Commands to call when the image is “run”

For a Windows Dockerfile, I imagine it will look something like:

- Start with Windows Server 2014 SP1 base image

- Install .NET 4.5.1

- Install IIS with ASP.NET enabled

- Copy the DLLs, CSS, JS etc. files for your ASP.NET web application

- Configure IIS application pools etc. and start the web site

Since it’s just a small text file, your Dockerfile can be committed to source control. From the command line, you then build an “image” (i.e., execute the Dockerfile), which will download all the binaries and create a disk image that can be executed later. You can then run instances of that image on different machines, or share it with others via Docker’s Hub.

The big advantage of Docker and using containers like this isn’t just in memory/CPU savings, but in making it more likely that the application you’re testing in your test environment will actually work in production, because it will be configured exactly the same way - it is exactly the same image. This is a really good thing: it takes the principle of building your binaries once to the extreme.

What it means for Octopus

First up, remember that Octopus is a deployment automation tool, and we’re especially geared for teams that are constantly building new versions of the same application. E.g., a team building an in-house web application on two-week sprints, deploying a new release of the application every two weeks.

With that in mind, there are a few different ways that Docker and containers might be used with Octopus.

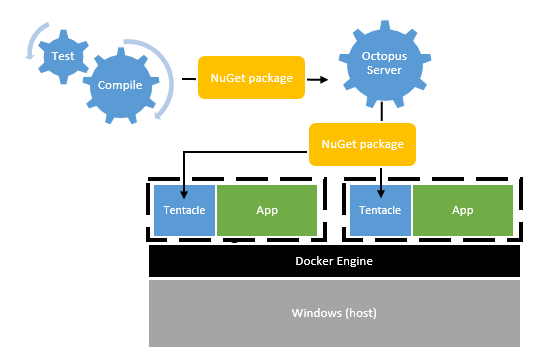

Approach 1: Docker is an infrastructure concern

This is perhaps the most basic approach. The infrastructure team would maintain Dockerfiles, and build images from them and deploy them when new servers are provisioned. This would guarantee that no matter which hosting provider they used, the servers would have a common baseline - the same system libraries, service packs, OS features enabled, and so on.

Instead of including the application as part of the image, the image would simply include our Tentacle service. The result would look similar to how Octopus works now, and in fact would require no changes to Octopus.

This has the benefit of making application deployments fast - we’re just pushing the application binaries around, not whole images. And it still means the applications are isolated from each other, almost as if they were in virtual machines, without the overhead. However, it does allow for cruft to build up in the images over time, so it might not be a very “pure” use of Docker.

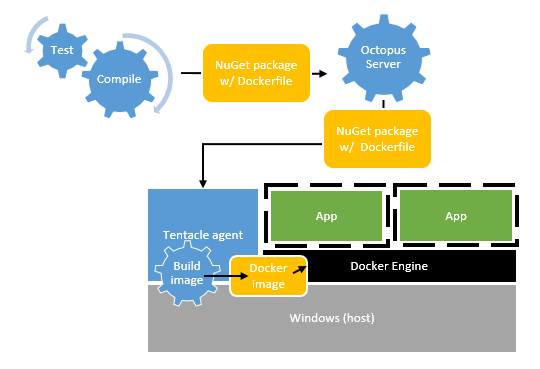

Approach 2: Build a new image per deployment

This approach is quite different. Instead of having lots of copies of Tentacle, we’d just need one on the physical server. On deployment, we’d create new images and run them in Docker.

- Build server builds the code, runs unit tests, etc. and creates a NuGet package

- Included in the package is a Dockerfile containing instructions to build the image

- During deployment, Octopus pushes that NuGet package to the remote machine

- Tentacle runs docker build to create an image

- Tentacle stops the instance if it is running, then starts the new instance using the new image
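As a rough sketch of the last two steps, here is the sequence of docker CLI calls an agent might issue after the package has been extracted. This is an illustration only, not how Tentacle works: the function name, the container naming scheme (one container named after the application) and the `./extracted` path are all assumptions. The function only builds the command lists and does not execute anything.

```python
from typing import List


def build_and_restart_commands(app: str, version: str, package_dir: str) -> List[List[str]]:
    """Return, in order, the docker CLI calls for a build-per-deployment flow.

    Hypothetical helper: 'app' doubles as the container name and the image
    repository name, and 'package_dir' is where the package (including its
    Dockerfile) was extracted.
    """
    image = f"{app}:{version}"
    return [
        # Build an image from the Dockerfile shipped inside the package.
        ["docker", "build", "-t", image, package_dir],
        # Stop and remove the previous container so the name can be reused.
        ["docker", "stop", app],
        ["docker", "rm", app],
        # Start a detached container from the freshly built image.
        ["docker", "run", "-d", "--name", app, image],
    ]


for command in build_and_restart_commands("webapp", "1.0.3", "./extracted"):
    print(" ".join(command))
```

On a first deployment there is no previous container, so a real agent would need to tolerate the stop and remove calls failing.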

The downside of this is that since we’re building a different image each time, we’re losing the consistency aspect of Docker; each web server might end up with a slightly different configuration depending on what the latest version of various libraries was at the time.

On the upside, we do gain some flexibility. Each application might have different web.config settings etc., and Octopus could change these values prior to the files being put in the image.

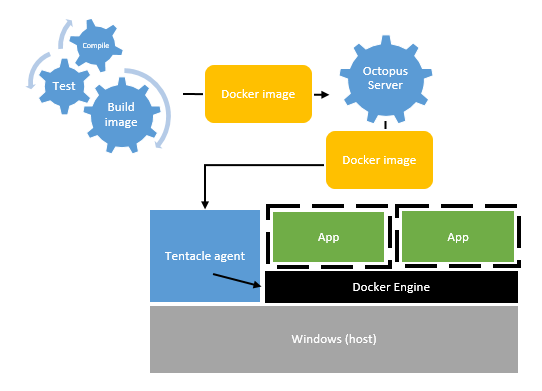

Approach 3: Image per release

A better approach might be to build the Docker image earlier in the process, like at the end of the build, or when a Release is first created in Octopus.

- Build server builds the code, runs unit tests, etc.

- Build server (or maybe Octopus) runs docker build and creates an image

- The image is pushed, either to Octopus or to Docker Hub

- Octopus deploys that image to the remote machine

- Tentacle stops the instance if it is running, then starts the new instance using the new image
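The split between build time and deploy time in the steps above could be sketched like this. Again, this is only an illustration under assumptions: the function names are made up, `registry.example.com` is a placeholder registry, and nothing here reflects an actual Octopus or Tentacle implementation. The functions only return the docker CLI calls and do not run them.

```python
from typing import List


def image_ref(registry: str, app: str, version: str) -> str:
    """Compose a full image reference, e.g. registry.example.com/webapp:1.0.3."""
    return f"{registry}/{app}:{version}"


def build_server_commands(image: str, source_dir: str) -> List[List[str]]:
    """Calls made once per release, on the build server."""
    return [
        ["docker", "build", "-t", image, source_dir],
        ["docker", "push", image],
    ]


def deploy_commands(image: str, container: str) -> List[List[str]]:
    """Calls made on each target machine, for every environment."""
    return [
        # Fetch the image that was built once; nothing is rebuilt here.
        ["docker", "pull", image],
        ["docker", "stop", container],
        ["docker", "rm", container],
        ["docker", "run", "-d", "--name", container, image],
    ]


image = image_ref("registry.example.com", "webapp", "1.0.3")
for command in build_server_commands(image, ".") + deploy_commands(image, "webapp"):
    print(" ".join(command))
```

The point of the split is that `deploy_commands` receives the same image reference in UAT and in production, so the only thing that varies between environments is where it runs.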

This approach seems to align best with Docker, and provides much more consistency between environments - production will be the same as UAT, because it’s the exact same image running in production as was running in UAT.

There’s one catch: how will we handle configuration changes? For example, how will we deal with different connection strings or API keys in UAT vs. production? Keep in mind that these values tend to change at a different rate than the application binaries or other files that would be snap-shotted in the image.

In the Docker world, these settings seem to be handled by passing environment variables to docker run when the instance of the image is started. And while Node or Java developers might be conditioned to use environment variables, .NET developers rarely use them for configuration - we expect to get settings from web.config or app.config.
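To make that concrete, here is a small sketch of how per-environment settings could be turned into `-e` arguments for docker run. The helper name, the setting names and the values are invented for illustration; the only thing taken from Docker itself is the `-e KEY=VALUE` form.

```python
from typing import Dict, List


def run_command(image: str, container: str, settings: Dict[str, str]) -> List[str]:
    """Build a 'docker run' call that passes settings as environment variables."""
    command = ["docker", "run", "-d", "--name", container]
    # Sort the keys so the generated command is stable between runs.
    for key in sorted(settings):
        command += ["-e", f"{key}={settings[key]}"]
    command.append(image)
    return command


# Hypothetical settings: same image, different values per environment.
uat = {"CONNECTION_STRING": "Server=uat-db", "API_KEY": "uat-key"}
production = {"CONNECTION_STRING": "Server=prod-db", "API_KEY": "prod-key"}

print(" ".join(run_command("webapp:1.0.3", "webapp", uat)))
print(" ".join(run_command("webapp:1.0.3", "webapp", production)))
```

A .NET application would still need some code or startup script inside the image to read these variables and apply them, since it would not pick them up from web.config automatically.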

There’s some other complexity too; at the moment, when deploying a web application, Octopus deploys the new version side-by-side with the old one, configures it, and then switches the IIS bindings, reducing the overall downtime on the machine. With Docker, we’d need to stop the old instance, start the new one, then configure it. Unless we build a new image with different configuration each time (approach #2), downtime is going to be tricky to manage.

Would Octopus still add value?

Yes, of course!

Docker makes it extremely easy to package an application and all the dependencies needed to run it, and the containers provided by the OS make for great isolation. Octopus isn’t about the mechanics of a single application/machine deployment (Tentacle helps with that, but that’s not the core of Octopus). Octopus is about the whole orchestration.

Where Octopus provides value is for deployments that involve more than a single machine, or more than a single application. For example, prior to deploying your new web application image to Docker, you might want to back up the database. Then deploy it to just one machine, and pause for manual verification, before moving on to the rest of the web servers. Finally, deploy another Docker image for a different application. The order of those steps is important, and some run in parallel and some are blocking. Octopus will provide those high-level orchestration abilities, no matter whether you’re deploying NuGet packages, Azure cloud packages, or Docker images.
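The idea of ordered steps, some blocking and some parallel, can be sketched in a few lines. This is a toy model and not how Octopus is implemented: the stage structure, the `run_stages` function and the machine names are all assumptions, and each step just returns its own name instead of doing real work.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List

Step = Callable[[], str]


def step(name: str) -> Step:
    """Create a placeholder step that reports its name when run."""
    return lambda: name


def run_stages(stages: List[List[Step]]) -> List[str]:
    """Run stages one after another; steps inside a stage run in parallel."""
    log: List[str] = []
    for stage in stages:
        with ThreadPoolExecutor(max_workers=len(stage)) as pool:
            # map() yields results in submission order, and the next stage
            # does not start until every step in this one has finished.
            log.extend(pool.map(lambda s: s(), stage))
    return log


plan = [
    [step("back up database")],
    [step("deploy web image to web01")],
    [step("manual verification")],  # blocks everything after it
    [step("deploy web image to web02"), step("deploy web image to web03")],
    [step("deploy second application image")],
]

for entry in run_stages(plan):
    print(entry)
```

Whatever the packaging format, it is this outer plan that stays the same; only the body of each step changes.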

Future of Azure cloud service projects?

Speaking of Azure cloud packages, will they even be relevant anymore?

There’s some similarity here. With Azure, there are web sites (just push some files, and it’s hosted for you on existing VMs), or you can provision entire VMs and manage them yourself. And then in the middle, there are cloud services - web and worker roles - that involve provisioning a fresh VM every deployment, and rely on the application and OS settings being packaged together. To be honest, in a world of Docker on Windows, it’s hard to see there being any use for these kinds of packages.

Conclusion

This is a very exciting change for Windows, and it means that some of the other changes we’re seeing in Windows start to fit together. Docker leans heavily on other tools in the Linux ecosystem, like package managers, to configure the actual images. In the Windows world, that didn’t exist until very recently with OneGet. PowerShell DSC will also be important, although I do feel that the syntax is still too complicated for it to gain real adoption.

How will Octopus fit with Docker? Time will tell, but as you can see we have a few different approaches we could take, with #3 being the most likely (#1 being supported already). As the next Windows Server with Docker gets closer to shipping we’ll keep a close eye on it.