The Model Context Protocol (MCP) is an open-source standard initially developed by Anthropic in late 2024 to connect AI assistants to external tools and data sources. Despite being a relatively new standard, MCP has been adopted by major technology and AI companies, including OpenAI, Google, Microsoft, and AWS. Octopus also provides an MCP server that exposes an Octopus instance to MCP clients.

In this post, we’ll explore what MCP is, how it works, and why it’s essential for the future of AI.

What problem does MCP solve?

Most of us are familiar with tools like ChatGPT and code generation assistants like GitHub Copilot. These tools are built on Large Language Models (LLMs) that encapsulate a vast amount of knowledge and are used to answer questions, generate text, and write code. However, LLMs only know the state of the world up to their training cut-off date. Anita Lewis puts it like this in The MCP Revolution: AWS Team’s Journey from Internal Tools to Open Source AI Infrastructure:

We have these incredibly powerful AI models, but they’ve essentially been living in these isolated bubbles. The knowledge cut-offs can range from anywhere between 6 months to two plus years.

In addition, LLMs cannot natively access external data sources or tools. This means LLMs don’t have knowledge of your internal systems, databases, or APIs. This quote from AWS re

A really good analogy that I found online about this is that AI before MCP is like computers before the internet, right? They were isolated but really powerful. Now with MCPs, the potential for AI is really limitless, and MCP is a key enabler for what we’ve come to know as agentic AI platforms.

GitHub Rubber Duck Thursday - let’s hack offers another way to think about MCP:

I would liken [MCP] to Chrome extensions. So you know how you can install Chrome extensions on your browser to do different things with your browser? So you can install MCP servers into your LLMs to do different things on your behalf like we use Chrome extensions.

In short, MCP is a common standard that platforms implement to grant LLMs access to external data sources and perform actions on behalf of users.

How does MCP compare to REST, GraphQL, and gRPC?

We’ve had common web-based protocols and standards for years. Representational State Transfer (REST) has been a popular architectural style for designing networked applications for more than two decades. GraphQL is an open-source query language for APIs that provides a structured approach to fetching data across multiple data sources. gRPC (a recursive acronym for gRPC Remote Procedure Calls) is a high-performance, open-source framework developed by Google for making remote procedure calls between distributed systems.

All these protocols and standards have been successfully used at scale for many years. So why do we need MCP?

MCP is explicitly designed for AI models and their unique requirements. While the functionality provided by MCP overlaps with REST, GraphQL, and gRPC, MCP introduces several features to enable LLMs to interact with external systems more naturally and efficiently.

MCP servers can provide three main types of capabilities:

- Resources: File-like data that can be read by clients (like API responses or file contents)

- Tools: Functions that can be called by the LLM (with user approval)

- Prompts: Pre-written templates that help users accomplish specific tasks

Resources are much like HTTP GET operations. A resource returns data to be consumed by an LLM without side effects.

Tools are more like HTTP POST operations. A tool performs an action on behalf of the LLM and may have side effects.
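To make the GET/POST analogy concrete, here is a minimal sketch of how a server might dispatch the two kinds of request. It uses the `resources/read` and `tools/call` method names and result shapes from the MCP specification, but the in-memory project list, the `project://list` URI, and the `create_project` tool are hypothetical. A real server would typically be built with an MCP SDK and receive these requests over a transport such as stdio rather than through a direct function call.

```python
import json

# Hypothetical in-memory data standing in for an external system such as Octopus.
PROJECTS = ["Web App", "Billing API"]


def read_resource(uri: str) -> dict:
    """Like HTTP GET: return data for a URI without changing any state."""
    if uri != "project://list":  # hypothetical resource URI
        raise ValueError(f"unknown resource: {uri}")
    return {
        "contents": [
            {"uri": uri, "mimeType": "application/json", "text": json.dumps(PROJECTS)}
        ]
    }


def call_tool(name: str, arguments: dict) -> dict:
    """Like HTTP POST: perform an action that may have side effects."""
    if name != "create_project":  # hypothetical tool name
        raise ValueError(f"unknown tool: {name}")
    PROJECTS.append(arguments["name"])  # the side effect
    return {"content": [{"type": "text", "text": f"Created project {arguments['name']}"}]}


def handle(request: dict) -> dict:
    """Dispatch a JSON-RPC 2.0 request to the matching handler."""
    method = request["method"]
    params = request.get("params", {})
    if method == "resources/read":
        result = read_resource(params["uri"])
    elif method == "tools/call":
        result = call_tool(params["name"], params.get("arguments", {}))
    else:
        # -32601 is the standard JSON-RPC 2.0 "Method not found" error code.
        return {
            "jsonrpc": "2.0",
            "id": request["id"],
            "error": {"code": -32601, "message": f"Method not found: {method}"},
        }
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}
```

Reading `project://list` any number of times leaves `PROJECTS` unchanged, while each `create_project` call appends to it. That difference is exactly why clients ask for user approval before running tools but can read resources freely.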

Prompts are a unique feature of MCP and demonstrate how MCP is designed specifically for LLMs. While you can execute simple operations with concise prompts, more complex operations usually require verbose and explicit prompts. The prompts may also need to use specific language to refer to the resources and tools provided by an MCP server. By exposing prompts as a first-class concept, MCP servers can guide end users as they interact with LLMs.
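A prompt is essentially a named, parameterized template. The sketch below shows roughly what a server might return for a `prompts/get` request: a description plus a list of chat messages with the user’s arguments filled in. The `summarize-deployments` prompt and the `list_deployments` tool it mentions are hypothetical, chosen only to show how a prompt can spell out the explicit wording and tool names an LLM needs.

```python
# Hypothetical prompt catalog. Each template uses explicit wording and names a
# (hypothetical) tool, so users don't have to know those details themselves.
PROMPTS = {
    "summarize-deployments": {
        "description": "Summarize recent deployments for a project",
        "template": (
            "Use the list_deployments tool to fetch the last {count} deployments "
            "of the project named \"{project}\". Report which ones failed and why."
        ),
        "arguments": ["project", "count"],
    }
}


def get_prompt(name: str, arguments: dict) -> dict:
    """Build a prompts/get-style result: a description plus chat messages."""
    prompt = PROMPTS[name]
    missing = [arg for arg in prompt["arguments"] if arg not in arguments]
    if missing:
        raise ValueError(f"missing prompt arguments: {missing}")
    text = prompt["template"].format(**arguments)
    return {
        "description": prompt["description"],
        "messages": [{"role": "user", "content": {"type": "text", "text": text}}],
    }
```

The user only supplies a project name and a count; the server supplies the verbose, precise instructions.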

Another benefit of MCP is that it provides a consistent standard for LLMs to interact with multiple external systems.

REST APIs can vary widely in their design and implementation, with standards like JSON:API and HAL offering different approaches to designing them. GraphQL is more consistent, but exposing multiple data sources through a single GraphQL endpoint is a non-trivial task that usually requires developing a custom server. gRPC abstracts away the networking layer and generates client and server classes for multiple languages, but it relies on generated, strongly typed client code, an approach that isn’t tailored to general-purpose clients like LLMs.

MCP allows a general-purpose client to execute arbitrary operations across multiple servers defined in a simple JSON file. This is important because much of the value of MCP-based workflows comes from the ability to combine many data sources and tools to accomplish complex tasks. The Qodo report 2025 State of AI Code Quality notes that:

Agentic chat [uses] an average of 2.5 MCP tools per user message.

Explore Model Context Protocol (MCP) on AWS! | AWS Show and Tell - Generative AI | S1 E9 goes further, saying:

There’s been people using 40 - 50 MCP servers in one go which is pretty wild.
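Combining that many servers is practical because each one is just an entry in the client’s JSON configuration. The sketch below assumes the `servers` layout used by the Copilot `mcp.json` file later in this post, plus a second, hypothetical `example-file-server` entry, and shows how a client could turn such a file into one command line per server.

```python
import json

# Example mcp.json content. The "example-files" entry is hypothetical.
CONFIG = """
{
  "servers": {
    "octopusdeploy": {
      "command": "npx",
      "args": ["-y", "@octopusdeploy/mcp-server", "--api-key", "API-ABCDEFGHIJKLMNOP",
               "--server-url", "https://yourinstance.octopus.app"]
    },
    "example-files": {
      "command": "example-file-server",
      "args": ["--root", "/tmp/docs"]
    }
  }
}
"""


def launch_commands(config_text: str) -> dict[str, list[str]]:
    """Map each configured server name to the command line used to start it."""
    config = json.loads(config_text)
    return {
        name: [server["command"], *server.get("args", [])]
        for name, server in config["servers"].items()
    }
```

Adding another system is a matter of adding another entry. A client using the stdio transport would then start each command as a child process (for example with `subprocess.Popen`) and exchange JSON-RPC messages with it over stdin and stdout.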

The biggest challenge with existing standards is that the platforms you want to work with almost certainly implement a mix of REST, GraphQL, and gRPC. This creates a massive headache for consumers who are forced to implement glue logic to consume each system from an AI assistant. MCP represents an opportunity for platforms to standardize on a single protocol for AI assistants, making it easier for users to integrate with multiple systems.

How do you use MCP?

To make use of MCP, you need two things:

- An MCP client. The client will typically expose a chat-based interface to the user and use an LLM to process user input and generate responses.

- An MCP server. The server exposes resources, tools, and prompts to the client.
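Under the hood, the client and server exchange JSON-RPC 2.0 messages. As a rough sketch, assuming the stdio transport (where each message is a single line of JSON), a client sends `initialize`, confirms with the `notifications/initialized` notification, and can then discover and call tools with `tools/list` and `tools/call`. The client name, the protocol version string, and the `list_projects` tool are placeholders, not the Octopus server’s actual values.

```python
import itertools
import json
from typing import Optional


class McpMessages:
    """Build the JSON-RPC 2.0 messages an MCP client sends, one line each."""

    def __init__(self) -> None:
        self._ids = itertools.count(1)  # requests need unique ids

    def request(self, method: str, params: Optional[dict] = None) -> str:
        msg = {"jsonrpc": "2.0", "id": next(self._ids), "method": method}
        if params is not None:
            msg["params"] = params
        return json.dumps(msg)  # no indent, so the message stays on one line

    def notification(self, method: str) -> str:
        # Notifications carry no id and expect no response.
        return json.dumps({"jsonrpc": "2.0", "method": method})


client = McpMessages()
handshake = [
    client.request("initialize", {
        "protocolVersion": "2025-06-18",  # placeholder spec revision
        "capabilities": {},
        "clientInfo": {"name": "example-client", "version": "0.1.0"},
    }),
    client.notification("notifications/initialized"),
    client.request("tools/list"),
    # "list_projects" and its arguments are hypothetical placeholders.
    client.request("tools/call", {"name": "list_projects", "arguments": {"space": "AI"}}),
]
```

This only builds the outgoing messages. A real client waits for the server’s response to each request, matches it by `id`, and uses the `tools/list` result to tell the LLM which tools exist.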

To demonstrate how MCP works, we’ll use Octopus as the MCP server and Copilot Chat in IntelliJ as the MCP client.

In IntelliJ (or any other JetBrains IDE), install the GitHub Copilot plugin, open the Copilot Chat window, and select Agent in the chat toolbar.

Click the Configure tools icon and then click Add more tools. This opens the mcp.json file.

Add the following server definition to the mcp.json file, replacing the API key and server URL with your own values:

{

"servers": {

"octopusdeploy": {

"command": "npx",

"args": [

"-y",

"@octopusdeploy/mcp-server",

"--api-key",

"API-ABCDEFGHIJKLMNOP",

"--server-url",

"https://yourinstance.octopus.app"]

}

}

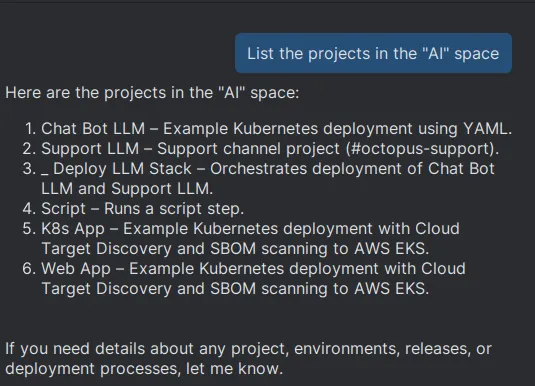

}You can now enter a prompt like List the projects in the "AI" space. Copilot will use the Octopus MCP server to retrieve the list of spaces from your Octopus instance:

And that’s it! With a few minutes and a few lines of JSON, you can start chatting with your Octopus instance.

Conclusion

MCP empowers AI agents to interact with multiple external systems through a natural language interface. Systems that previously required complex API integrations implemented with custom code are now accessible to anyone with knowledge of prompt engineering.

While MCP is a young standard, it has already been widely adopted by major technology companies and is poised to become the de facto standard for AI agent interoperability. Or, as Claude 4 + Claude Code + Strands Agents in Action | AWS Show & Tell puts it:

What really excited [me] about MCP is that all these enterprises have so many data silos, you know, structured, unstructured, semi-structured, just everywhere, right? And I feel like MCP is finally the way to break those data silos down in an effort to make it accessible to AI.

Get started with the Octopus MCP server today with the instructions in the Octopus documentation.

Happy deployments!