Our Platform Engineering Pulse report is coming soon, containing insights, strategies, and real-world data on how organizations adopt and succeed with Platform Engineering. We’ll also launch a survey to deepen our understanding of the patterns, challenges, and future direction of successful platform building.

One of the areas we explored in the Platform Engineering Pulse report was how organizations measure their internal developer platforms, with results that varied from technical measures to not collecting any metrics at all.

This post looks at which metrics were popular and how you can use them to structure your measurement approach to avoid the zero-metric illusion.

Measurement is essential

At the start of a Platform Engineering initiative, the immediate problems take precedence, and measurement becomes an afterthought. This leaves platform teams without a pre-platform baseline, which makes it challenging to demonstrate the platform’s impact.

The lack of measurement also increases the risk of platforms adding features that don’t align with the organization’s core motivations. For instance, a platform team might focus on standardization, only to discover that the primary driver for investing in Platform Engineering was to improve developer experience.

Platforms often fail not because they are inherently bad, but because they target the wrong personas and attempt to solve the wrong problems.

Even successful platforms struggle with ongoing justification and optimization when they lack clear goals, a robust measurement system that reflects those goals, and baseline data. If the platform team can’t show the platform’s value, leadership may challenge continued investment.

Therefore, it is crucial to measure platform performance against its established goals. But how are organizations currently approaching this?

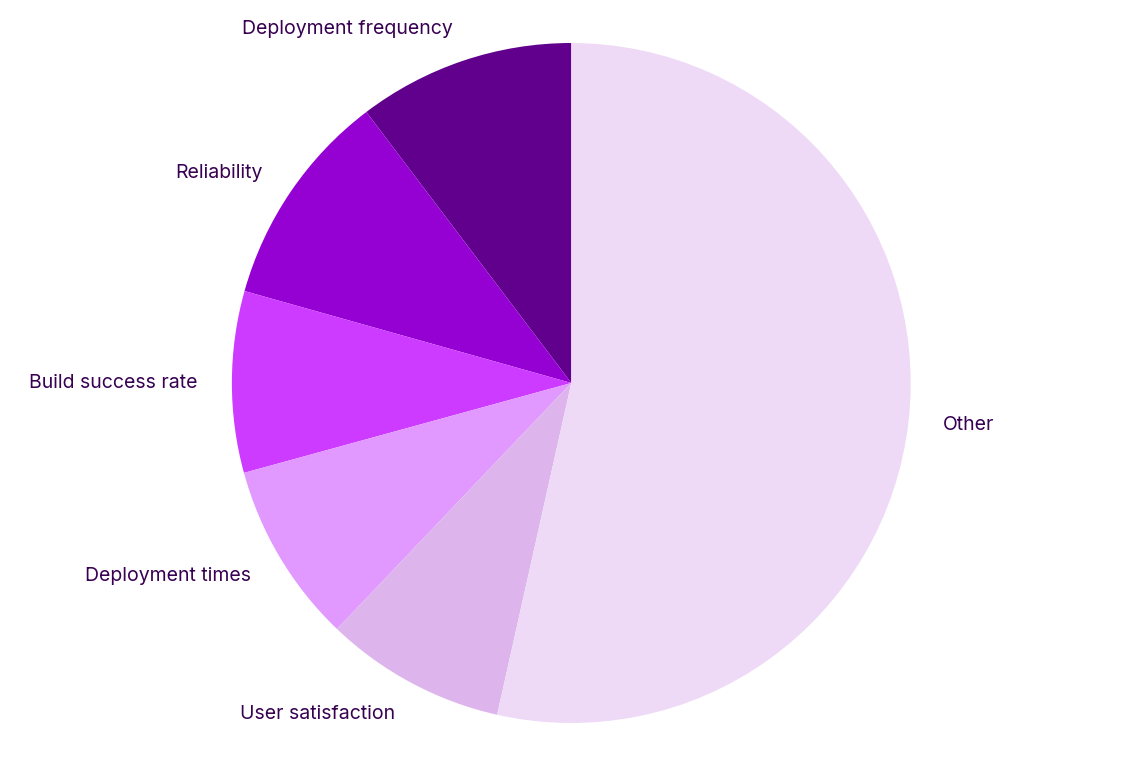

What organizations measure

The survey found organizations focused on 3 key areas for measurement: software delivery, operational performance, and user experience. The variety of metrics likely reflects the variety of contexts that platforms can assist, though it is also evidence that metric systems are skewed toward the elusive productivity problem rather than true developer experience and engagement.

Software delivery metrics

The software delivery metrics assess the speed, quality, and throughput of development teams using the platform. They often align with DORA measurements, highlighting how platforms are seen as a route to shipping software more often and at higher quality. The metrics encompass throughput (e.g., deployment frequency, build time, features delivered) and stability (e.g., change failure rate, build success rate).

- Deployment frequency

- Deployment times

- Change failure rate

- Recovery time

- Build success rate

- Build time

- Features delivered

While valuable for understanding delivery performance, these metrics primarily reflect downstream outcomes rather than the platform’s direct contribution to developer productivity. They are most effective when measured before and after platform adoption to demonstrate improvement.
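As a rough illustration of a before-and-after comparison, here is a minimal Python sketch that computes deployment frequency and change failure rate for two periods. The `Deployment` record, the function names, and the sample data are all hypothetical; in practice you would pull these records from your own deployment tooling.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Deployment:
    """One production deployment (hypothetical record shape)."""
    day: date
    failed: bool  # True if the change needed a rollback, hotfix, or similar fix


def deployment_frequency(deployments, period_days):
    """Average number of deployments per week across the period."""
    if period_days <= 0:
        raise ValueError("period_days must be positive")
    return len(deployments) * 7 / period_days


def change_failure_rate(deployments):
    """Share of deployments that failed, from 0.0 to 1.0."""
    if not deployments:
        return 0.0
    return sum(1 for d in deployments if d.failed) / len(deployments)


# Made-up sample data: 28 days before and 28 days after platform adoption.
before = [
    Deployment(date(2024, 1, 1), failed=False),
    Deployment(date(2024, 1, 8), failed=True),
    Deployment(date(2024, 1, 15), failed=False),
    Deployment(date(2024, 1, 22), failed=True),
]
after = [Deployment(date(2024, 6, d), failed=(d == 3)) for d in range(1, 29, 2)]

for label, sample in (("before", before), ("after", after)):
    print(
        label,
        f"{deployment_frequency(sample, 28):.1f} deploys/week,",
        f"{change_failure_rate(sample):.0%} change failure rate",
    )
```

The comparison only works if both periods are measured the same way, which is another reason to capture the baseline before the platform arrives.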

Operational performance metrics

Metrics for operational performance track the cost, performance, and efficiency of applications that use the platform. They combine traditional infrastructure monitoring (e.g., reliability, error rates, system performance) with resource optimization (e.g., usage efficiency, cost management). Project count is a basic adoption indicator, but it lacks the context of the addressable market size.

- Reliability

- Error rates

- System performance

- Resource usage

- Infrastructure cost

- Project count

These metrics are crucial for demonstrating that the platform is operationally sound and cost-effective, but they don’t necessarily indicate developer value or ease of use.

User experience metrics

These metrics directly measure how developers and teams perceive and interact with the platform, treating internal developers as customers. They focus on satisfaction and onboarding journeys. Net promoter score (NPS) offers benchmarkable sentiment data, while user satisfaction provides broader feedback. Onboarding time is critical as it represents the first impression and adoption barrier.

- User satisfaction

- Net promoter score (NPS)

- Onboarding time

These metrics are vital for platforms run as products with optional adoption, indicating whether the platform is compelling enough for developers to choose and continue using voluntarily.
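If you haven’t calculated NPS before, the standard approach is to ask how likely someone is to recommend the platform on a 0 to 10 scale, then subtract the percentage of detractors (0 to 6) from the percentage of promoters (9 or 10). The sketch below shows that arithmetic in Python; the function name and the survey responses are made up for illustration.

```python
def net_promoter_score(scores):
    """Return NPS (-100 to 100) from a list of 0-10 survey responses."""
    if not scores:
        raise ValueError("at least one response is required")
    if any(s < 0 or s > 10 for s in scores):
        raise ValueError("responses must be between 0 and 10")

    promoters = sum(1 for s in scores if s >= 9)   # scored 9 or 10
    detractors = sum(1 for s in scores if s <= 6)  # scored 0 to 6
    # Passives (7 or 8) count toward the total but not toward either group.
    return 100 * (promoters - detractors) / len(scores)


# Hypothetical responses from ten developers using the platform.
responses = [10, 9, 8, 7, 6, 3, 10, 9, 5, 10]
print(f"NPS: {net_promoter_score(responses):.0f}")
```

Small teams produce noisy scores, so it helps to report the number of responses alongside the NPS itself.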

Most organizations prioritize technical and delivery metrics, with fewer focusing on user experience or business outcomes. This suggests a risk that platform teams are measuring what’s easy to collect rather than what truly demonstrates business value. The heavy emphasis on technical metrics indicates that many organizations still measure platforms like infrastructure rather than products serving internal customers.

How measurement improves platform performance

For metrics to improve platform performance, you need to measure multiple dimensions, not just one. Internal developer platforms offer many benefits, so the measurement system should cover all platform goals.

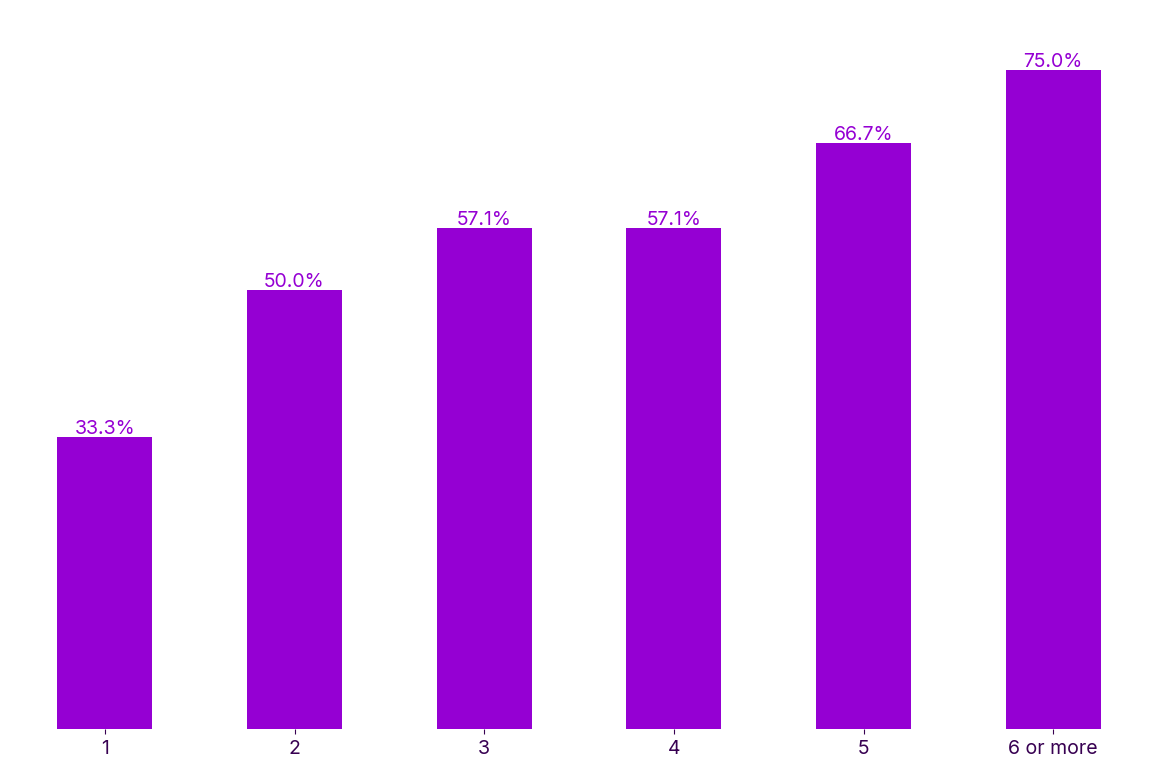

We found organizations that measure more dimensions were more likely to be successful. A single metric gives you a one-in-three chance of creating a successful platform, while 2 metrics make it 50/50. Organizations measuring 6 or more metrics were most likely to be successful.

Platform sponsors may expect you to deliver technical reliability, boost developer productivity, offer a positive user experience, manage costs, encourage adoption, and align with business objectives. Relying on just a handful of metrics cannot adequately capture the interplay of all these dimensions.

Breaking the success illusion

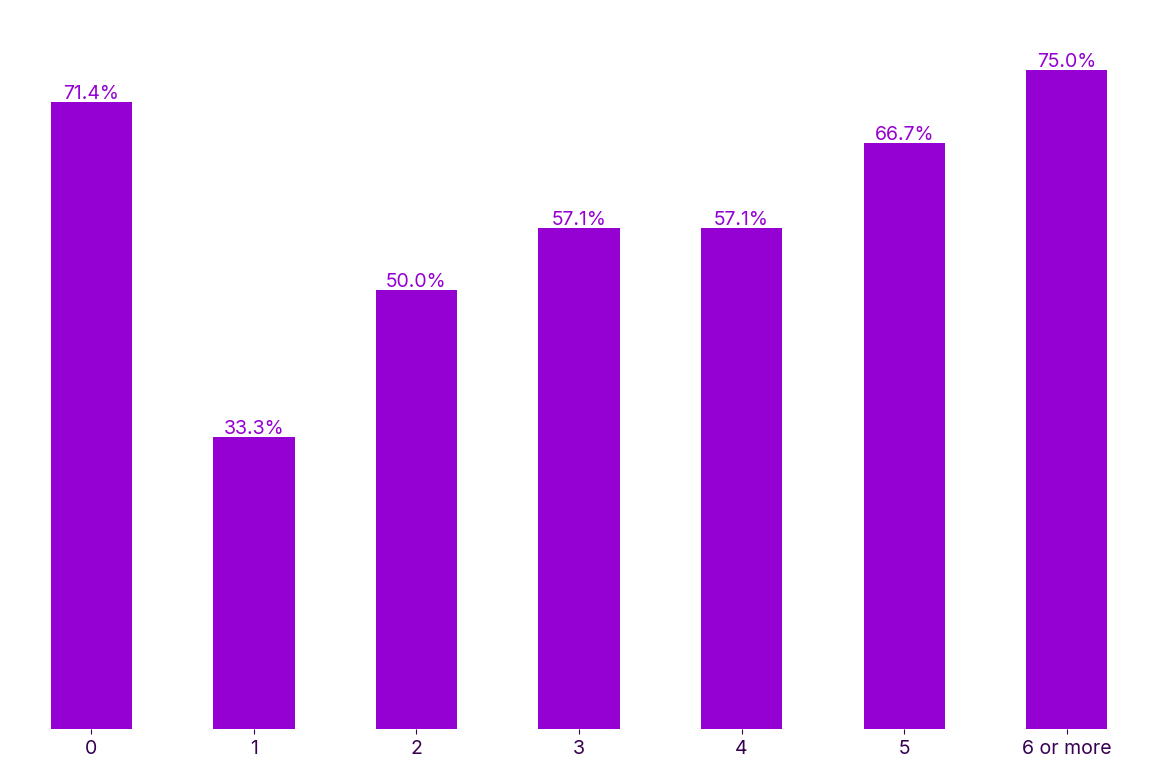

The data shows a zero-metric high-success effect. This occurs when organizations that don’t collect any concrete measures of the Platform Engineering effort report high success rates. This phenomenon comes from two very different situations masquerading as the same outcome.

The first situation is a platform that addresses a critical and evident problem, where success is undeniable and formal measurement may be unnecessary. The second, more likely explanation is an illusion of success caused by the lack of measurement.

Without concrete metrics, platform teams can focus on outputs (e.g., features built, no outages) rather than actual outcomes (e.g., increased developer productivity, achievement of business goals). Stakeholders may mistake activity for impact, especially if the team is busy and there are no apparent failures.

This also helps explain the dramatic increase in success rates observed when moving from one metric to three or more. Teams relying on a single metric might choose one that doesn’t capture holistic success, leading to a false sense of achievement. However, when multiple dimensions are measured, maintaining illusions becomes difficult. For instance, you can’t claim unqualified success if deployment frequency is high but Net Promoter Score (NPS) is low.

The data also suggests a dangerous middle ground where minimal measurement can create more problems than no measurement. It provides false confidence without the comprehensive feedback necessary for genuine improvement.

Using MONK metrics

MONK metrics offer a balanced approach to measuring Platform Engineering success, combining external validation and internal alignment. This framework is more adaptable than purely technical metrics, yet still provides a standardized basis for comparison and improvement.

The MONK metrics are:

- Market share

- Onboarding times

- Net Promoter Score (NPS)

- Key customer metrics

The first three, market share, onboarding times, and NPS, form a benchmarkable trio. These metrics allow for comparison against industry standards and are broadly applicable to Platform Engineering initiatives, serving as a starting point to understand high performance in other organizations. For example, if your onboarding times are slower than those of your industry peers, you can investigate their methods and implement similar improvements.
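Market share and onboarding time need very little tooling to calculate. As a minimal sketch, assuming you treat market share as the fraction of addressable teams that have adopted the platform and summarize onboarding with a median, the Python below shows one way to do it. The function names and the numbers are hypothetical.

```python
from statistics import median


def market_share(adopting_teams, addressable_teams):
    """Fraction of teams that could use the platform and actually do."""
    if addressable_teams <= 0:
        raise ValueError("addressable_teams must be positive")
    if not 0 <= adopting_teams <= addressable_teams:
        raise ValueError("adopting_teams must be between 0 and addressable_teams")
    return adopting_teams / addressable_teams


def median_onboarding_days(onboarding_days):
    """Median days from a team's first request to its first deployment."""
    if not onboarding_days:
        raise ValueError("at least one onboarding duration is required")
    return median(onboarding_days)


# Made-up figures: 12 of 48 eligible teams use the platform.
print(f"Market share: {market_share(12, 48):.0%}")
print(f"Median onboarding: {median_onboarding_days([1, 2, 2, 5, 10])} days")
```

A median is used here because one slow onboarding can distort an average. How you define the start and end of onboarding is a judgment call, so keep the definition consistent over time.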

Including “key customer metrics” is crucial for keeping the measurement framework in touch with business realities. Organizations often kick off Platform Engineering to solve specific organizational problems, then measure success using generic technical metrics that don’t reflect the purpose of the original investment. Instead, translate the motivations behind adopting Platform Engineering into key customer metrics so you can track progress towards the platform’s objectives.

MONK metrics address the common issue of platform teams optimizing for metrics that appear favorable but don’t contribute to actual business value. For instance, if you introduce Platform Engineering to reduce time-to-market, infrastructure uptime and build failure counts won’t show whether delivery flow has improved.

Lack of measurement makes your platform vulnerable

Without measurement, platform teams end up in a vulnerable position. They can’t demonstrate their value when budget discussions come up, they can’t identify what’s working versus what needs improvement, and they can’t make compelling cases for continued investment or expansion.

The absence of data also hinders course correction. Platform Engineering involves complex trade-offs between developer experience, operational efficiency, security, and cost. Without clear metrics, teams may prioritize visible or politically expedient solutions over those that deliver actual value.

MONK metrics offer a practical solution. They are accessible, enabling organizations to begin measuring with minimal tooling or data infrastructure. You can gather basic market share data through surveys, track onboarding times simply, and get NPS using lightweight feedback tools.

Their benchmarkable nature also helps address the common “what’s good enough?” dilemma. Instead of abstractly debating whether a two-day onboarding time is acceptable, teams can compare their performance to similar organizations.

Happy deployments!