Artificial intelligence, and GenAI in particular, is transforming our technology landscape.

For Continuous Delivery, AI allows us to solve previously unsolvable problems: parsing complex log files to diagnose the root cause of failures and suggest intelligent remediation; exploring your software landscape in natural language to simplify auditing, compliance, and standardization; and running agentic workflows that act as intelligent glue between your essential software services.

At Octopus, we are bringing these capabilities to the best Continuous Delivery tool on the market, lowering risk, improving efficiency, and accelerating your software delivery.

Our most recent AI-powered capability is the Octopus MCP Server.

What is MCP?

Model Context Protocol (MCP) allows the AI assistants you use in your day-to-day work, such as VS Code, Claude, or ChatGPT, to connect to the systems and services you own in a standardized way. Those assistants can then pull information from your systems and services to answer questions and perform tasks.
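Under the hood, MCP messages are JSON-RPC 2.0, which is what makes the connection standardized: every assistant asks every server to run a tool in the same shape. The snippet below is a minimal sketch of a `tools/call` request. The tool name `list_deployments` and its `environment` argument are hypothetical, chosen only for illustration; the Octopus MCP Server's actual tools are listed in its documentation.

```python
import json


def make_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Build an MCP `tools/call` request as a JSON-RPC 2.0 message string."""
    message = {
        "jsonrpc": "2.0",        # MCP messages follow JSON-RPC 2.0
        "id": request_id,        # lets the client match the server's response
        "method": "tools/call",  # the MCP method for invoking a server tool
        "params": {
            "name": tool_name,       # which tool the server should run
            "arguments": arguments,  # tool-specific input
        },
    }
    return json.dumps(message)


# Hypothetical tool name and arguments, for illustration only.
request = make_tool_call(1, "list_deployments", {"environment": "Production"})
print(request)
```

The assistant sends a message like this over whatever transport connects it to the server, and the server replies with a JSON-RPC response carrying the same `id`.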

The Octopus MCP Server

The Octopus MCP Server gives your AI assistant powerful tools to explore deployments, inspect configuration, and diagnose problems within your Octopus instance, transforming it into your ultimate DevOps wingmate. For a list of supported use cases and sample prompts, see our documentation.

The MCP server architecture ensures that your deployment data remains secure while enabling powerful AI-assisted workflows. All interactions are logged and auditable, maintaining the compliance and governance standards your organization requires.

The initial release of the Octopus MCP Server is a local MCP server: it runs on your local machine and communicates with your Octopus instance over HTTPS. If you are interested in a remote MCP server, embedded within your Octopus instance itself, please register your interest on our roadmap.

Breaking down DevOps silos

One of the strengths of MCP is that it allows your AI assistant to perform tasks across your DevOps ecosystem. It can work alongside other MCP servers to accomplish more complex orchestrations across Octopus and your other essential DevOps services.

With Continuous Delivery, one challenge is understanding the continual flow of changes to production and monitoring those changes to make sure they are healthy. Answering questions in this space typically means working across several systems. Let’s explore how MCP supercharges our ability to do all of this in one place: our AI client of choice.

What has just shipped, and is it healthy?

This example uses Claude Desktop, with Sonnet 4 as the model.

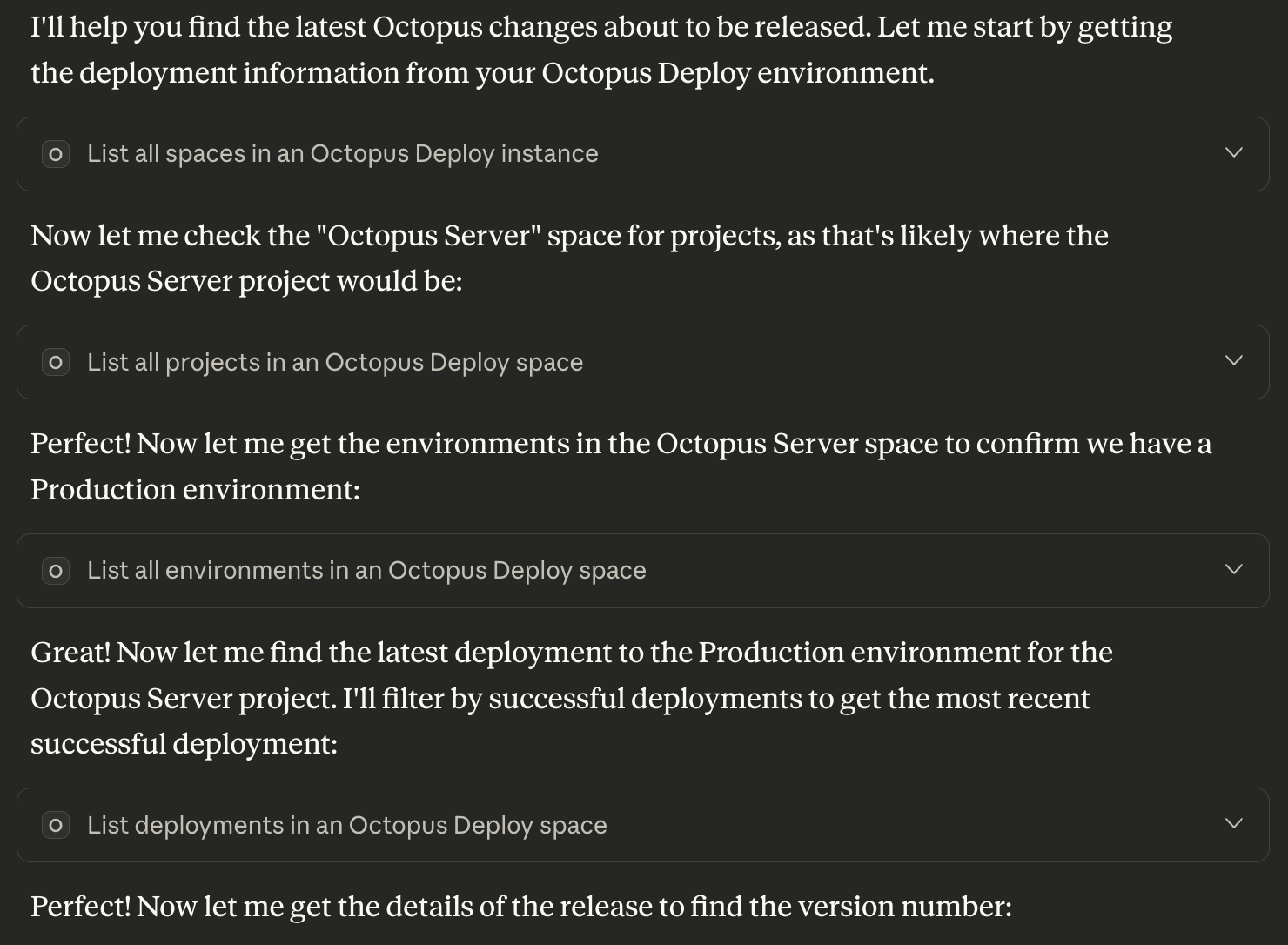

I’d like to know what Octopus changes are just about to be released to our customers. Find the latest untenanted deployment of the Octopus Server project to the Production environment, and then find a commit in GitHub in OctopusDeploy/OctopusDeploy with a tag matching the version number of the deployment, and tell me the commit details so I can understand what is being shipped.

Claude uses the Octopus MCP Server to explore our Octopus instance (of course we use Octopus to ship Octopus!) and find the latest deployment to our Production environment. It then digs into that deployment to find the version number of its release.

Next, it uses the GitHub MCP server to find a commit tagged with that version. Traceability is essential in Continuous Delivery: you have to know which changes in source control correspond to which released versions of your software.
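The traceability lookup Claude performed across the two MCP servers boils down to a simple join between deployment data and source-control tags. The snippet below is a minimal sketch of that logic, assuming hypothetical, already-fetched data: deployment records with `environment`, `tenant`, `version`, and `created` fields, and a map of Git tag names to commit SHAs, with tags named exactly after the release version. It does not call the Octopus or GitHub APIs, and the version numbers and SHAs are made up.

```python
from datetime import datetime
from typing import Optional

# Hypothetical deployment records, standing in for data fetched from Octopus.
deployments = [
    {"environment": "Production", "tenant": None, "version": "1.0.0",
     "created": datetime(2025, 7, 1, 9, 0)},
    {"environment": "Production", "tenant": "Customer-A", "version": "1.1.0",
     "created": datetime(2025, 7, 3, 9, 0)},
    {"environment": "Staging", "tenant": None, "version": "1.2.0",
     "created": datetime(2025, 7, 4, 9, 0)},
    {"environment": "Production", "tenant": None, "version": "1.0.1",
     "created": datetime(2025, 7, 2, 9, 0)},
]

# Hypothetical tag-to-commit map, standing in for data fetched from GitHub.
tags = {"1.0.0": "a1b2c3d", "1.0.1": "e4f5a6b"}


def latest_untenanted(deployments: list, environment: str) -> Optional[dict]:
    """Return the most recent untenanted deployment to an environment, if any."""
    candidates = [
        d for d in deployments
        if d["environment"] == environment and d["tenant"] is None
    ]
    return max(candidates, key=lambda d: d["created"], default=None)


def commit_for_version(tags: dict, version: str) -> Optional[str]:
    """Look up the commit for a release, assuming tags match the version exactly."""
    return tags.get(version)


deployment = latest_untenanted(deployments, "Production")
sha = commit_for_version(tags, deployment["version"]) if deployment else None
print(deployment["version"], sha)  # 1.0.1 e4f5a6b
```

If your tags use a different convention, such as a `v` prefix, the lookup key would need to change accordingly.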

It finds the tagged commit, digs into the details, and gives us a summary of the change - in this case it looks like a small bugfix to ensure backwards compatibility for API clients. Great!

Now, has the deployment gone smoothly? The deployment itself is green, but we know that is only the start of the story. Let’s add another prompt to the same chat to see how the release is behaving out in the real world.

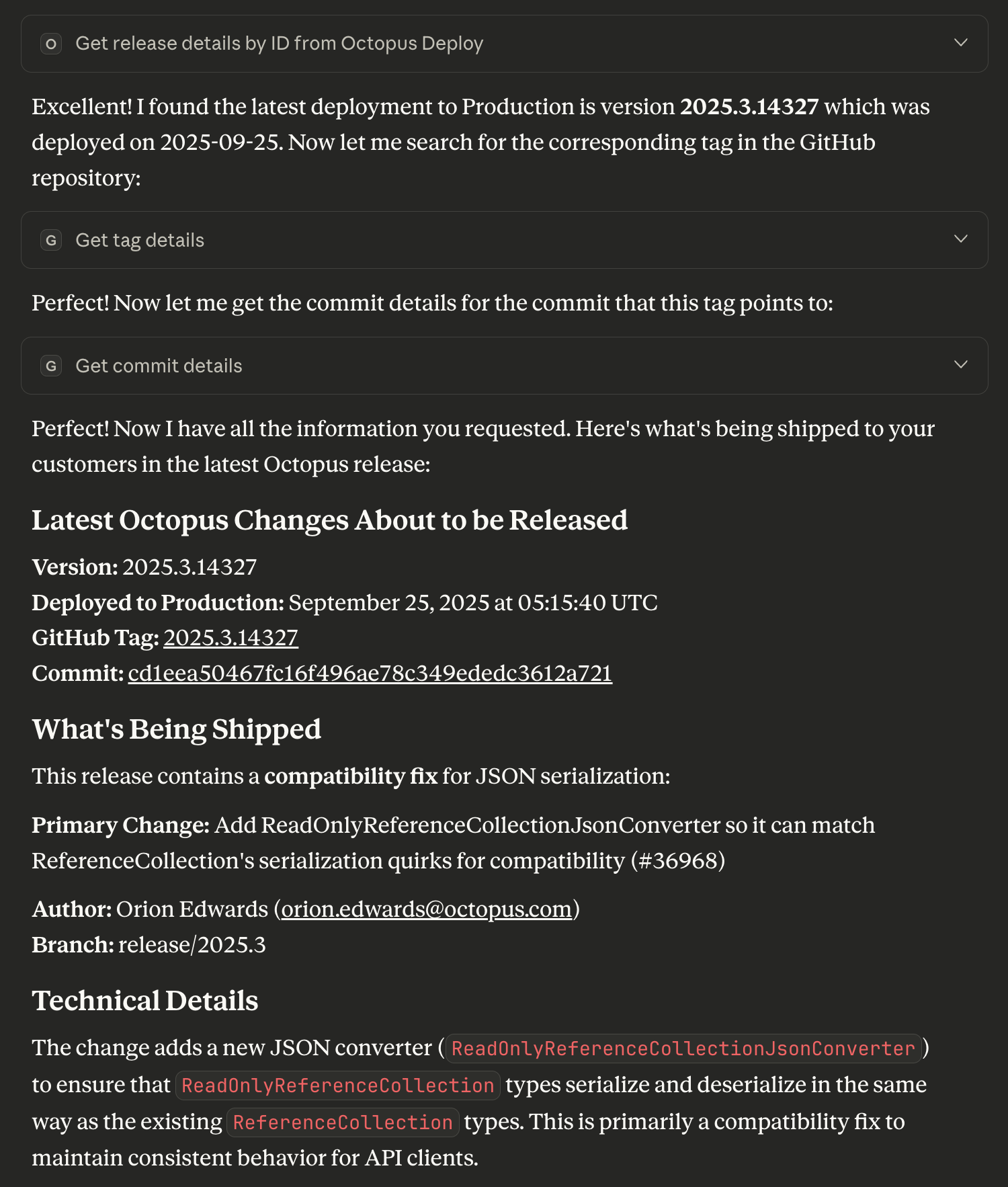

Can you see any errors in Honeycomb regarding this release?

We use Honeycomb as a key part of our observability stack at Octopus, helping us monitor our Production environment to identify, diagnose, and remediate problems as they come up.

LLMs can be quite persistent in their pursuit of an answer. Here, Claude keeps looking for more specific examples of errors that might indicate a serialization problem, because it has inferred that this is what the change was intended to fix. Very clever!

Getting started

Begin your AI-powered DevOps journey today with the Octopus MCP Server. As an early access participant, you’ll help shape these features while getting a first look at capabilities that will transform how teams deploy software.

Get Started with the Octopus MCP Server