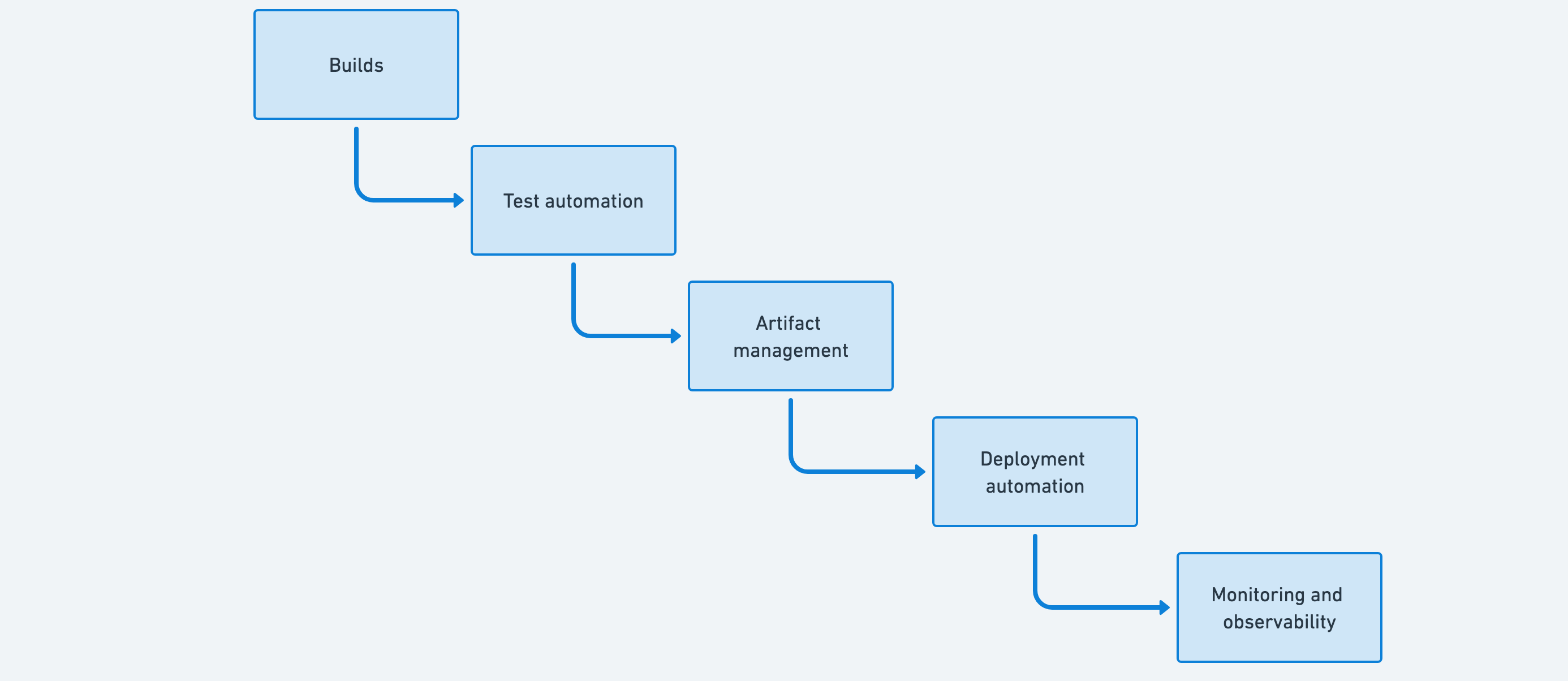

Our Platform Engineering Pulse report gathered a list of features organizations commonly add to their internal developer platforms. We grouped sample platforms into common feature collections and found that platform teams follow similar patterns when implementing deployment pipelines.

They begin by creating an end-to-end deployment pipeline that automates the flow of changes to production and establishes monitoring. Next, they build security into the pipeline by scanning for vulnerabilities and managing secrets. This eventually leads to a DevOps pipeline, which adds documentation and further automation.

You can use this pragmatic evolution of CI/CD pipelines as a benchmark and a source of inspiration for platform teams. It’s like a natural maturity model that has been discovered through practice, rather than one that has been designed upfront.

Stage 1: Deployment pipeline

The initial concern for platform teams is to establish a complete deployment pipeline, allowing changes to flow to production with high levels of automation. Although the goal is a complete yet minimal CI/CD process, it’s reassuring to see that both test automation and monitoring are frequently present at this early stage.

Early deployment pipelines involve integrating several tools, but these tools are designed to work together, making the integration quick and easy. Build servers, artifact management tools, deployment tools, and monitoring tools have low barriers to entry and lightweight touchpoints, so they feel very unified, even when provided by a mix of different tool vendors or open-source options.

In fact, when teams attempt to simplify the toolchain by using a single tool for everything, they often end up with more complexity, because keeping the tools separate enforces a good pipeline architecture.

Builds

Building an application from source code involves compiling code, linking libraries, and bundling resources so you can run the software on a target platform. While this may not be the most challenging task for software teams, build processes can become complex and require troubleshooting that takes time away from feature development.

When a team rarely changes its build process, it tends to be less familiar with the tools it uses. It may not be aware of features that could improve build performance, such as dependency caching or parallelization.
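As a rough illustration of dependency caching, the sketch below derives a cache key from the contents of a lockfile, so the slow install step only runs when the dependencies change. The `DependencyCache` class and the `cache_key` helper are hypothetical stand-ins for whatever your build server provides, not a real tool's API.

```python
import hashlib


def cache_key(lockfile_text: str, prefix: str = "deps") -> str:
    """Derive a cache key from the contents of a dependency lockfile."""
    digest = hashlib.sha256(lockfile_text.encode("utf-8")).hexdigest()
    return f"{prefix}-{digest[:16]}"


class DependencyCache:
    """Hypothetical in-memory stand-in for a build server's dependency cache."""

    def __init__(self):
        self._store = {}
        self.installs = 0  # counts how often the slow install path ran

    def restore_or_install(self, lockfile_text: str) -> str:
        key = cache_key(lockfile_text)
        if key not in self._store:
            # Cache miss: a real build would download packages here.
            self.installs += 1
            self._store[key] = f"installed packages for {key}"
        return self._store[key]


cache = DependencyCache()
cache.restore_or_install("requests==2.31.0\n")
cache.restore_or_install("requests==2.31.0\n")  # unchanged lockfile: cache hit
cache.restore_or_install("requests==2.32.0\n")  # changed lockfile: reinstall
print(f"install steps run: {cache.installs}")
```

Keying the cache on the lockfile contents, rather than on a branch name or date, means the cache is invalidated exactly when the dependencies change.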

Test automation

To shorten feedback loops, you need to run all types of tests continuously. This means you need fast and reliable test automation suites. You should cover functional, security, and performance tests within your deployment pipeline.

You must also consider how to manage test data as part of your test automation strategy. The ability to set up data in a known state will help you make tests less flaky. Test automation enables developers to identify issues early, reduces team burnout, and enhances software stability.
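One way to put data into a known state is to give every test its own freshly seeded database. The sketch below assumes an in-memory SQLite database and a made-up `customers` table; the table, seed rows, and function names are illustrative only.

```python
import sqlite3

# Hypothetical seed data that every test starts from.
SEED_CUSTOMERS = [(1, "Ada"), (2, "Grace")]


def fresh_database() -> sqlite3.Connection:
    """Create an isolated in-memory database containing only the seed data."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO customers VALUES (?, ?)", SEED_CUSTOMERS)
    conn.commit()
    return conn


def count_customers(conn: sqlite3.Connection) -> int:
    return conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]


def test_delete_customer():
    conn = fresh_database()
    conn.execute("DELETE FROM customers WHERE id = ?", (1,))
    assert count_customers(conn) == 1


def test_customer_count():
    # Passes regardless of test order, because the delete above
    # happened in a different database.
    conn = fresh_database()
    assert count_customers(conn) == 2


test_delete_customer()
test_customer_count()
```

Because no test depends on what another test left behind, the suite gives the same result in any order, which removes a common source of flakiness.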

Artifact management

Your Continuous Integration (CI) process creates a validated build artifact that should be the canonical representation of the software version. An artifact repository ensures only one artifact exists for each version and allows tools to retrieve that version when needed.
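The "one artifact per version" rule can be sketched as a repository that refuses to overwrite a published version. This is a minimal in-memory model under that assumption; `ArtifactRepository` and its methods are hypothetical names, not the interface of any particular artifact tool.

```python
import hashlib


class ArtifactRepository:
    """Hypothetical in-memory repository where each version is immutable."""

    def __init__(self):
        self._artifacts = {}

    def publish(self, name: str, version: str, content: bytes) -> str:
        key = (name, version)
        if key in self._artifacts:
            # Rebuilding the same version must not silently replace it.
            raise ValueError(f"{name} {version} already exists and cannot be replaced")
        self._artifacts[key] = content
        # Return a checksum so consumers can verify what they downloaded.
        return hashlib.sha256(content).hexdigest()

    def fetch(self, name: str, version: str) -> bytes:
        return self._artifacts[(name, version)]


repo = ArtifactRepository()
checksum = repo.publish("web-app", "1.4.0", b"compiled application bytes")
artifact = repo.fetch("web-app", "1.4.0")
```

Every later stage fetches the artifact by name and version, so the thing you tested is the thing you deploy.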

Deployment automation

Even at the easier end of the complexity scale, deployments are risky and often stressful. Copying the artifact, updating the configuration, migrating the database, and performing related tasks present numerous opportunities for mistakes or unexpected outcomes.

When teams have more complex deployments or need to deploy at scale, the risk, impact, and stress increase.
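To make the idea concrete, here is a minimal sketch of a deployment expressed as an ordered list of named steps that stops at the first failure. The step names mirror the tasks mentioned above, and the lambdas are placeholders for real work; `run_deployment` is a hypothetical helper, not a real deployment tool's API.

```python
from typing import Callable, List, Tuple

Step = Tuple[str, Callable[[], None]]


def run_deployment(steps: List[Step]) -> List[str]:
    """Run steps in order and stop at the first failure."""
    completed = []
    for name, action in steps:
        try:
            action()
        except Exception as error:
            # Report what already ran so the operator knows the current state.
            raise RuntimeError(
                f"Step '{name}' failed after completing {completed}"
            ) from error
        completed.append(name)
    return completed


log = []
steps = [
    ("copy artifact", lambda: log.append("copied")),
    ("update configuration", lambda: log.append("configured")),
    ("migrate database", lambda: log.append("migrated")),
]
completed = run_deployment(steps)
```

Writing the process down as code means it runs the same way every time, and a failure tells you exactly which steps finished.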

Monitoring and observability

While test automation covers a suite of expected scenarios, monitoring and observability extend your view to how the software is actually used in the real world. Monitoring implementations tend to start with resource usage metrics, but mature into measuring software from the customer and business perspective.

The ability to view information-rich logs can help you understand how faults occur, allowing you to design a more robust system.
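One common way to make logs information-rich is to emit them as structured records with context attached. The sketch below uses Python's standard `logging` module with a small JSON formatter; the `checkout` logger name, the `context` field, and the sample values are assumptions made for illustration.

```python
import io
import json
import logging


class JsonFormatter(logging.Formatter):
    """Format each log record as a single JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "level": record.levelname,
            "message": record.getMessage(),
            # "context" is a custom field supplied through the `extra` argument.
            "context": getattr(record, "context", {}),
        }
        return json.dumps(entry)


stream = io.StringIO()  # stands in for a log shipper or file
handler = logging.StreamHandler(stream)
handler.setFormatter(JsonFormatter())

logger = logging.getLogger("checkout")
logger.setLevel(logging.INFO)
logger.addHandler(handler)
logger.propagate = False

logger.error(
    "payment failed",
    extra={"context": {"order_id": 1234, "provider": "example-pay"}},
)

entry = json.loads(stream.getvalue())
```

With the context stored as fields rather than buried in the message text, you can filter and aggregate faults by order, provider, or any other attribute.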

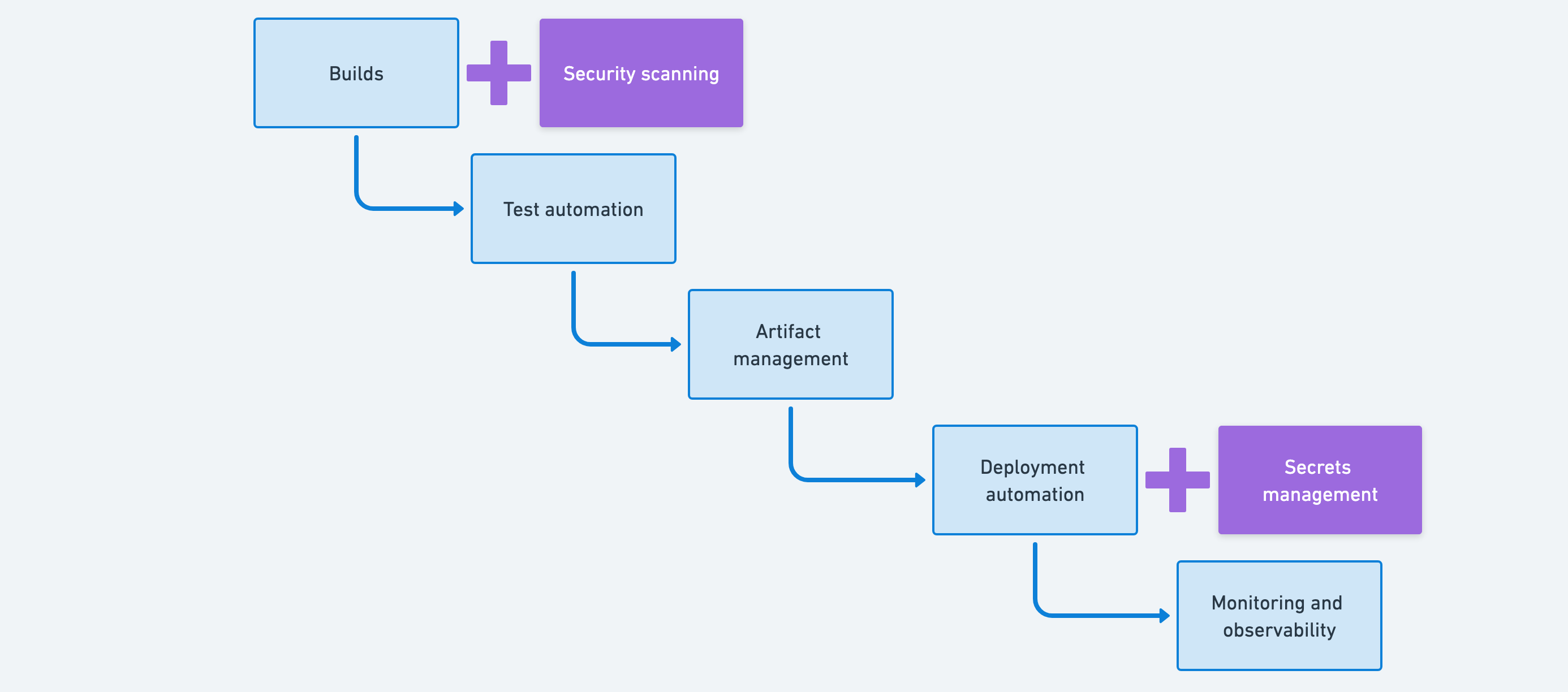

Stage 2: Secure pipeline

The natural next step for platform teams is to integrate security directly into the deployment pipeline. At this stage, teams add security scanning to automatically check for code weaknesses and vulnerable dependencies, alongside secrets management to consolidate how credentials and API keys are stored and rotated.

This shift is significant because security measures are now addressed earlier in the pipeline, reducing the risk of incidents in production. Rather than treating security as a separate concern that happens after development, it becomes part of the continuous feedback loop.

Security integration at this stage typically involves adding new tools to the existing pipeline, with well-defined touchpoints and clear interfaces. Security scanners and secrets management tools are designed to integrate with CI/CD systems, making the additions feel like natural extensions of the deployment pipeline rather than disruptive changes.

Security scanning

While everyone should take responsibility for software security, having automated scanning available within a deployment pipeline can help ensure security isn’t forgotten or delayed. Automated scanning can provide developers with rapid feedback.

You can supplement automated scanning with security reviews and close collaboration with information security teams.
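At its simplest, a dependency scan compares pinned versions against a list of known advisories. The sketch below shows only that idea: the `ADVISORIES` table, the package name, and the advisory identifier are all made up, and a real scanner would draw on a maintained vulnerability database.

```python
# Hypothetical advisory data, keyed by (package name, version).
ADVISORIES = {
    ("examplelib", "1.0.0"): "EXAMPLE-0001: made-up advisory for illustration",
}


def parse_pins(requirements_text: str) -> list:
    """Extract (name, version) pairs from lines such as 'name==1.2.3'."""
    pins = []
    for line in requirements_text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if "==" in line:
            name, version = line.split("==", 1)
            pins.append((name.strip().lower(), version.strip()))
    return pins


def scan(requirements_text: str) -> list:
    """Return the advisories that match any pinned dependency."""
    return [
        ADVISORIES[pin]
        for pin in parse_pins(requirements_text)
        if pin in ADVISORIES
    ]


findings = scan("examplelib==1.0.0\notherlib==2.3.1  # unaffected\n")
```

A pipeline step could fail the build whenever `findings` is non-empty, which gives developers feedback within minutes of adding a vulnerable dependency.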

Secrets management

Most software systems must connect securely to data stores, APIs, and other services. The ability to store secrets in a single location prevents the ripple effect when a secret is rotated. Instead of updating many tools with a new API key, you can manage the change centrally with a secret store.

When you deploy an application, you usually have to apply the correct secrets based on the environment or other characteristics of the deployment target.
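The sketch below models both ideas: a central store keyed by environment, and configuration that refers to secrets by name so a rotation happens in one place. `SecretStore`, `render_config`, and the `secret:` prefix are hypothetical conventions chosen for this example, and a real store would encrypt values and control access.

```python
class SecretStore:
    """Hypothetical in-memory secret store, keyed by environment and name."""

    def __init__(self):
        self._secrets = {}

    def set(self, environment: str, name: str, value: str) -> None:
        self._secrets[(environment, name)] = value

    def get(self, environment: str, name: str) -> str:
        try:
            return self._secrets[(environment, name)]
        except KeyError:
            raise KeyError(
                f"No secret '{name}' for environment '{environment}'"
            ) from None


def render_config(template: dict, store: SecretStore, environment: str) -> dict:
    """Replace values of the form 'secret:NAME' with the environment's secret."""
    resolved = {}
    for key, value in template.items():
        if isinstance(value, str) and value.startswith("secret:"):
            resolved[key] = store.get(environment, value[len("secret:"):])
        else:
            resolved[key] = value
    return resolved


store = SecretStore()
store.set("test", "payments-api-key", "test-key-1")
store.set("production", "payments-api-key", "prod-key-1")

template = {"timeout_seconds": 30, "api_key": "secret:payments-api-key"}
test_config = render_config(template, store, "test")

# Rotating the production key is a single change in the store.
store.set("production", "payments-api-key", "prod-key-2")
production_config = render_config(template, store, "production")
```

The template never contains a secret value, so it is safe to keep in source control alongside the application.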

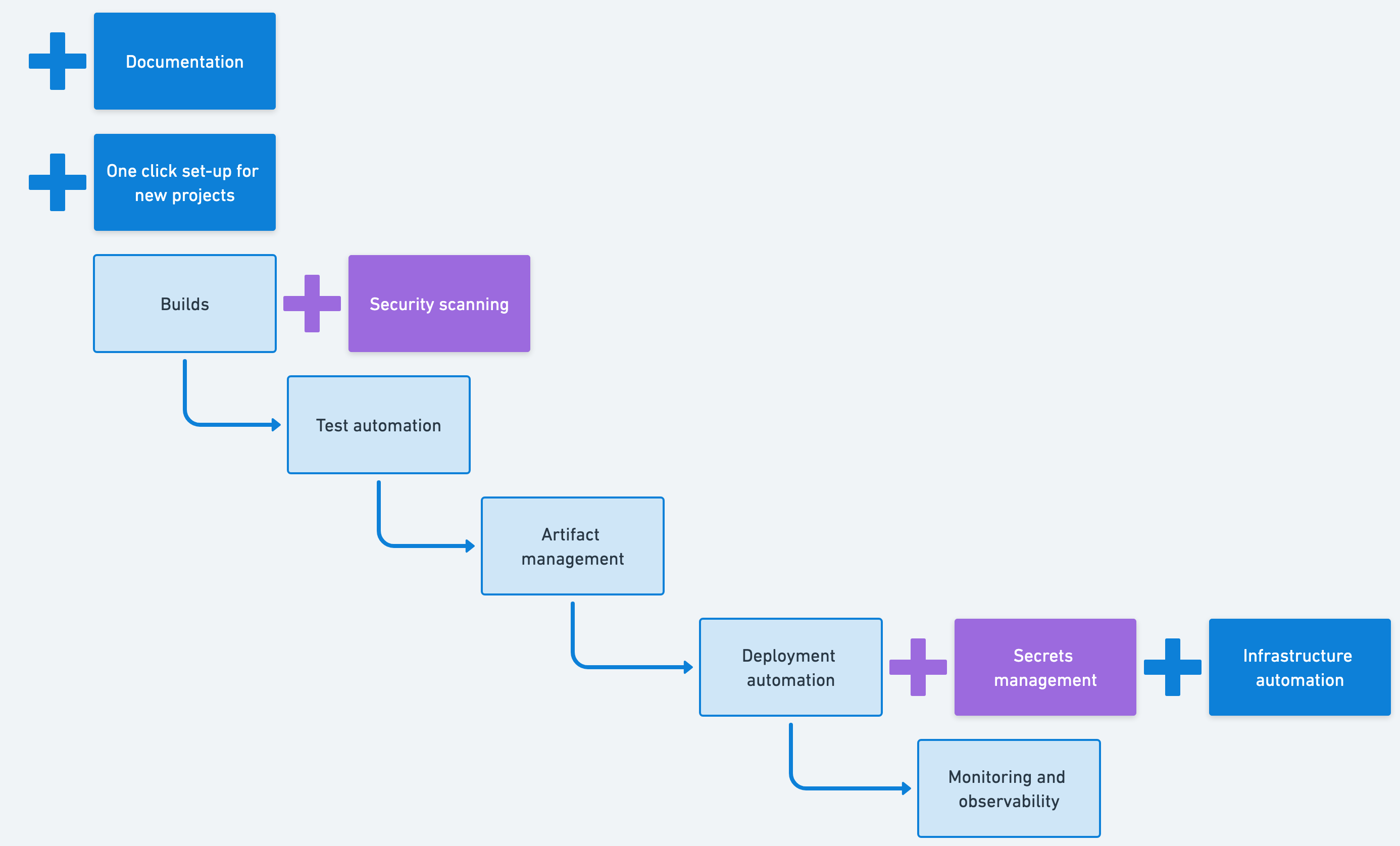

Stage 3: DevOps pipeline

The DevOps pipeline represents a shift from building deployment infrastructure to accelerating developer productivity. At this stage, platform teams add documentation capabilities, infrastructure automation, and one-click setup for new projects. These features focus on removing friction from the developer experience.

The impact of this stage is felt most strongly at the start of new projects and when onboarding new team members. Instead of spending days or weeks on boilerplate setup, teams get a walking skeleton that fast-forwards them directly to writing their first unit test.

While the earlier stages focused on moving code through the pipeline efficiently and securely, this stage is about making the pipeline itself easy to replicate and understand. The automation added here helps teams maintain consistency across projects while giving developers the freedom to focus on features rather than configuration.

Documentation

To provide documentation as a service to teams, you may either supply a platform for storing and finding documentation or use automation to extract documentation from APIs, creating a service directory for your organization.

For documentation to be successful, it must be clear, well-organized, up-to-date, and easily accessible.
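As a small illustration of extracting documentation automatically, the sketch below builds a plain-text service directory from function docstrings and signatures using Python's `inspect` module. The `billing` service and its handlers are invented for the example; a real platform would more likely read API descriptions published by each service.

```python
import inspect


# Hypothetical API handlers belonging to a "billing" service.
def get_invoice(invoice_id):
    """Return a single invoice by its identifier."""


def list_invoices():
    """List invoices, newest first."""


SERVICES = {"billing": [get_invoice, list_invoices]}


def build_directory(services: dict) -> str:
    """Build a text directory from each handler's signature and docstring."""
    lines = []
    for service, handlers in sorted(services.items()):
        lines.append(service)
        for handler in handlers:
            doc = inspect.getdoc(handler) or "undocumented"
            summary = doc.splitlines()[0]
            signature = inspect.signature(handler)
            lines.append(f"  {handler.__name__}{signature}: {summary}")
    return "\n".join(lines)


directory = build_directory(SERVICES)
print(directory)
```

Generating the directory from the code keeps it up to date without relying on someone remembering to edit a separate document.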

One-click setup for new projects

Setting up a new project involves several boilerplate tasks: configuring a source code repository, establishing a project template, configuring deployment pipelines, and setting up associated tools. Teams often have established standards, but manual setup means projects unintentionally drift from the target setup.

One-click automation helps teams set up a walking skeleton with sample test projects, builds, and deployment automation. This ensures a consistent baseline and shortens the time before teams start writing meaningful code.
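A walking skeleton generator can be as simple as a template of files written out for each new project. The sketch below assumes a made-up template with a README, a package folder, a smoke test, and a placeholder pipeline file; the file names and the `scaffold` function are illustrative only.

```python
import tempfile
from pathlib import Path

# Hypothetical project template: relative path -> file content.
TEMPLATE = {
    "README.md": "# {name}\n",
    "src/{package}/__init__.py": "",
    "tests/test_smoke.py": "def test_smoke():\n    assert True\n",
    "pipeline.yml": "# placeholder build and deployment definition for {name}\n",
}


def scaffold(root: Path, name: str) -> list:
    """Create a project skeleton under root/name and return the files written."""
    package = name.replace("-", "_")
    created = []
    for relative, content in TEMPLATE.items():
        path = root / name / relative.format(name=name, package=package)
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_text(content.format(name=name, package=package))
        created.append(path)
    return created


with tempfile.TemporaryDirectory() as tmp:
    files = scaffold(Path(tmp), "billing-service")
    names = sorted(path.relative_to(tmp).as_posix() for path in files)
    readme = (Path(tmp) / "billing-service" / "README.md").read_text()
```

Because every project starts from the same template, the standards live in one place and new projects match them by default.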

Infrastructure automation

Traditional ClickOps infrastructure is hand-crafted and often drifts from the intended configuration over time. Environments may be set up differently, which means problems surface in one environment but not another. Equally, two servers in the same environment with the same intended purpose may be configured differently, which makes troubleshooting harder.

Infrastructure automation solves these problems, making it easier to create new environments, spin up and tear down ephemeral (temporary) environments, and recover from major faults.
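The drift described above can be detected by comparing a declared configuration with what is actually running. The sketch below does that for two plain dictionaries; the setting names and values are invented, and real infrastructure tools perform this comparison against live resources.

```python
def find_drift(desired: dict, actual: dict) -> dict:
    """Return every setting whose actual value differs from the desired one."""
    drift = {}
    for key in sorted(set(desired) | set(actual)):
        want = desired.get(key, "<absent>")
        have = actual.get(key, "<absent>")
        if want != have:
            drift[key] = {"desired": want, "actual": have}
    return drift


# Hypothetical declared configuration and the state of one server.
desired = {"instance_size": "medium", "tls": "1.3", "replicas": 2}
actual = {"instance_size": "medium", "tls": "1.2", "replicas": 2, "debug": True}

drift = find_drift(desired, actual)
```

Here the comparison reports the downgraded `tls` setting and the undeclared `debug` flag, which are exactly the kind of hand-made changes that make two servers behave differently.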

Evolving your platform’s pipelines

Whether you choose to introduce features according to this pattern or decide to approach things differently, it is advisable to take an evolutionary approach. Delivering a working solution that covers the flow of changes from commit to production brings early value. As the platform evolves, it improves that flow and takes on broader concerns.

Your organization may have additional compliance or regulatory requirements that could become part of a “compliant pipeline”, or you may have a heavyweight change approval process you could streamline with an “approved pipeline”.

Regardless of the requirements you choose to bring under the platform’s capabilities, you’ll be more successful if you deliver a working pipeline first and then evolve it by adding features.

Happy deployments!