Our upcoming report examines how organizations adopt Platform Engineering and what helps them succeed with it. We’ll soon launch a broader survey to dig deeper into Platform Engineering patterns and practices, but one area has already surfaced: platform adoption strategy. That is, whether companies make the platform optional or mandatory, and the perceived success factors for each approach.

The Platform Engineering community has been pretty clear on this one. Treat your platform like a product, let it compete for internal market share, and generally, don’t mandate adoption. After all, if your platform genuinely provides a path to success and compliance with the organization’s standards, developers should naturally gravitate toward it, right?

This philosophy draws heavily from product management thinking. Just as external products succeed by solving customer problems better than alternatives, internal platforms should win developer adoption by genuinely improving their daily experience. The theory suggests that when platforms must compete for users, they naturally evolve to be more user-focused, innovative, and valuable.

Mandatory adoption

Let’s be honest about why mandatory platforms are so appealing. They work. At least from an organizational standpoint. When leadership mandates platform adoption, it’s because the platform initiative was created and funded to solve specific business problems. Our research shows that the top three reasons for adopting Platform Engineering are to:

- Improve efficiency

- Standardize processes

- Increase developer productivity

From a business perspective, these are legitimate wins.

The budget numbers back up the mandatory approach too. Our research found that mandatory platforms report almost 2.5x higher confidence that their funding will remain secure over the next five years.

Optional adoption

Optional platforms can face real challenges. They struggle with budget uncertainty, being 2x more likely than mandatory platforms to worry about funding cuts. But when platforms must compete for users, something important happens: they hyper-focus on solving real developer problems, because frustrated users will simply choose alternatives if they don’t.

Organizations often undermine their own platform adoption by inconsistently enforcing standards. When teams can bypass organizational requirements for security, compliance, and operational practices, they see little value in adopting an internal developer platform. These teams happily create their own build pipelines, deploy infrastructure manually through portals, and sidestep approval processes. After all, they’re avoiding both the platform and the problems it was designed to solve.

The dynamic shifts dramatically when organizations enforce standards uniformly. When teams must meet security, compliance, and operational requirements regardless of whether they use the platform, the platform transforms from an obstacle into the obvious solution. Rather than struggling to meet complex requirements independently, teams naturally gravitate toward a platform that makes compliance straightforward and built-in.

Users choose these platforms because they genuinely make work easier while allowing them to take actions without triple-checking whether they comply with the organization’s rules.

The perception gap between builders and users

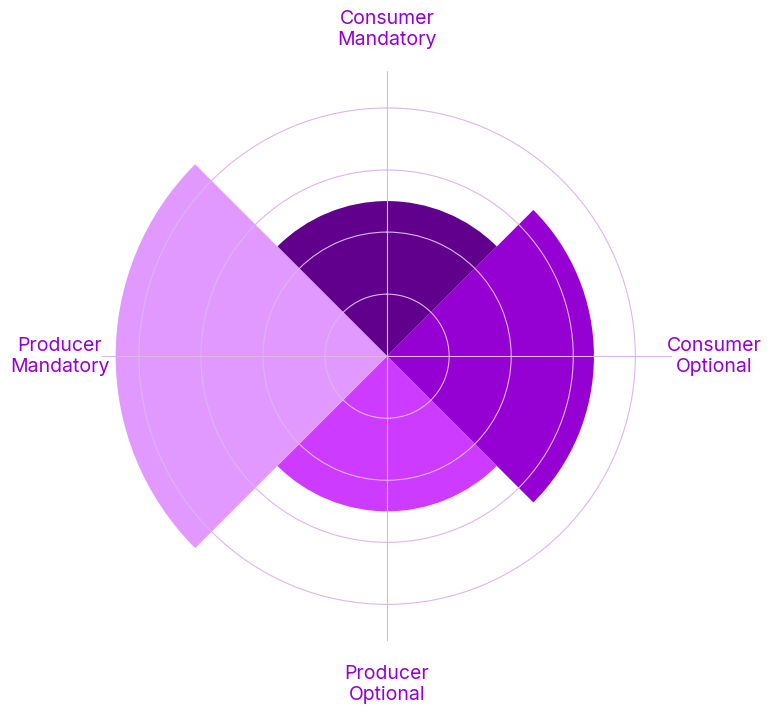

This is where our research gets very interesting. We found a significant disconnect between how platform teams (producers) measure success, what consumers experience, and how the platform’s sponsors view the platform’s success.

For mandatory platforms, 87.5% of producers said the platform met many or all of its goals; however, only 50% of those consuming the platform agreed.

For optional platforms, 50% of producers said the platform met many or all of its goals, compared to 67% of consumers.
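One way to see the size of this disconnect is to express each result as the difference, in percentage points, between the producer and consumer ratings. The short Python sketch below does this using only the survey figures reported above; the `results` dictionary and `perception_gap` function are illustrative names, not part of any tool or of our research methodology.

```python
# Share of respondents (percent) who said the platform met many or all
# of its goals, using the survey figures reported above.
results = {
    "mandatory": {"producers": 87.5, "consumers": 50.0},
    "optional": {"producers": 50.0, "consumers": 67.0},
}


def perception_gap(scores: dict) -> float:
    """Return producer rating minus consumer rating, in percentage points.

    A positive value means producers rate the platform higher than its
    consumers do; a negative value means consumers rate it higher.
    """
    return scores["producers"] - scores["consumers"]


for strategy, scores in results.items():
    # Prints the signed gap with one decimal place, e.g. "+37.5".
    print(f"{strategy}: {perception_gap(scores):+.1f} points")
```

Running it prints `mandatory: +37.5 points` and `optional: -17.0 points`, so the gap points in opposite directions for the two strategies.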

Producers think mandatory platforms are more successful, whereas consumers are more likely to rate an optional platform as successful. Mandatory platforms may suffer from producer bias, where producers overestimate the value of the platform they are building. That’s why moving beyond assumptions and measuring multiple aspects of the platform is crucial to tracking success.

The executives and budget holders who sponsor platform initiatives overwhelmingly find that only some goals have been met, regardless of adoption strategy. This suggests that even when platforms achieve technical success, they may not deliver the business outcomes that justify their investment. This disconnect can stem from unclear or misaligned platform goals. When platform teams aren’t certain what business problems they should solve, they default to technical achievements rather than user or business outcomes.

Measuring platform success

Whether the platform is mandatory or optional, you’ll hear people cite adoption metrics, usually as evidence of how successful the platform is. However, adoption alone tells you almost nothing about whether the platform is successful. Mandatory platforms should, by definition, have high adoption rates. For optional platforms, a low adoption rate can signal a problem worth investigating, but it doesn’t tell the whole story. Either way, adoption alone won’t tell you whether the business is getting value, whether users are satisfied, or whether the platform is meeting the goals it was set up to achieve.

In fact, no single metric will tell you much about a platform’s goals and success, which is why it’s crucial to capture metrics that tie back to those goals. Platform sponsors want to know whether the money and time being invested are making an impact. Capturing and presenting vanity metrics like “90% adoption rate” or “five nines of uptime” might make platform teams feel good, but they don’t answer the fundamental question: is this platform helping our developers be more productive and our business more successful?

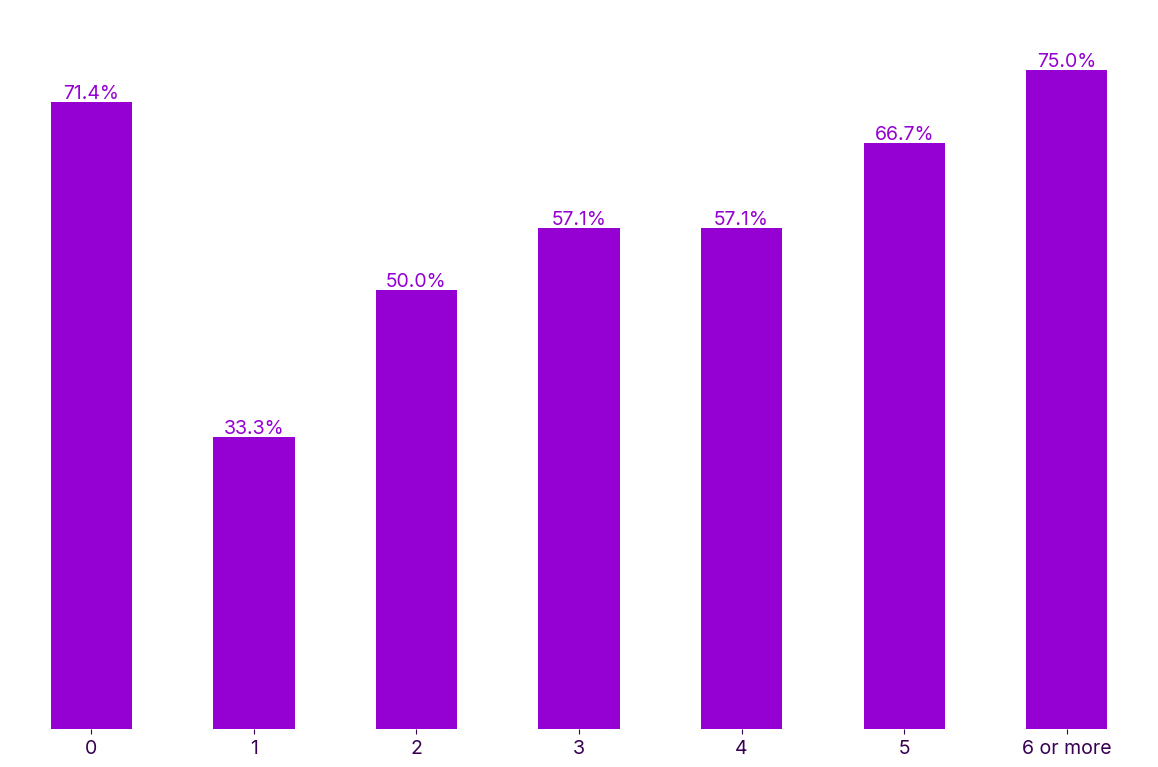

We found that organizations measuring more dimensions of their platform were more likely to be successful, with a clear correlation between the number of metrics tracked and platform success rates. Organizations measuring 6 or more different aspects of their platform reported the highest success rates.

We found that 23% of organizations don’t use metrics and instead rely on intuitive or subjective assessments. In its State of Platform Engineering report, Humanitec found that 44% of organizations don’t measure any metrics. Not measuring anything creates a zero-metric success illusion, where platform teams report high success rates simply because they aren’t collecting data that might contradict their assumptions. Among organizations that do measure, many rely on technical metrics that make platform teams feel good but miss user satisfaction and business impact entirely.

The solution is user-centric measurement. The MONK metrics are one way to measure your Platform Engineering initiative: they balance benchmarkable metrics with user satisfaction and can help you track the platform’s progress against business objectives.

When optional tooling naturally wins

Many years ago, I worked at a university, and my team managed, among hundreds of other applications, the Microsoft Exchange environment for thousands of staff members. Creating shared resources like distribution lists, rooms, or shared mailboxes required several teams to be involved.

The ticket went from the service desk to team 1, to team 2, back to team 1, back to the service desk, and finally back to the user. It took an even longer path if information was missing or something needed clarification. A new resource request from an end user would take days, sometimes weeks. Many rules governed resource names, email address formats, mail routing configurations, permissions, and so on.

It was a frustrating experience for everyone involved, and we got dozens of these requests every month, sometimes more. An independent consultant who surveyed the business at the time found that email was the #1 critical tool in day-to-day use. We had buy-in from our managers to improve the process, because it distracted systems teams from strategic work and left customers frustrated while they waited days for their requests to be completed.

We decided to write a tool to automate the process. Initially, this was purely selfish, as we wanted to eliminate the manual work and reduce errors when we received the ticket. However, as we developed it, we realized we could give this tool directly to the service desk staff, who received the initial requests from the end users. We engaged with the service desk team, told them what we were planning, and listened to what they would need from the tool before adopting it.

We didn’t mandate the use of the tool. The service desk team could still assign the ticket to us, and we would use the tool to create the resource. However, we found that the service desk team used the tool 100% of the time, and only issues with the tool itself were escalated to our team.

Everyone was happier. The service desk could provision resources immediately instead of waiting days for our team to get to the ticket. They had confidence that they were doing it correctly because the tool guided them through the process and validated all the inputs. Users were delighted because their requests were fulfilled instantly. We were happier as our team could focus on more strategic work instead of routine provisioning tasks, which were viewed as tedious administrative work.

I get it: this is a bespoke example, and it’s not an entire platform. But that’s kind of the point. Don’t try to build the whole platform on day 1. Find genuine pain points in existing workflows today, and start there, not with abstract goals like “improve security” that are challenging for platform creators to interpret, implement, and measure. My aim with this story isn’t to tell you about specific technology or even automation. It’s about what happens when you build something that genuinely improves the experience for everyone involved. When platforms deliver real value, adoption becomes natural rather than forced.

Building platforms worth choosing

While our initial research shows that mandatory platforms achieve specific organizational metrics and enjoy better budget security, it also reveals why the Platform Engineering community advocates so strongly for optionality. The most successful platforms we studied had something in common: they would thrive even if they weren’t mandated. Consumers chose them not because they had to, but because they were the best tool for getting work done quickly and aligning with organizational standards.

So before you decide on your adoption strategy, ask yourself this question: If this platform weren’t required, would consumers still choose it? If the answer is no, you might have a platform problem, not an adoption problem. And that’s precisely the problem that Platform Engineering, done well, is designed to solve.

Happy Deployments!