If you’ve ever asked a platform like ChatGPT to book your next holiday, order groceries, or schedule a meeting, you’ll understand the potential of large language models (LLMs), and you’ve likely been frustrated by a response like "I'm not able to book flights directly."

By default, LLMs are disconnected from the internet and can’t perform tasks on your behalf. But they feel so tantalizingly close to being your own personal assistant.

The Model Context Protocol (MCP) is an open standard that enables LLMs to connect to external tools and data sources. When an LLM is connected to an MCP server, it gains the ability to perform actions on your behalf, transforming it from a passive information source into an active agent.

Agentic AI systems go one step further by creating AI agents that follow specific instructions to perform tasks autonomously, as this quote from IBM describes:

Agentic AI is an artificial intelligence system that can accomplish a specific goal with limited supervision. It consists of AI agents—machine learning models that mimic human decision-making to solve problems in real time.

In this post, we’ll explore how to create a simple AI agent connecting the Octopus and GitHub MCP servers to implement an agentic AI system.

Prerequisites

The sample application demonstrated in this post is written in Python. You can download Python from python.org.

We’ll also use uv to manage our virtual environment. You can install uv by following the documentation.

Dependencies

Our AI agent will use LangChain, a popular framework for building AI applications.

Save the following dependencies to a requirements.txt file:

langchain-mcp-adapters
langchain-ollama
langchain-azure-ai
langgraph

Create a virtual environment and install the dependencies:

uv venv
# This instruction is provided by the previous command and is specific to your OS
source .venv/bin/activate
uv pip install -r requirements.txt

Text processing functions

A big part of working with LLMs is cleaning and processing text. AI agents sit at the intersection between LLMs consuming and generating natural language and imperative code that requires predictable inputs and outputs. In practice, this means AI agents spend a lot of time manipulating strings.

We start with a function to trim the leading and trailing whitespace from each line in a block of text:

def remove_line_padding(text):
    """
    Remove leading and trailing whitespace from each line in the text.
    :param text: The text to process.
    :return: The text with leading and trailing whitespace removed from each line.
    """
    return "\n".join(line.strip() for line in text.splitlines() if line.strip())

Next, we have a function to remove <think>...</think> tags and their content from the text. The <think> tag is an informal convention used by reasoning LLMs to wrap the model’s internal reasoning steps.

OpenAI describes reasoning models like this:

Reasoning models think before they answer, producing a long internal chain of thought before responding to the user.

We typically don’t want to display the internal chain of thought in the final output, so we remove it:

def remove_thinking(text):
    """
    Remove <think>...</think> tags and their content from the text.
    :param text: The text to process.
    :return: The text with <think>...</think> tags and their content removed.
    """
    stripped_text = text.strip()
    if stripped_text.startswith("<think>") and "</think>" in stripped_text:
        return re.sub(r"<think>.*?</think>", "", stripped_text, flags=re.DOTALL)
    return stripped_text

Extracting responses

LangChain provides a common abstraction over many AI platforms. These platforms will often have their own specific APIs. Consequently, LangChain functions frequently return generic dictionary objects containing the response to API calls. It is our responsibility to access the required data from these dictionaries.

We’ll be using the Azure AI Foundry service for this demo, and making use of the Chat completions API.

The response returned when we invoke the agent later in this post is a dictionary with a messages key containing a list of message objects. Here we extract the list of messages and return the content of the last message, or an empty string if no messages are present:

def response_to_text(response):
    """
    Extract the content from the last message in the response.
    :param response: The response dictionary containing messages.
    :return: The content of the last message, or an empty string if no messages are present.
    """
    messages = response.get("messages", [])
    if not messages:
        return ""
    # Index rather than pop() so the response is not modified as a side effect
    return messages[-1].content

Setting up MCP servers

We can now start building the core functionality of our AI agent.

Our agent will use two MCP servers: the Octopus MCP server to interact with an Octopus instance, and the GitHub MCP server to interact with GitHub repositories.

These MCP servers are represented by the MultiServerMCPClient class, which is a client for connecting to multiple MCP servers:

async def main():
    """
    The entrypoint to our AI agent.
    """
    client = MultiServerMCPClient(
        {
            "octopus": {
                "command": "npx",
                "args": [
                    "-y",
                    "@octopusdeploy/mcp-server",
                    "--api-key",
                    os.getenv("OCTOPUS_CLI_API_KEY"),
                    "--server-url",
                    os.getenv("OCTOPUS_CLI_SERVER"),
                ],
                "transport": "stdio",
            },
            "github": {
                "url": "https://api.githubcopilot.com/mcp/",
                "headers": {"Authorization": f"Bearer {os.getenv('GITHUB_PAT')}"},
                "transport": "streamable_http",
            },
        }
    )

We then create an instance of the AzureAIChatCompletionsModel class to allow us to interact with the Azure AI service. This class is responsible for building the Azure AI API requests and processing the responses:

LangChain provides a variety of classes to interact with different AI platforms. The ability to swap out one AI platform for another is a key benefit of frameworks like LangChain.

    # Use an Azure AI model
    llm = AzureAIChatCompletionsModel(
        endpoint=os.getenv("AZURE_AI_URL"),
        credential=os.getenv("AZURE_AI_APIKEY"),
        model="gpt-5-mini",
    )

The MultiServerMCPClient provides access to the tools exposed by each MCP server:

Tools are typically self-describing functions that an LLM can call. It is possible to create tools manually. However, the benefit of using MCP servers is that they expose tools in a consistent way that any MCP client can consume.
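To make "self-describing" concrete, here is a minimal sketch, using only the Python standard library, of how a function's name, docstring, and type hints can be turned into the kind of description an LLM needs to decide when and how to call it. The describe_tool helper and the get_latest_release function are hypothetical names invented for this example; they are not part of LangChain or either MCP server, and real frameworks produce richer schemas than this.

```python
import inspect


def get_latest_release(project: str, space: str = "Default") -> str:
    """Return the latest release version for a project in a space."""
    # Hypothetical stand-in: a real tool would call an API here.
    return f"{space}/{project}: 1.0.0"


def describe_tool(func):
    """Build a simple description of a function from its signature and docstring."""
    signature = inspect.signature(func)
    parameters = {}
    for name, param in signature.parameters.items():
        annotation = param.annotation
        if annotation is inspect.Parameter.empty:
            # Fall back to "any" when a parameter has no type hint
            type_name = "any"
        else:
            type_name = getattr(annotation, "__name__", str(annotation))
        parameters[name] = {
            "type": type_name,
            # A parameter without a default value must be supplied by the caller
            "required": param.default is inspect.Parameter.empty,
        }
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "parameters": parameters,
    }


description = describe_tool(get_latest_release)
print(description)
```

MCP servers publish this kind of metadata for us, so the client only has to ask for it: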

    tools = await client.get_tools()

The llm (which is the interface to the Azure AI service) and the tools (which are the functions exposed by the MCP servers) can then be used to create a ReAct agent:

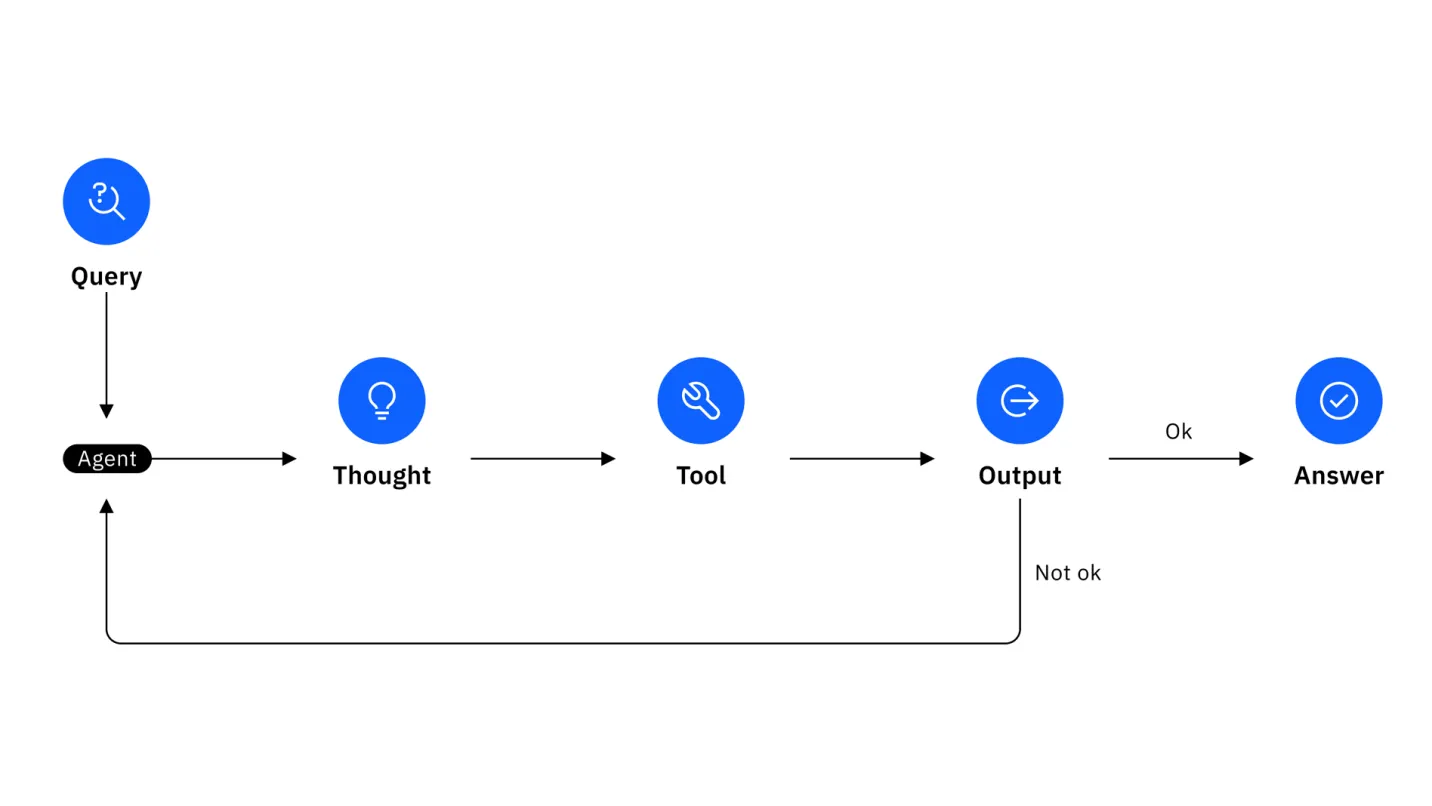

ReAct agents are described in the ReAct paper and implement a feedback loop to iteratively reason and act:
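As a rough illustration of that loop, and not of how LangGraph implements it, the sketch below uses a scripted stand-in for the model. The names fake_llm, run_react_loop, and the single get_latest_release tool are all hypothetical and exist only for this example.

```python
def get_latest_release(project):
    """Hypothetical tool: pretend to look up a project's latest release."""
    return f"{project} is at release 1.2.3"


TOOLS = {"get_latest_release": get_latest_release}


def fake_llm(history):
    """
    Scripted stand-in for a model. It requests a tool call first, then
    answers once an observation is present in the history.
    """
    observations = [entry for entry in history if entry["role"] == "tool"]
    if not observations:
        return {"action": "get_latest_release", "input": "Octopus Copilot"}
    return {"answer": f"Done: {observations[-1]['content']}"}


def run_react_loop(question, llm, tools, max_steps=5):
    """Alternate between reasoning (the model) and acting (tool calls)."""
    history = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        decision = llm(history)
        if "answer" in decision:
            # The model has enough information to respond
            return decision["answer"]
        # Act: run the requested tool and feed the observation back in
        result = tools[decision["action"]](decision["input"])
        history.append({"role": "tool", "content": result})
    return "Stopped: step limit reached"


answer = run_react_loop("What is the latest release?", fake_llm, TOOLS)
print(answer)
```

The step limit is a design choice worth keeping in any such loop, since a model that never settles on an answer would otherwise call tools indefinitely. In our application, LangGraph's prebuilt helper assembles the real loop from the llm and tools: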

    agent = create_react_agent(llm, tools)

We now have everything we need to instruct our AI agent to perform a task. Here, we ask the agent to provide a risk assessment of changes in the latest releases of an Octopus project. This works because this project implements build information to associate Git commits with each Octopus release:

You’ll need to replace the name of the Octopus space in the prompt with a space that exists in your Octopus instance.

    response = await agent.ainvoke(
        {
            "messages": remove_line_padding(
                """
                In Octopus, get all the projects from the "Octopus Copilot" space.
                In Octopus, for each project, get the latest release.
                In GitHub, for each release, get the git diff from the Git commit.
                Scan the diff and provide a summary-level risk assessment.
                You will be penalized for asking for user input.
                """
            )
        }
    )

The prompt provided to the agent highlights the power of agentic AI. It is written in natural language and describes a non-trivial set of steps the same way you might describe the task to a developer.
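It can help to see exactly what the model receives. The sketch below repeats the logic of remove_line_padding from earlier and applies it to the same prompt, showing that the indentation and blank lines introduced by the triple-quoted string are stripped before the text is sent.

```python
def remove_line_padding(text):
    """Same logic as the function defined earlier in this post."""
    return "\n".join(line.strip() for line in text.splitlines() if line.strip())


prompt = remove_line_padding(
    """
    In Octopus, get all the projects from the "Octopus Copilot" space.
    In Octopus, for each project, get the latest release.
    In GitHub, for each release, get the git diff from the Git commit.
    Scan the diff and provide a summary-level risk assessment.
    You will be penalized for asking for user input.
    """
)

# Each instruction is now on its own line with no surrounding whitespace
for number, line in enumerate(prompt.splitlines(), start=1):
    print(f"{number}: {line}")
```

Keeping one instruction per line makes the prompt easy to reorder or extend without touching any of the surrounding code.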

If you’re unfamiliar with prompt engineering, the instructions “penalizing” specific behavior might seem odd. This prompting style addresses a common limitation of LLMs, where they struggle with being told what not to do. The particular phrasing used here comes from the paper Principled Instructions Are All You Need for Questioning, which provides several common prompt engineering patterns.

The response from the agent is processed and printed to the console:
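To see what this processing does without calling a real model, the sketch below feeds a stand-in response through condensed versions of the two helper functions. The SimpleNamespace objects are an assumption made for illustration; they only mimic the content attribute of the message objects the agent returns.

```python
import re
from types import SimpleNamespace


def remove_thinking(text):
    """Drop a leading <think>...</think> block, as described earlier."""
    stripped_text = text.strip()
    if stripped_text.startswith("<think>") and "</think>" in stripped_text:
        return re.sub(r"<think>.*?</think>", "", stripped_text, flags=re.DOTALL)
    return stripped_text


def response_to_text(response):
    """Return the content of the last message, or an empty string."""
    messages = response.get("messages", [])
    return messages[-1].content if messages else ""


# Hypothetical response shaped like the dictionary the agent returns
fake_response = {
    "messages": [
        SimpleNamespace(content="Assess the latest release."),
        SimpleNamespace(content="<think>Check the diff first.</think>Low risk: docs only."),
    ]
}

report = remove_thinking(response_to_text(fake_response))
print(report)
```

In the agent itself, the same two calls are chained on the real response: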

    print(remove_thinking(response_to_text(response)))

The final step is to call the main function asynchronously:

asyncio.run(main())

Running the agent

Run the agent with the command:

python3 main.py

If all goes well, you should see a report detailing the risk assessment of the changes in the latest releases of each project in the “Octopus Copilot” space.
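If the run fails instead, unset environment variables are a reasonable first thing to rule out, because os.getenv returns None for any variable that isn't defined. The following is a minimal sketch of a preflight check. The find_missing_settings helper is a hypothetical addition, while the variable names are the ones read by the script.

```python
import os

# The environment variables read by the agent script
REQUIRED_SETTINGS = [
    "OCTOPUS_CLI_API_KEY",
    "OCTOPUS_CLI_SERVER",
    "GITHUB_PAT",
    "AZURE_AI_URL",
    "AZURE_AI_APIKEY",
]


def find_missing_settings(environ=None):
    """Return the names of required variables that are unset or empty."""
    environ = os.environ if environ is None else environ
    return [name for name in REQUIRED_SETTINGS if not environ.get(name)]


missing = find_missing_settings()
if missing:
    print("Missing environment variables: " + ", ".join(missing))
else:
    print("All required environment variables are set.")
```

Calling a check like this at the top of main() would let the script stop with a clear message before any MCP server is started.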

The complete application

This is the complete script:

import asyncio
import os
import re

from langchain_mcp_adapters.client import MultiServerMCPClient
from langchain_azure_ai.chat_models import AzureAIChatCompletionsModel
from langgraph.prebuilt import create_react_agent

def remove_line_padding(text):
    """
    Remove leading and trailing whitespace from each line in the text.
    :param text: The text to process.
    :return: The text with leading and trailing whitespace removed from each line.
    """
    return "\n".join(line.strip() for line in text.splitlines() if line.strip())

def remove_thinking(text):
    """
    Remove <think>...</think> tags and their content from the text.
    :param text: The text to process.
    :return: The text with <think>...</think> tags and their content removed.
    """
    stripped_text = text.strip()
    if stripped_text.startswith("<think>") and "</think>" in stripped_text:
        return re.sub(r"<think>.*?</think>", "", stripped_text, flags=re.DOTALL)
    return stripped_text

def response_to_text(response):
    """
    Extract the content from the last message in the response.
    :param response: The response dictionary containing messages.
    :return: The content of the last message, or an empty string if no messages are present.
    """
    messages = response.get("messages", [])
    if not messages:
        return ""
    # Index rather than pop() so the response is not modified as a side effect
    return messages[-1].content

async def main():
    """
    The entrypoint to our AI agent.
    """
    client = MultiServerMCPClient(
        {
            "octopus": {
                "command": "npx",
                "args": [
                    "-y",
                    "@octopusdeploy/mcp-server",
                    "--api-key",
                    os.getenv("OCTOPUS_CLI_API_KEY"),
                    "--server-url",
                    os.getenv("OCTOPUS_CLI_SERVER"),
                ],
                "transport": "stdio",
            },
            "github": {
                "url": "https://api.githubcopilot.com/mcp/",
                "headers": {"Authorization": f"Bearer {os.getenv('GITHUB_PAT')}"},
                "transport": "streamable_http",
            },
        }
    )

    # Use an Azure AI model
    llm = AzureAIChatCompletionsModel(
        endpoint=os.getenv("AZURE_AI_URL"),
        credential=os.getenv("AZURE_AI_APIKEY"),
        model="gpt-5-mini",
    )

    tools = await client.get_tools()

    agent = create_react_agent(llm, tools)

    response = await agent.ainvoke(
        {
            "messages": remove_line_padding(
                """
                In Octopus, get all the projects from the "Octopus Copilot" space.
                In Octopus, for each project, get the latest release.
                In GitHub, for each release, get the git diff from the Git commit.
                Scan the diff and provide a summary-level risk assessment.
                You will be penalized for asking for user input.
                """
            )
        }
    )

    print(remove_thinking(response_to_text(response)))

asyncio.run(main())

Challenges with agentic AI

Describing complex tasks in natural language is a powerful capability, but it can mask some of the challenges with agentic AI.

The ability to correctly execute the instructions depends a great deal on the model used. We used the gpt-5-mini model from Azure AI Foundry in this example. I selected this model after some trial and error with different models that failed to select the correct tools to complete the task. For instance, GPT 4.1 continually attempted to load Octopus project details from GitHub. Other models can produce very different results for exactly the same code and prompt, and it is often unclear why.

The quality of the prompt is also important. LLMs are not universally advanced enough today to correctly interpret all prompts. Prompt engineering is still required to allow AI agents to perform complex tasks.

Next steps

This example provides the ability to generate one-off reports. A more complete solution would run as a long-lived process responding to events such as those generated by Octopus subscriptions to generate reports automatically when new releases are created. You’d also likely post the results to an email MCP server or an MCP server for one of the many chat platforms available today.
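As a rough sketch of that long-lived shape, the example below uses an asyncio queue to stand in for incoming events. Everything here is hypothetical: generate_report is a placeholder for the agent invocation shown earlier, and the event dictionaries are invented for illustration rather than taken from the Octopus subscription payload format.

```python
import asyncio


async def generate_report(event):
    """Placeholder for invoking the agent with a prompt built from the event."""
    return f"Risk report for {event['project']} release {event['version']}"


async def worker(queue, reports):
    """Process release events until a None sentinel is received."""
    while True:
        event = await queue.get()
        if event is None:
            break
        reports.append(await generate_report(event))


async def run_demo():
    queue = asyncio.Queue()
    reports = []
    task = asyncio.create_task(worker(queue, reports))
    # In a real service these events would arrive from a webhook handler
    await queue.put({"project": "Sample Project", "version": "1.0.1"})
    await queue.put({"project": "Sample Project", "version": "1.0.2"})
    await queue.put(None)  # Ask the worker to stop
    await task
    return reports


reports = asyncio.run(run_demo())
print(reports)
```

Decoupling event intake from report generation with a queue means a burst of new releases is processed one at a time rather than starting many agent runs at once.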

Conclusion

It is early days for agentic AI, but the potential is clear. Agentic AI may finally deliver on the promise of low/no-code solutions that allow non-developers to automate complex tasks.

Agentic AI is not without its challenges. You still need a good understanding of the capabilities and limitations of LLMs, and prompt engineering is still required to get the best results.

However, once you strip away all the boilerplate code to interact with multiple MCP servers, it is possible to orchestrate complex tasks with just a few lines of code and a well-crafted prompt.

Learn more about the Octopus MCP server in our documentation.

Happy deployments!