The DevOps Research and Assessment (DORA) annual report captures input from thousands of professionals worldwide, and ranks the performance of software development teams using four key metrics:

- Deployment Frequency - How often an organization successfully releases to production.

- Lead Time for Changes - The amount of time it takes a commit to get into production.

- Change Failure Rate - The percentage of deployments causing a failure in production.

- Time to Restore Service - How long it takes an organization to recover from a failure in production.

A challenge for teams is then how to calculate these metrics. Fortunately, Octopus captures most of the raw information required to generate these metrics.

In this post, you learn how to query the Octopus API to produce a DORA scorecard with a custom runbook.

Getting started

This post uses GitHub Actions as a CI server. GitHub Actions is free for public Git repositories, so you only need a GitHub account to get started.

The sample runbook script is written against Python 3, which can be downloaded from the Python website.

The example runbook source code can be found on GitHub. Tweaks and updates to the script can be found on the GitHub repo, so be sure to check for the latest version.

Producing build information

Build information provides additional metadata for packages referenced in an Octopus deployment or runbook. Build information packages are separate artifacts stored on the Octopus server with the same package ID and version as the package they represent. This allows Octopus to track metadata for all kinds of packages, whether stored in the built-in feed or hosted on external repositories.

Build information captures information such as the commits that are included in the compiled artifact and lists of work items (known as issues in GitHub) that are closed.

The xo-energy/action-octopus-build-information action provides the ability to create and upload a build information package. The step below shows an example of the action:

- name: Generate Octopus Deploy build information
  uses: xo-energy/action-octopus-build-information@v1.1.2
  with:
    octopus_api_key: ${{ inputs.octopus_api_token }}
    octopus_project: Products Service
    octopus_server: ${{ inputs.octopus_server_url }}
    push_version: 0.1.${{ inputs.run_number }}${{ env.BRANCH_NAME != 'master' && format('-{0}', env.BRANCH_NAME) || '' }}
    push_package_ids: com.octopus.octopub:products-service
    push_overwrite_mode: OverwriteExisting
    output_path: octopus
    octopus_space: "Octopub"
    octopus_environment: "Development"

Pushing the build information package is all that Octopus needs to link the metadata to a release. The build information is linked to the release when the build information package ID and version match a package used in an Octopus step.

With commits and work items now associated with each Octopus release, the next task is determining how this information can be used to measure the four key metrics.
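As a rough illustration of the data the script later in this post relies on, the sketch below models a release as a Python dictionary and collects the commit and work item links from it. The BuildInformation, Commits, WorkItems, and LinkUrl fields are the ones the script reads. The sample values (including the release ID and the issue URL) and the collect_links helper are hypothetical, and a real release resource contains many more fields:

```python
# Hypothetical, trimmed-down release resource. Only the fields read by the
# script in this post are shown; the values are made up for illustration.
sample_release = {
    "Id": "Releases-1",
    "BuildInformation": [
        {
            "Commits": [
                {"LinkUrl": "https://github.com/OctopusSamples/OctoPub/commit/"
                            "dcaf638037503021de696d13b4c5c41ba6952e9f"}
            ],
            "WorkItems": [
                {"LinkUrl": "https://github.com/OctopusSamples/OctoPub/issues/1"}
            ],
        }
    ],
}


def collect_links(release):
    """Return the commit links and work item links attached to a release."""
    commits = [commit["LinkUrl"]
               for build_info in release["BuildInformation"]
               for commit in build_info["Commits"]]
    work_items = [work_item["LinkUrl"]
                  for build_info in release["BuildInformation"]
                  for work_item in build_info["WorkItems"]]
    return commits, work_items


commit_links, work_item_links = collect_links(sample_release)
print(len(commit_links), "commit(s),", len(work_item_links), "work item(s)")
```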

Interpreting the DORA metrics

DORA metrics are high level and don’t define specific rules for how they’re measured. This makes sense, because every team and toolchain has slightly different interpretations of what a deployment is, or what a production failure is.

So to calculate the metrics, you must first decide exactly how to measure them with the data you have available.

For the purpose of this post, the DORA metrics are calculated as follows:

- Deployment Frequency - The frequency of deployments to the production environment.

- Lead Time for Changes - The time between the earliest commit associated with a release and the deployment to the production environment.

- Change Failure Rate - The percentage of deployments to the production environment that resolved an issue.

- Time to Restore Service - The time between an issue being opened and closed.

You’ll note that some of these measurements have been simplified for convenience.

For example, the change failure rate metric technically tracks deployments that caused an issue, not deployments that resolved an issue, as we define it here. However, the data exposed by build information packages makes it easy to track the resolved issues in a given release, and we assume the rate at which deployments resolve issues is a good proxy for the rate at which deployments introduce them.

Also, the time to restore service metric assumes all issues represent bugs or regressions deployed to production. In reality, issues tend to track a wide range of changes from bugs to enhancements. The solution presented here doesn’t make this distinction though.

It’s certainly possible to track which deployments resulted in production issues, so long as you create the required custom fields in your issue tracking platform and you’re diligent about populating them. It’s also possible to distinguish between issues that document bugs and those that represent enhancements. However, this level of tracking won’t be discussed in this post.
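To make these definitions concrete, here is a minimal sketch that applies them to two made-up production deployments. The data, the field names, and the helper functions are all hypothetical and exist only for this example. Deployment frequency is expressed as the average number of seconds per deployment, measured from the earliest deployment to a fixed point in time:

```python
from datetime import datetime, timezone


def utc(day, hour=12):
    """Build a timezone-aware timestamp in January 2022 (sample data only)."""
    return datetime(2022, 1, day, hour, tzinfo=timezone.utc)


# Hypothetical production deployments, each with the earliest commit it shipped
# and the creation dates of the issues it resolved.
deployments = [
    {"deployed": utc(10), "earliest_commit": utc(9), "issues_opened": [utc(8)]},
    {"deployed": utc(20), "earliest_commit": utc(19, 0), "issues_opened": []},
]


def average(values):
    return sum(values) / len(values) if values else None


def lead_time_for_changes(items):
    # Seconds between the earliest commit and the production deployment
    return average([(d["deployed"] - d["earliest_commit"]).total_seconds() for d in items])


def change_failure_rate(items):
    # Proxy: the share of deployments that resolved at least one issue
    with_issues = sum(1 for d in items if d["issues_opened"])
    return with_issues / len(items) if items else None


def time_to_restore_service(items):
    # Seconds between an issue being opened and the deployment that resolved it
    return average([(d["deployed"] - opened).total_seconds()
                    for d in items for opened in d["issues_opened"]])


def deployment_frequency(items, as_of):
    # Average seconds per deployment between the earliest deployment and as_of
    if not items:
        return None
    earliest = min(d["deployed"] for d in items)
    return (as_of - earliest).total_seconds() / len(items)


hour = 60 * 60
print(lead_time_for_changes(deployments) / hour)                # 30.0 hours
print(change_failure_rate(deployments))                         # 0.5
print(time_to_restore_service(deployments) / hour)              # 48.0 hours
print(deployment_frequency(deployments, utc(30)) / hour / 24)   # 10.0 days
```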

Calculating the metrics

The complete script you use to generate DORA metrics is shown below:

import sys
from datetime import datetime
from functools import cmp_to_key
from requests.auth import HTTPBasicAuth
from requests import get
import argparse
import pytz

parser = argparse.ArgumentParser(description='Calculate the DORA metrics.')
parser.add_argument('--octopusUrl', dest='octopus_url', action='store', help='The Octopus server URL',
                    required=True)
parser.add_argument('--octopusApiKey', dest='octopus_api_key', action='store', help='The Octopus API key',
                    required=True)
parser.add_argument('--githubUser', dest='github_user', action='store', help='The GitHub username',
                    required=True)
parser.add_argument('--githubToken', dest='github_token', action='store', help='The GitHub token/password',
                    required=True)
parser.add_argument('--octopusSpace', dest='octopus_space', action='store', help='The Octopus space',
                    required=True)
parser.add_argument('--octopusProject', dest='octopus_project', action='store',
                    help='A comma separated list of Octopus projects', required=True)
parser.add_argument('--octopusEnvironment', dest='octopus_environment', action='store', help='The Octopus environment',
                    required=True)

args = parser.parse_args()

headers = {"X-Octopus-ApiKey": args.octopus_api_key}
github_auth = HTTPBasicAuth(args.github_user, args.github_token)

def parse_github_date(date_string):
    if date_string is None:
        return None
    return datetime.strptime(date_string.replace("Z", "+0000"), '%Y-%m-%dT%H:%M:%S%z')


def parse_octopus_date(date_string):
    if date_string is None:
        return None
    return datetime.strptime(date_string[:-3] + date_string[-2:], '%Y-%m-%dT%H:%M:%S.%f%z')


def compare_dates(date1, date2):
    date1_parsed = parse_octopus_date(date1["Created"])
    date2_parsed = parse_octopus_date(date2["Created"])
    if date1_parsed < date2_parsed:
        return -1
    if date1_parsed == date2_parsed:
        return 0
    return 1

def get_space_id(space_name):
    url = args.octopus_url + "/api/spaces?partialName=" + space_name.strip() + "&take=1000"
    response = get(url, headers=headers)
    spaces_json = response.json()
    filtered_items = [a for a in spaces_json["Items"] if a["Name"] == space_name.strip()]
    if len(filtered_items) == 0:
        sys.stderr.write("The space called " + space_name + " could not be found.\n")
        return None
    first_id = filtered_items[0]["Id"]
    return first_id


def get_resource_id(space_id, resource_type, resource_name):
    if space_id is None:
        return None
    url = args.octopus_url + "/api/" + space_id + "/" + resource_type + "?partialName=" \
        + resource_name.strip() + "&take=1000"
    response = get(url, headers=headers)
    json = response.json()
    filtered_items = [a for a in json["Items"] if a["Name"] == resource_name.strip()]
    if len(filtered_items) == 0:
        sys.stderr.write("The resource called " + resource_name + " could not be found in space " + space_id + ".\n")
        return None
    first_id = filtered_items[0]["Id"]
    return first_id


def get_resource(space_id, resource_type, resource_id):
    if space_id is None:
        return None
    url = args.octopus_url + "/api/" + space_id + "/" + resource_type + "/" + resource_id
    response = get(url, headers=headers)
    json = response.json()
    return json

def get_deployments(space_id, environment_id, project_id):
    # Return an empty list rather than None so callers can always iterate over the result
    if space_id is None or environment_id is None or project_id is None:
        return []
    url = args.octopus_url + "/api/" + space_id + "/deployments?environments=" + environment_id + "&take=1000"
    response = get(url, headers=headers)
    json = response.json()
    filtered_items = [a for a in json["Items"] if a["ProjectId"] == project_id]
    if len(filtered_items) == 0:
        sys.stderr.write("The project id " + project_id + " did not have a deployment in " + space_id + ".\n")
        return []
    sorted_list = sorted(filtered_items, key=cmp_to_key(compare_dates), reverse=True)
    return sorted_list

def get_change_lead_time():
    change_lead_times = []
    space_id = get_space_id(args.octopus_space)
    environment_id = get_resource_id(space_id, "environments", args.octopus_environment)
    for project in args.octopus_project.split(","):
        project_id = get_resource_id(space_id, "projects", project)
        deployments = get_deployments(space_id, environment_id, project_id)
        for deployment in deployments:
            earliest_commit = None
            release = get_resource(space_id, "releases", deployment["ReleaseId"])
            for buildInfo in release["BuildInformation"]:
                for commit in buildInfo["Commits"]:
                    # Only rewrite the "/commit/" path segment, so an owner or repository
                    # name that contains the word "commit" is left untouched
                    api_url = commit["LinkUrl"].replace("github.com", "api.github.com/repos") \
                        .replace("/commit/", "/commits/")
                    commit_response = get(api_url, auth=github_auth)
                    date_parsed = parse_github_date(commit_response.json()["commit"]["committer"]["date"])
                    if earliest_commit is None or earliest_commit > date_parsed:
                        earliest_commit = date_parsed
            if earliest_commit is not None:
                change_lead_times.append((parse_octopus_date(deployment["Created"]) - earliest_commit).total_seconds())
    if len(change_lead_times) != 0:
        return sum(change_lead_times) / len(change_lead_times)
    return None

def get_time_to_restore_service():
    restore_service_times = []
    space_id = get_space_id(args.octopus_space)
    environment_id = get_resource_id(space_id, "environments", args.octopus_environment)
    for project in args.octopus_project.split(","):
        project_id = get_resource_id(space_id, "projects", project)
        deployments = get_deployments(space_id, environment_id, project_id)
        for deployment in deployments:
            deployment_date = parse_octopus_date(deployment["Created"])
            release = get_resource(space_id, "releases", deployment["ReleaseId"])
            for buildInfo in release["BuildInformation"]:
                for work_item in buildInfo["WorkItems"]:
                    api_url = work_item["LinkUrl"].replace("github.com", "api.github.com/repos")
                    commit_response = get(api_url, auth=github_auth)
                    created_date = parse_github_date(commit_response.json()["created_at"])
                    if created_date is not None:
                        restore_service_times.append((deployment_date - created_date).total_seconds())
    if len(restore_service_times) != 0:
        return sum(restore_service_times) / len(restore_service_times)
    return None

def get_deployment_frequency():
    deployment_count = 0
    earliest_deployment = None
    latest_deployment = None
    space_id = get_space_id(args.octopus_space)
    environment_id = get_resource_id(space_id, "environments", args.octopus_environment)
    for project in args.octopus_project.split(","):
        project_id = get_resource_id(space_id, "projects", project)
        deployments = get_deployments(space_id, environment_id, project_id)
        deployment_count = deployment_count + len(deployments)
        for deployment in deployments:
            created = parse_octopus_date(deployment["Created"])
            if earliest_deployment is None or earliest_deployment > created:
                earliest_deployment = created
            if latest_deployment is None or latest_deployment < created:
                latest_deployment = created
    if latest_deployment is not None:
        # return average seconds / deployment from the earliest deployment to now
        return (datetime.now(pytz.utc) - earliest_deployment).total_seconds() / deployment_count
        # You could also return the frequency between the first and last deployment
        # return (latest_deployment - earliest_deployment).total_seconds() / deployment_count
    return None

def get_change_failure_rate():
    releases_with_issues = 0
    deployment_count = 0
    space_id = get_space_id(args.octopus_space)
    environment_id = get_resource_id(space_id, "environments", args.octopus_environment)
    for project in args.octopus_project.split(","):
        project_id = get_resource_id(space_id, "projects", project)
        deployments = get_deployments(space_id, environment_id, project_id)
        deployment_count = deployment_count + len(deployments)
        for deployment in deployments:
            release = get_resource(space_id, "releases", deployment["ReleaseId"])
            for buildInfo in release["BuildInformation"]:
                if len(buildInfo["WorkItems"]) != 0:
                    # Note this measurement is not quite correct. Technically, the change failure rate
                    # measures deployments that result in a degraded service. We're measuring
                    # deployments that included fixes. If you made 4 deployments with issues,
                    # and fixed all 4 with a single subsequent deployment, this logic only detects one
                    # "failed" deployment instead of 4.
                    #
                    # To do a true measurement, issues must track the deployments that introduced the issue.
                    # There is no such out of the box field in GitHub issues though, so for simplicity
                    # we assume the rate at which fixes are deployed is a good proxy for measuring the
                    # rate at which bugs are introduced.
                    releases_with_issues = releases_with_issues + 1
    if releases_with_issues != 0 and deployment_count != 0:
        return releases_with_issues / deployment_count
    return None

def get_change_lead_time_summary(lead_time):
    if lead_time is None:
        print("Change lead time: N/A (no deployments or commits)")
    # One hour
    elif lead_time < 60 * 60:
        print("Change lead time: Elite (Average " + str(round(lead_time / 60 / 60, 2))
              + " hours between commit and deploy)")
    # Every week
    elif lead_time < 60 * 60 * 24 * 7:
        print("Change lead time: High (Average " + str(round(lead_time / 60 / 60 / 24, 2))
              + " days between commit and deploy)")
    # Every six months
    elif lead_time < 60 * 60 * 24 * 31 * 6:
        print("Change lead time: Medium (Average " + str(round(lead_time / 60 / 60 / 24 / 31, 2))
              + " months between commit and deploy)")
    # Longer than six months
    else:
        print("Change lead time: Low (Average " + str(round(lead_time / 60 / 60 / 24 / 31, 2))
              + " months between commit and deploy)")

def get_deployment_frequency_summary(deployment_frequency):
    if deployment_frequency is None:
        print("Deployment frequency: N/A (no deployments found)")
    # Multiple times per day
    elif deployment_frequency < 60 * 60 * 12:
        print("Deployment frequency: Elite (Average " + str(round(deployment_frequency / 60 / 60, 2))
              + " hours between deployments)")
    # Every month
    elif deployment_frequency < 60 * 60 * 24 * 31:
        print("Deployment frequency: High (Average " + str(round(deployment_frequency / 60 / 60 / 24, 2))
              + " days between deployments)")
    # Every six months
    elif deployment_frequency < 60 * 60 * 24 * 31 * 6:
        print("Deployment frequency: Medium (Average " + str(round(deployment_frequency / 60 / 60 / 24 / 31, 2))
              + " months between deployments)")
    # Longer than six months
    else:
        print("Deployment frequency: Low (Average " + str(round(deployment_frequency / 60 / 60 / 24 / 31, 2))
              + " months between deployments)")

def get_change_failure_rate_summary(failure_percent):
    if failure_percent is None:
        print("Change failure rate: N/A (no issues or deployments found)")
    # 15% or less
    elif failure_percent <= 0.15:
        print("Change failure rate: Elite (" + str(round(failure_percent * 100, 0)) + "%)")
    # Interestingly, everything else is reported as High to Low
    else:
        print("Change failure rate: Low (" + str(round(failure_percent * 100, 0)) + "%)")

def get_time_to_restore_service_summary(restore_time):
    if restore_time is None:
        print("Time to restore service: N/A (no issues or deployments found)")
    # One hour
    elif restore_time < 60 * 60:
        print("Time to restore service: Elite (Average " + str(round(restore_time / 60 / 60, 2))
              + " hours between issue opened and deployment)")
    # One day
    elif restore_time < 60 * 60 * 24:
        print("Time to restore service: High (Average " + str(round(restore_time / 60 / 60, 2))
              + " hours between issue opened and deployment)")
    # One week
    elif restore_time < 60 * 60 * 24 * 7:
        print("Time to restore service: Medium (Average " + str(round(restore_time / 60 / 60 / 24, 2))
              + " days between issue opened and deployment)")
    # Technically the report says longer than six months is low, but there is no measurement
    # between week and six months, so we'll say longer than a week is low.
    else:
        print("Time to restore service: Low (Average " + str(round(restore_time / 60 / 60 / 24, 2))
              + " days between issue opened and deployment)")

print("DORA stats for project(s) " + args.octopus_project + " in " + args.octopus_environment)
get_change_lead_time_summary(get_change_lead_time())
get_deployment_frequency_summary(get_deployment_frequency())
get_change_failure_rate_summary(get_change_failure_rate())
get_time_to_restore_service_summary(get_time_to_restore_service())

Let’s break this code down to understand what it’s doing.

Processing arguments

Your script accepts parameters from command-line arguments to make it reusable across multiple Octopus instances and spaces. The arguments are parsed by the argparse module. You can find more information about using argparse in Real Python’s post on the topic:

parser = argparse.ArgumentParser(description='Calculate the DORA metrics.')
parser.add_argument('--octopusUrl', dest='octopus_url', action='store', help='The Octopus server URL',
                    required=True)
parser.add_argument('--octopusApiKey', dest='octopus_api_key', action='store', help='The Octopus API key',
                    required=True)
parser.add_argument('--githubUser', dest='github_user', action='store', help='The GitHub username',
                    required=True)
parser.add_argument('--githubToken', dest='github_token', action='store', help='The GitHub token/password',
                    required=True)
parser.add_argument('--octopusSpace', dest='octopus_space', action='store', help='The Octopus space',
                    required=True)
parser.add_argument('--octopusProject', dest='octopus_project', action='store',
                    help='A comma separated list of Octopus projects', required=True)
parser.add_argument('--octopusEnvironment', dest='octopus_environment', action='store', help='The Octopus environment',
                    required=True)

args = parser.parse_args()

API authentication

The script makes many requests to the Octopus and GitHub APIs, and all of the requests require authentication.

The Octopus API uses the X-Octopus-ApiKey header to pass the API key used to authenticate requests. You can find more information on how to create an API key in the Octopus documentation.

The GitHub API uses standard HTTP basic authentication with a personal access token for the password. The GitHub documentation provides details on creating tokens.
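For reference, the sketch below shows roughly what the two authentication schemes amount to at the HTTP level. The basic_auth_header helper and the credential values are placeholders invented for this example; the script itself leaves the encoding work to the requests library:

```python
import base64


def basic_auth_header(username, token):
    """Build the Authorization header value used by HTTP basic authentication."""
    credentials = f"{username}:{token}".encode("utf-8")
    return "Basic " + base64.b64encode(credentials).decode("ascii")


# Placeholder credentials for illustration only. Never hard-code real secrets.
octopus_headers = {"X-Octopus-ApiKey": "API-EXAMPLEKEY"}
github_headers = {"Authorization": basic_auth_header("example-user", "example-token")}

print(sorted(octopus_headers))
print(github_headers["Authorization"].split(" ")[0])
```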

The code below captures the objects containing the credentials passed with each API request throughout the rest of the script:

headers = {"X-Octopus-ApiKey": args.octopus_api_key}
github_auth = HTTPBasicAuth(args.github_user, args.github_token)

Date processing

The GitHub API returns dates in a specific format. The parse_github_date function takes these dates and converts them into Python datetime objects:

def parse_github_date(date_string):
    if date_string is None:
        return None
    return datetime.strptime(date_string.replace("Z", "+0000"), '%Y-%m-%dT%H:%M:%S%z')

The Octopus API returns dates in its own specific format. The parse_octopus_date function converts Octopus dates into Python datetime objects.

The Octopus API returns dates in the ISO 8601 format, which looks like 2022-01-04T04:23:02.941+00:00. Unfortunately, strptime in Python 3.6 and earlier does not support timezone offsets that include colons, so you need to strip the colon out before parsing and comparing the dates:

def parse_octopus_date(date_string):
    if date_string is None:
        return None
    return datetime.strptime(date_string[:-3] + date_string[-2:], '%Y-%m-%dT%H:%M:%S.%f%z')

The compare_dates function takes two Octopus resources, parses their Created dates as datetime objects, and returns a value of 1, 0, or -1 indicating how date1 compares to date2:

def compare_dates(date1, date2):
    date1_parsed = parse_octopus_date(date1["Created"])
    date2_parsed = parse_octopus_date(date2["Created"])
    if date1_parsed < date2_parsed:
        return -1
    if date1_parsed == date2_parsed:
        return 0
    return 1

Querying Octopus resources

A common pattern used in this script (and most scripts working with the Octopus API) is to look up the ID of a named resource. The get_space_id function takes the name of an Octopus space and queries the API to return the space ID:

def get_space_id(space_name):

The /api/spaces endpoint returns a list of the spaces defined in the Octopus server. The partialName query parameter limits the result to spaces whose name includes the supplied value, while the take parameter is set to a large number so you don’t need to loop over any paged results:

    url = args.octopus_url + "/api/spaces?partialName=" + space_name.strip() + "&take=1000"

A GET HTTP request is made against the endpoint, including the Octopus authentication headers, and the JSON result is parsed into Python nested dictionaries:

    response = get(url, headers=headers)
    spaces_json = response.json()

The returned results could match any space whose name is, or includes, the supplied space name. This means that the spaces called MySpace and MySpaceTwo would be returned if we searched for the space called MySpace.
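The sketch below illustrates the pitfall with a made-up response. The IDs, the ordering of the items, and the find_exact helper are assumptions for this example only; they show why taking the first returned item is not safe and why an exact name comparison is needed:

```python
# Hypothetical, trimmed response for a search on partialName=MySpace.
# The order of the items is an assumption made for this example.
spaces_json = {
    "Items": [
        {"Id": "Spaces-2", "Name": "MySpaceTwo"},
        {"Id": "Spaces-1", "Name": "MySpace"},
    ]
}


def find_exact(items, name):
    """Return the ID of the item whose name matches exactly, or None."""
    wanted = name.strip()
    return next((item["Id"] for item in items if item["Name"] == wanted), None)


# Blindly taking the first item picks the wrong space in this sample.
first_returned = spaces_json["Items"][0]["Id"]
exact_match = find_exact(spaces_json["Items"], "MySpace")

print(first_returned)  # Spaces-2
print(exact_match)     # Spaces-1
```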

To ensure you return the ID of the space with the correct name, a list comprehension filters the returned spaces to just those that exactly match the supplied space name:

    filtered_items = [a for a in spaces_json["Items"] if a["Name"] == space_name.strip()]

The function will return None if none of the spaces match the supplied space name:

    if len(filtered_items) == 0:
        sys.stderr.write("The space called " + space_name + " could not be found.\n")
        return None

If there is a matching space, return the ID:

    first_id = filtered_items[0]["Id"]
    return first_id

Spaces are top-level resources in Octopus, while all other resources you interact with in this script are children of a space. Just as you did with the get_space_id function, the get_resource_id function converts a named Octopus resource to its ID. The only difference here is the endpoint being requested includes the space ID in the path, and the resource type is supplied to build the second element in the path. Otherwise get_resource_id follows the same pattern described for the get_space_id function:

def get_resource_id(space_id, resource_type, resource_name):
    if space_id is None:
        return None
    url = args.octopus_url + "/api/" + space_id + "/" + resource_type + "?partialName=" \
        + resource_name.strip() + "&take=1000"
    response = get(url, headers=headers)
    json = response.json()
    filtered_items = [a for a in json["Items"] if a["Name"] == resource_name.strip()]
    if len(filtered_items) == 0:
        sys.stderr.write("The resource called " + resource_name + " could not be found in space " + space_id + ".\n")
        return None
    first_id = filtered_items[0]["Id"]
    return first_id

You need access to the complete Octopus release resource to examine the build information metadata. The get_resource function uses the resource IDs returned by the functions above to return a complete resource definition from the Octopus API:

def get_resource(space_id, resource_type, resource_id):
    if space_id is None:
        return None
    url = args.octopus_url + "/api/" + space_id + "/" + resource_type + "/" + resource_id
    response = get(url, headers=headers)
    json = response.json()
    return json

The get_deployments function returns the list of deployments performed to a given environment for a given project. The list of deployments is sorted to ensure the latest deployment is the first item in the list:

def get_deployments(space_id, environment_id, project_id):
    # Return an empty list rather than None so callers can always iterate over the result
    if space_id is None or environment_id is None or project_id is None:
        return []
    url = args.octopus_url + "/api/" + space_id + "/deployments?environments=" + environment_id + "&take=1000"
    response = get(url, headers=headers)
    json = response.json()
    filtered_items = [a for a in json["Items"] if a["ProjectId"] == project_id]
    if len(filtered_items) == 0:
        sys.stderr.write("The project id " + project_id + " did not have a deployment in " + space_id + ".\n")
        return []
    sorted_list = sorted(filtered_items, key=cmp_to_key(compare_dates), reverse=True)
    return sorted_list

Calculating lead time for changes

You now have the code in place to query the Octopus API for deployments and releases, and use the information to calculate the DORA metrics.

The first metric to tackle is lead time for changes, which is calculated in the get_change_lead_time function:

def get_change_lead_time():

Calculate the lead time for each deployment and capture the values in an array. This lets you calculate the average lead time:

    change_lead_times = []

The space and environment names are converted to IDs:

    space_id = get_space_id(args.octopus_space)
    environment_id = get_resource_id(space_id, "environments", args.octopus_environment)

The projects argument is a comma separated list of project names. So you must loop over each individual project, and convert the project name to an ID:

    for project in args.octopus_project.split(","):
        project_id = get_resource_id(space_id, "projects", project)

Collect the list of deployments to the project for the space and environment:

        deployments = get_deployments(space_id, environment_id, project_id)

This is where you calculate the lead time for each deployment:

        for deployment in deployments:

The lead time for changes metric is concerned with the time it takes for a commit to be deployed to production. You must find the earliest commit associated with the deployment:

            earliest_commit = None

A deployment represents the execution of a release in an environment. It’s the release that contains the build information metadata, which in turn contains the details of the commits associated with any packages included in the release. You must get the release resource from the release ID held by the deployment:

            release = get_resource(space_id, "releases", deployment["ReleaseId"])

Releases contain an array with zero or more build information objects. You must loop over this array to return the details of the commits:

            for buildInfo in release["BuildInformation"]:

Each build information object contains zero or more commits. You must loop over this array looking for the earliest commit:

                for commit in buildInfo["Commits"]:

When working with GitHub commits, the URL associated with each commit links back to a page you can open with a web browser. These links look like: https://github.com/OctopusSamples/OctoPub/commit/dcaf638037503021de696d13b4c5c41ba6952e9f.

GitHub maintains a parallel set of URLs that expose the API used to query GitHub resources. The URLs used by the API are usually similar to the publicly browsable URLs. In this case the API URL looks like: https://api.github.com/repos/OctopusSamples/OctoPub/commits/dcaf638037503021de696d13b4c5c41ba6952e9f. So you convert the browsable link to the API link:

                    # Only rewrite the "/commit/" path segment, so an owner or repository
                    # name that contains the word "commit" is left untouched
                    api_url = commit["LinkUrl"].replace("github.com", "api.github.com/repos") \
                        .replace("/commit/", "/commits/")

You then query the details of the commit:

                    commit_response = get(api_url, auth=github_auth)

You need to return the commit date as a Python datetime object:

                    date_parsed = parse_github_date(commit_response.json()["commit"]["committer"]["date"])

The date of the earliest commit is then saved:

                    if earliest_commit is None or earliest_commit > date_parsed:
                        earliest_commit = date_parsed

Assuming the code above found a commit date, the difference between the deployment date and the commit date is calculated:

            if earliest_commit is not None:
                change_lead_times.append((parse_octopus_date(deployment["Created"]) - earliest_commit).total_seconds())

Assuming any commits are found in the build information metadata, the average time between the earliest commit and the deployment date is calculated and returned:

    if len(change_lead_times) != 0:
        return sum(change_lead_times) / len(change_lead_times)

If no commits are found, or the release has no associated build information, None is returned:

    return None

Calculating time to restore service

The next metric to calculate is time to restore service.

As noted in the introduction, this metric is assumed to be measured by the time it takes to deploy a release with a resolved issue. The get_time_to_restore_service function is used to calculate this value:

def get_time_to_restore_service():

Again, you maintain an array of values to allow you to calculate an average:

    restore_service_times = []

The space and environment names are converted to their IDs:

    space_id = get_space_id(args.octopus_space)
    environment_id = get_resource_id(space_id, "environments", args.octopus_environment)

Projects are supplied as a comma separated list, which you loop over:

    for project in args.octopus_project.split(","):

The project name is converted to an ID, and the list of deployments to the project for the environment is returned:

        project_id = get_resource_id(space_id, "projects", project)
        deployments = get_deployments(space_id, environment_id, project_id)

Return the release associated with each deployment, loop over all the build information objects, and then loop over all the work items (called issues in GitHub) associated with each package in the release:

        for deployment in deployments:
            deployment_date = parse_octopus_date(deployment["Created"])
            release = get_resource(space_id, "releases", deployment["ReleaseId"])
            for buildInfo in release["BuildInformation"]:
                for work_item in buildInfo["WorkItems"]:

The URL to the browsable issues is converted to an API URL, the issue is queried via the API, and the creation date is returned:

                    api_url = work_item["LinkUrl"].replace("github.com", "api.github.com/repos")
                    commit_response = get(api_url, auth=github_auth)
                    created_date = parse_github_date(commit_response.json()["created_at"])

The time between the creation of the issue and the deployment is calculated:

                    if created_date is not None:
                        restore_service_times.append((deployment_date - created_date).total_seconds())

If any issues are found, the average time between the creation of the issue and the deployment is calculated:

    if len(restore_service_times) != 0:
        return sum(restore_service_times) / len(restore_service_times)

If no issues are found, None is returned:

    return None

Calculating deployment frequency

Deployment frequency is the easiest metric to calculate as it’s simply the average time between production deployments. This is calculated by the get_deployment_frequency function:

def get_deployment_frequency():

Deployment frequency is calculated as the duration over which deployments have been performed divided by the number of deployments:

    deployment_count = 0
    earliest_deployment = None
    latest_deployment = None

The space, environment, and project names are converted to IDs:

    space_id = get_space_id(args.octopus_space)
    environment_id = get_resource_id(space_id, "environments", args.octopus_environment)
    for project in args.octopus_project.split(","):
        project_id = get_resource_id(space_id, "projects", project)

The deployments performed to an environment are returned:

        deployments = get_deployments(space_id, environment_id, project_id)

The number of deployments is counted:

        deployment_count = deployment_count + len(deployments)

The earliest and latest deployments are found:

        for deployment in deployments:
            created = parse_octopus_date(deployment["Created"])
            if earliest_deployment is None or earliest_deployment > created:
                earliest_deployment = created
            if latest_deployment is None or latest_deployment < created:
                latest_deployment = created

Assuming any deployments are found, the duration between deployments is divided by the number of deployments.

Note: you can measure this value a few different ways. The uncommented code measures the average time between deployments from the earliest deployment until the current point in time.

An alternative is to measure the average time between the earliest and the latest deployment:

    if latest_deployment is not None:
        # return average seconds / deployment from the earliest deployment to now
        return (datetime.now(pytz.utc) - earliest_deployment).total_seconds() / deployment_count
        # You could also return the frequency between the first and last deployment
        # return (latest_deployment - earliest_deployment).total_seconds() / deployment_count
    return None

Calculating change failure rate

The final metric is change failure rate.

As noted in the introduction, this code measures the number of deployments that fix issues rather than the number of deployments that introduce issues. The former measurement is trivial to calculate with the information captured by build information, while the latter requires far more metadata to be exposed by issues.

Despite the technical differences, you can assume that measuring deployments that fix issues is a good proxy for measuring deployments that introduce issues. Reducing production issues improves both scores. “Bad” deployments are underrepresented by this logic when a single release fixes many issues, but they are overrepresented when multiple deployments are required to fix the issues from a single previous deployment.
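To make the trade-off concrete, here is a minimal sketch using made-up deployment records. The `introduced_issue` and `fixes_issues` fields are hypothetical and exist only for this illustration; the sample script only sees the work items recorded in build information.

```python
# Hypothetical history: four deployments each introduce a bug,
# and a single later deployment fixes all four.
deployments = [
    {"version": "1.0.0", "introduced_issue": True, "fixes_issues": 0},
    {"version": "1.0.1", "introduced_issue": True, "fixes_issues": 0},
    {"version": "1.0.2", "introduced_issue": True, "fixes_issues": 0},
    {"version": "1.0.3", "introduced_issue": True, "fixes_issues": 0},
    {"version": "1.0.4", "introduced_issue": False, "fixes_issues": 4},
]


def true_failure_rate(history):
    """Share of deployments that introduced an issue."""
    return sum(1 for d in history if d["introduced_issue"]) / len(history)


def proxy_failure_rate(history):
    """Share of deployments that shipped at least one fix."""
    return sum(1 for d in history if d["fixes_issues"] > 0) / len(history)


print(true_failure_rate(deployments))   # 0.8
print(proxy_failure_rate(deployments))  # 0.2
```

Here the proxy undercounts failures. The opposite happens when one bad deployment needs several follow-up deployments to fix, so over a long enough history the two effects may partly offset each other.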

The get_change_failure_rate function is used to calculate the change failure rate:

def get_change_failure_rate():

The failure rate is measured as the number of deployments with issues compared to the total number of deployments:

    releases_with_issues = 0
    deployment_count = 0

The space, environment, and project names are converted to IDs:

    space_id = get_space_id(args.octopus_space)
    environment_id = get_resource_id(space_id, "environments", args.octopus_environment)
    for project in args.octopus_project.split(","):
        project_id = get_resource_id(space_id, "projects", project)

The deployments performed to an environment are returned, and the total number of deployments is accumulated:

        deployments = get_deployments(space_id, environment_id, project_id)
        deployment_count = deployment_count + len(deployments)

You then need to find any issues associated with deployments, and if any are found, you increment the count of deployments with issues:

        for deployment in deployments:
            release = get_resource(space_id, "releases", deployment["ReleaseId"])
            for buildInfo in release["BuildInformation"]:
                if len(buildInfo["WorkItems"]) != 0:
                    # Note this measurement is not quite correct. Technically, the change failure rate
                    # measures deployments that result in a degraded service. We're measuring
                    # deployments that included fixes. If you made 4 deployments with issues,
                    # and fixed all 4 with a single subsequent deployment, this logic only detects one
                    # "failed" deployment instead of 4.
                    #
                    # To do a true measurement, issues must track the deployments that introduced the issue.
                    # There is no such out of the box field in GitHub issues though, so for simplicity
                    # we assume the rate at which fixes are deployed is a good proxy for measuring the
                    # rate at which bugs are introduced.
                    releases_with_issues = releases_with_issues + 1

If any deployments with issues are found, the ratio of deployments with issues to total number of deployments is returned:

    if releases_with_issues != 0 and deployment_count != 0:
        return releases_with_issues / deployment_count

If no deployments with issues are found, None is returned:

    return None

Measuring deployment performance

The measurement of each metric is broken down into four categories:

- Elite

- High

- Medium

- Low
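One way to picture these categories is as a list of upper bounds checked in order. The sketch below is not part of the sample script: the `classify` helper and the `LEAD_TIME_BANDS` table are hypothetical names, and the bounds mirror the change lead time thresholds used by `get_change_lead_time_summary` (one hour, one week, and six 31-day months).

```python
HOUR = 60 * 60
DAY = HOUR * 24
MONTH = DAY * 31  # the sample script approximates a month as 31 days

# Upper bounds in seconds, checked in order; anything beyond the last bound is Low.
LEAD_TIME_BANDS = [
    (HOUR, "Elite"),
    (DAY * 7, "High"),
    (MONTH * 6, "Medium"),
]


def classify(seconds, bands):
    """Return the first category whose upper bound exceeds the measurement."""
    if seconds is None:
        return "N/A"
    for upper_bound, category in bands:
        if seconds < upper_bound:
            return category
    return "Low"


print(classify(30 * 60, LEAD_TIME_BANDS))    # Elite
print(classify(3 * DAY, LEAD_TIME_BANDS))    # High
print(classify(60 * DAY, LEAD_TIME_BANDS))   # Medium
print(classify(365 * DAY, LEAD_TIME_BANDS))  # Low
```

Keeping the thresholds in a table like this would make it easier to adjust them when a new DORA report changes the category boundaries, though the sample script spells each comparison out explicitly.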

The DORA report describes how each measurement is categorized, and the get_change_lead_time_summary, get_deployment_frequency_summary, get_change_failure_rate_summary, and get_time_to_restore_service_summary functions print the results:

def get_change_lead_time_summary(lead_time):
    if lead_time is None:
        print("Change lead time: N/A (no deployments or commits)")
    # One hour
    elif lead_time < 60 * 60:
        print("Change lead time: Elite (Average " + str(round(lead_time / 60 / 60, 2))
              + " hours between commit and deploy)")
    # Every week
    elif lead_time < 60 * 60 * 24 * 7:
        print("Change lead time: High (Average " + str(round(lead_time / 60 / 60 / 24, 2))
              + " days between commit and deploy)")
    # Every six months
    elif lead_time < 60 * 60 * 24 * 31 * 6:
        print("Change lead time: Medium (Average " + str(round(lead_time / 60 / 60 / 24 / 31, 2))
              + " months between commit and deploy)")
    # Longer than six months
    else:
        print("Change lead time: Low (Average " + str(round(lead_time / 60 / 60 / 24 / 31, 2))
              + " months between commit and deploy)")

def get_deployment_frequency_summary(deployment_frequency):
    if deployment_frequency is None:
        print("Deployment frequency: N/A (no deployments found)")
    # Multiple times per day
    elif deployment_frequency < 60 * 60 * 12:
        print("Deployment frequency: Elite (Average " + str(round(deployment_frequency / 60 / 60, 2))
              + " hours between deployments)")
    # Every month
    elif deployment_frequency < 60 * 60 * 24 * 31:
        print("Deployment frequency: High (Average " + str(round(deployment_frequency / 60 / 60 / 24, 2))
              + " days between deployments)")
    # Every six months
    elif deployment_frequency < 60 * 60 * 24 * 31 * 6:
        print("Deployment frequency: Medium (Average " + str(round(deployment_frequency / 60 / 60 / 24 / 31, 2))
              + " months between deployments)")
    # Longer than six months
    else:
        print("Deployment frequency: Low (Average " + str(round(deployment_frequency / 60 / 60 / 24 / 31, 2))
              + " months between deployments)")

def get_change_failure_rate_summary(failure_percent):
    if failure_percent is None:
        print("Change failure rate: N/A (no issues or deployments found)")
    # 15% or less
    elif failure_percent <= 0.15:
        print("Change failure rate: Elite (" + str(round(failure_percent * 100, 0)) + "%)")
    # Interestingly, everything else is reported as High to Low
    else:
        print("Change failure rate: Low (" + str(round(failure_percent * 100, 0)) + "%)")

def get_time_to_restore_service_summary(restore_time):
    if restore_time is None:
        print("Time to restore service: N/A (no issues or deployments found)")
    # One hour
    elif restore_time < 60 * 60:
        print("Time to restore service: Elite (Average " + str(round(restore_time / 60 / 60, 2))
              + " hours between issue opened and deployment)")
    # One day
    elif restore_time < 60 * 60 * 24:
        print("Time to restore service: High (Average " + str(round(restore_time / 60 / 60, 2))
              + " hours between issue opened and deployment)")
    # One week
    elif restore_time < 60 * 60 * 24 * 7:
        print("Time to restore service: Medium (Average " + str(round(restore_time / 60 / 60 / 24, 2))
              + " days between issue opened and deployment)")
    # Technically the report says longer than six months is low, but there is no measurement
    # between week and six months, so we'll say longer than a week is low.
    else:
        print("Time to restore service: Low (Average " + str(round(restore_time / 60 / 60 / 24, 2))
              + " days between issue opened and deployment)")

The final step is calculating the metrics and passing the results to the functions above:

print("DORA stats for project(s) " + args.octopus_project + " in " + args.octopus_environment)
get_change_lead_time_summary(get_change_lead_time())
get_deployment_frequency_summary(get_deployment_frequency())
get_change_failure_rate_summary(get_change_failure_rate())
get_time_to_restore_service_summary(get_time_to_restore_service())

Running the script in a runbook

The first step is exposing 2 variables that are passed to the script:

- GitHubToken is a secret holding the GitHub personal access token used to authenticate GitHub API calls.

- ReadOnlyApiKey is an Octopus API key assigned to an account with read-only access to the Octopus server (because this script only queries the API, and never modifies any resources).
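These variables reach the Python script as command-line arguments. As a rough sketch of how such arguments could be declared with `argparse`: the flag names match those passed to main.py in this post, and the `octopus_space`, `octopus_environment`, and `octopus_project` attributes are the ones the metric functions read. The remaining `dest` names, the `build_parser` helper, and the placeholder values are assumptions, so the real script may differ.

```python
import argparse


def build_parser():
    """Declare the flags that the runbook passes to the script."""
    parser = argparse.ArgumentParser(description="Calculate DORA metrics from Octopus and GitHub")
    # dest names other than octopus_space, octopus_environment, and
    # octopus_project are assumptions made for this sketch.
    parser.add_argument("--octopusUrl", dest="octopus_url", required=True)
    parser.add_argument("--octopusApiKey", dest="octopus_api_key", required=True)
    parser.add_argument("--githubUser", dest="github_user", required=True)
    parser.add_argument("--githubToken", dest="github_token", required=True)
    parser.add_argument("--octopusSpace", dest="octopus_space", required=True)
    parser.add_argument("--octopusEnvironment", dest="octopus_environment", required=True)
    parser.add_argument("--octopusProject", dest="octopus_project", required=True,
                        help="Comma separated list of project names")
    return parser


# Parse a sample command line rather than sys.argv so the sketch runs on its own.
args = build_parser().parse_args([
    "--octopusUrl", "https://example.octopus.app",
    "--octopusApiKey", "API-PLACEHOLDER",
    "--githubUser", "example-user",
    "--githubToken", "placeholder-token",
    "--octopusSpace", "Default",
    "--octopusEnvironment", "Production",
    "--octopusProject", "Products Service, Audits Service",
])

# Stripping whitespace around each name is a precaution added in this sketch.
projects = [name.strip() for name in args.octopus_project.split(",")]
print(projects)
```

Passing the secrets as arguments keeps the script independent of Octopus, so it can also be run from a local shell with your own values.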

The runbook is a single Run a script step with the following bash script:

cd DoraMetrics
echo "##octopus[stdout-verbose]"
python3 -m venv my_env
. my_env/bin/activate
pip --disable-pip-version-check install -r requirements.txt
echo "##octopus[stdout-default]"
python3 main.py \
    --octopusUrl https://tenpillars.octopus.app \
    --octopusApiKey "#{ReadOnlyApiKey}" \
    --githubUser mcasperson \
    --githubToken "#{GitHubToken}" \
    --octopusSpace "#{Octopus.Space.Name}" \
    --octopusEnvironment "#{Octopus.Environment.Name}" \
    --octopusProject "Products Service, Audits Service, Octopub Frontend"

There are some interesting things going on in this script, so let’s break it down.

You enter the directory where Octopus extracted the package containing the Python script:

cd DoraMetrics

Printing the service message ##octopus[stdout-verbose] instructs Octopus to treat all subsequent log messages as verbose:

echo "##octopus[stdout-verbose]"

A new Python virtual environment called my_env is created and activated, and the script dependencies are installed:

python3 -m venv my_env
. my_env/bin/activate
pip --disable-pip-version-check install -r requirements.txt

The service message ##octopus[stdout-default] is printed, instructing Octopus to treat subsequent log messages at the default level again:

echo "##octopus[stdout-default]"

You then execute the Python script. Some of the arguments, like octopusUrl, githubUser, and octopusProject, need to be customized for your specific use case. Setting the octopusSpace and octopusEnvironment arguments to the space and environment in which the runbook is executed lets you calculate DORA metrics in any environment the runbook is run in:

python3 main.py \
    --octopusUrl https://tenpillars.octopus.app \
    --octopusApiKey "#{ReadOnlyApiKey}" \
    --githubUser mcasperson \
    --githubToken "#{GitHubToken}" \
    --octopusSpace "#{Octopus.Space.Name}" \
    --octopusEnvironment "#{Octopus.Environment.Name}" \
    --octopusProject "Products Service, Audits Service, Octopub Frontend"

Executing the runbook

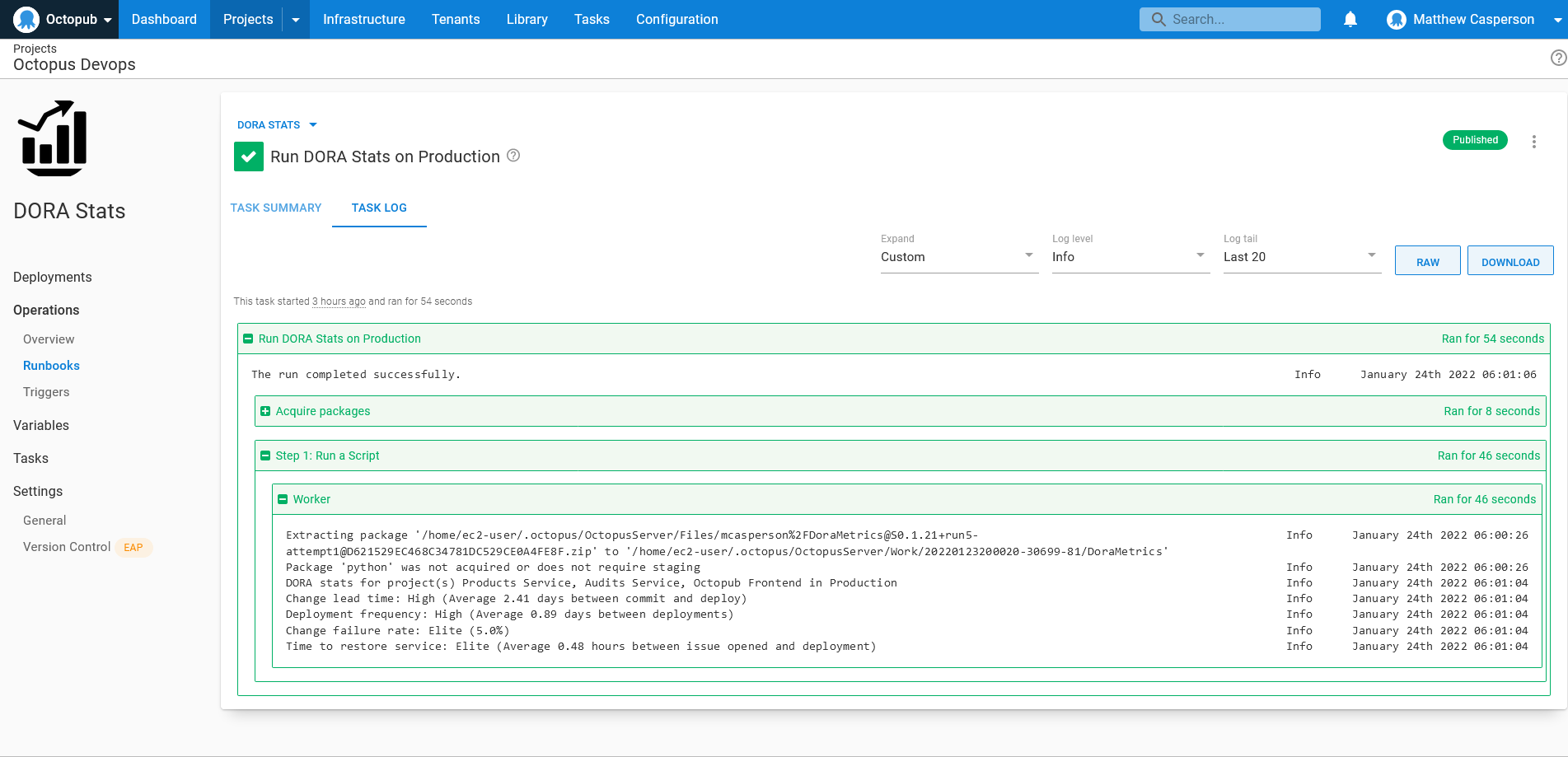

When the runbook is executed, it queries Octopus for the deployments of each project to the current environment, reads the build information attached to each release, looks up the linked issues through the GitHub API, and prints a rating for each of the four DORA metrics.

With a single click of the RUN button, you can quickly measure the performance of your projects:

DORA metrics in Octopus

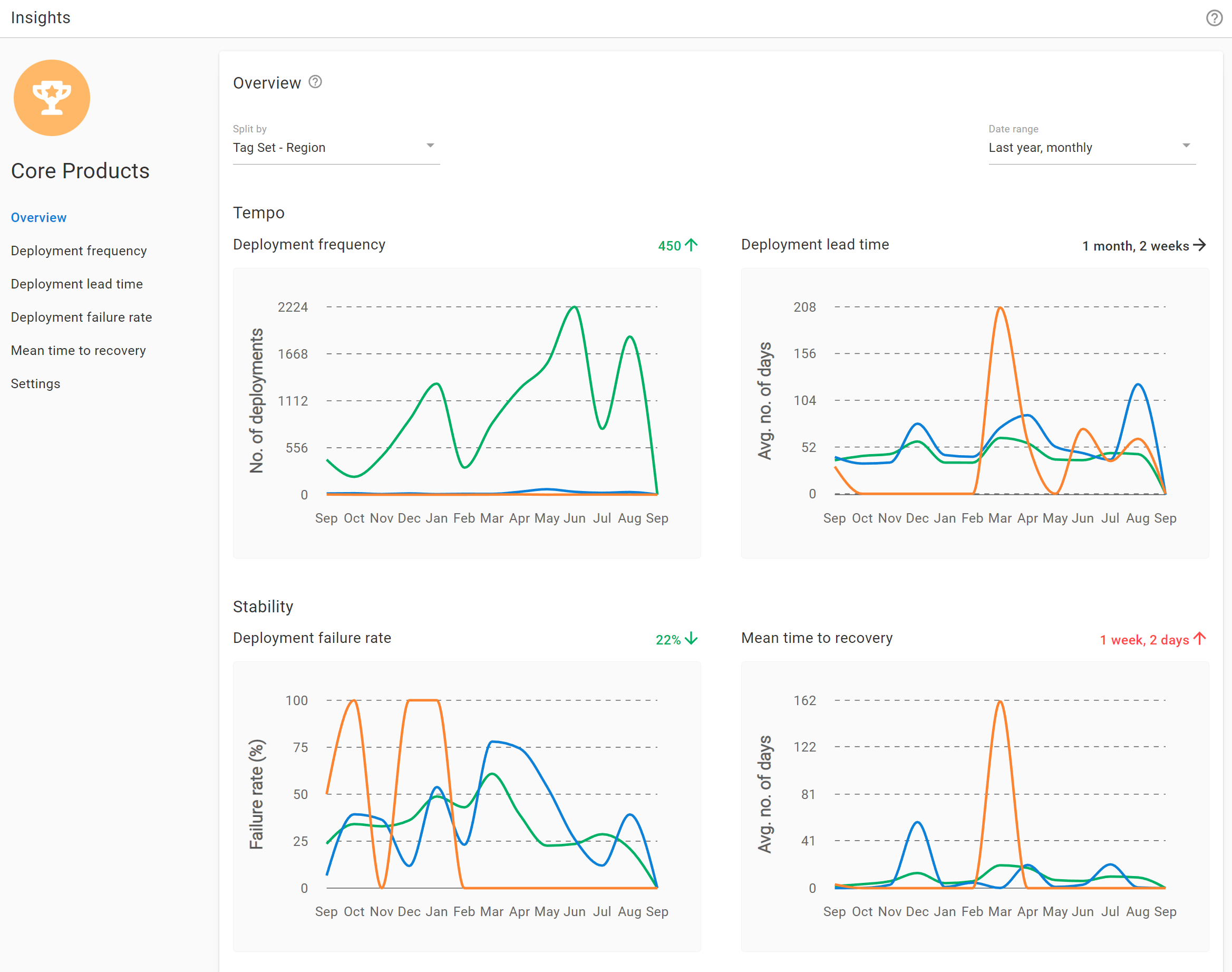

Octopus Deploy 2022.3+ includes support for DevOps Insights with built-in DORA metrics. This built-in reporting gives you better visibility into your company’s DevOps performance by surfacing the 4 key DORA metrics:

- Deployment Lead Time

- Deployment Failure Rate

- Deployment Frequency

- Mean Time to Recovery

These metrics help you make informed decisions on where to improve and celebrate your results.

Project insights are available for all Octopus projects, including existing projects.

Space level insights are available for Enterprise customers and cover all projects in a space.

Space level insights are available via the Insights tab and provide actionable DORA metrics for more complex scenarios across a group of projects, environments, or tenants. This gives managers and decision makers more insight into the DevOps performance of their organization in line with their business context, such as team, portfolio, or platform.

Space level insights:

- Aggregate data across your space so you can compare and contrast metrics across projects to identify what’s working and what isn’t

- Inform better decision making: identify problems, track improvements, and celebrate successes

- Help you quantify DevOps performance based on what’s actually happening as shown by data

Together these metrics help you qualify the results of your DevOps performance across your projects and portfolio.

Learn more about DevOps Insights.

Conclusion

The DORA metrics represent one of the few rigorously researched insights available to measure your team’s DevOps performance. Between the information captured by Octopus build information packages and issue tracking platforms like GitHub, you can rank your performance against thousands of other software development teams around the globe.

In this post, you saw a sample Python script that queried the Octopus and GitHub APIs to calculate the four DORA metrics, and then ran the script as an Octopus runbook. The sample script can be easily applied to any team using GitHub Actions, or modified to query other source control and issue tracking platforms.

Read the rest of our Runbooks series.

Happy deployments!