Introduction

This is the second part of a series on the evolution of software delivery, which is divided into 3 eras. The first article covered the early phased models created between 1950 and 1990. This part covers the rise of lightweight software methods from 1990 to 2010, using three examples to illustrate the different approaches taken during these 2 decades:

- Scrum

- Extreme Programming (XP)

- Kanban Method

Early software delivery models were created in response to specific problems that arose when ad hoc, code-and-fix software development was used on large-scale software projects. Many factors shaped these early processes, but beneath their heavyweight sequence of phases, documents, and controls lay a number of key ideas that would inspire the next era of lightweight methods.

When lightweight methods were introduced in the 1990s, they were presented as solutions to the problems of the existing models. Most of those problems weren’t inherent in the models themselves but arose from how they were applied. Some issues were caused by misunderstandings about the models, and much of the “heavy” in “heavyweight” came from industry procurement requirements, certifications, and maturity models.

Organizations were producing documentation that served no useful purpose other than ticking off a checklist. However, this documentation was essential to the sales process, as it was commonly a requirement for government and enterprise contracts.

Despite Benington, Royce, and Boehm recommending iterative, incremental, and evolutionary methods of software delivery, projects were often run as one large batch of work. Benington expressed his frustration at this form of top-down programming, where the specification was fully completed before any software was written. Instead, he recommended starting with a small prototype and evolving it towards a complete system.

Similarly, Royce said the way to deliver complex software was to work in small incremental changes. Boehm encoded a constant cycle of increments into his model, encouraging a review between each cycle to adjust the direction of the software and to take a risk-based approach to the process being used.

Lightweight methods weren’t a new idea; they represented a rediscovery of the many benefits of smaller batches.

What was changing

The software revolution followed Thomas Kuhn’s description of scientific revolutions. A revolution requires 2 ingredients:

- A set of prior assumptions.

- Some new discovery that forces a re-evaluation and the construction of a new set of assumptions.

The prior assumptions were established in the early phased software delivery models. There were plenty of drivers that contributed to the re-evaluation of established processes.

Higher-level languages that enabled multi-paradigm approaches were becoming common, and object-oriented programming was growing in popularity.

Both Microsoft and Borland made acquisitions to add version control tools to their developer offerings. More than 20 version control systems were launched between 1990 and 2010, including Subversion, SourceSafe, ClearCase, Mercurial, Git, BitKeeper, and Team Foundation Version Control.

Programmers were working on their own dedicated machines rather than being allocated time on shared machines. They could now compile code and run tests locally. They were also using IDEs (Integrated Development Environments) that combined key developer tools, like a text editor and compiler, in a single user interface. Instead of waiting for compilation to happen out-of-band, programmers had a fast feedback loop.

A more diverse range of organizations was creating software and deploying it to general-purpose machines. Attitudes to software were shifting from deterministic approaches inspired by construction metaphors to adaptive modes of thought. Information was also spreading faster, with both the World Wide Web and wikis arriving in the 1990s.

Following Toyota’s partnership with General Motors in the US, ideas from the Toyota Production System were becoming established in the West. Taiichi Ohno’s book on the subject was translated into English in 1988, and it was followed in 1990 by James P. Womack’s The Machine That Changed The World, which spread the concepts of lean production and inspired a lean software development movement.

The constraints and economic models of software delivery had fundamentally changed. Progressive ideas on managing people and work were opening up new ways to collaborate on software delivery.

In response to the heavyweight processes that had become firmly established, the language that emerged for software delivery in the 1990s was about being light, lean, agile, and adaptable.

Scrum

Possibly the most famous lightweight method, Scrum was created when Jeff Sutherland and Ken Schwaber collaborated to combine the approaches they had each been using at Easel Corporation and Advanced Development Methods. It was likely inspired by Honda’s Hiroo Watanabe, who used a rugby metaphor to describe how he wanted groups to collaborate when developing new products. Watanabe wanted teams to be cross-functional, rather than arranged in a sequence. He said:

I am always telling the team members that our work is not a relay race in which my work starts here and yours there. Everyone should run all the way from start to finish. Like rugby, all of us should run together, pass the ball left and right, and reach the goal as a united body.

This quote was used in product development and knowledge management works by Ikujiro Nonaka and Hirotaka Takeuchi, who identified 2 styles of phased or sequenced development:

- Relay, where the baton is passed from one group to the next.

- Sashimi, where adjacent phases overlap, like the overlapping slices of raw fish in the Japanese dish of the same name.

They said that instead of these approaches, organizations should use a rugby-style process with all groups working together continuously, incrementally, and spirally. This would allow the product direction to be changed in response to the environment. This was the foundation for the design of Scrum.

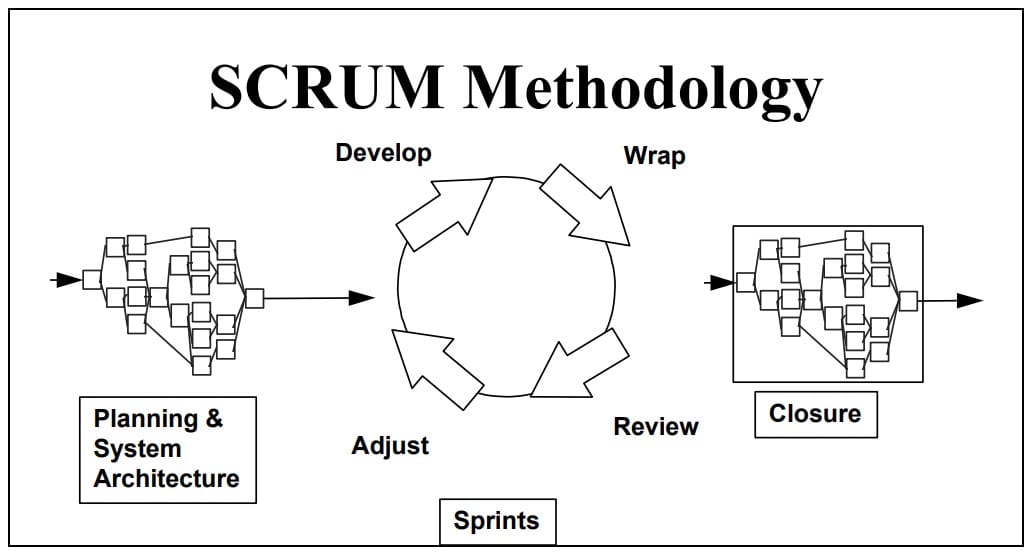

Source: SCRUM Development Process. Schwaber. 1995, revised in 1996.

Schwaber and Sutherland shared their Scrum methodology at the OOPSLA (Object-Oriented Programming, Systems, Languages and Applications) ’95 conference in Austin, Texas. The original design had an empirical process of short sprint cycles, with a planning phase at the start and a closure phase at the end. Importantly, each cycle was followed by a review that allowed the plan to be adjusted frequently. Scrum has since been revised multiple times and is generally referred to as a framework rather than a method.

Perhaps the most valuable insight from Scrum is that it recognized the complexity of software delivery and used empirical controls to manage it, rather than attempting to remove risk with a detailed deterministic plan. As Ken Schwaber said in the original paper:

An approach is needed that enables development teams to operate adaptively within a complex environment using imprecise processes. Complex system development occurs under rapidly changing circumstances. Producing orderly systems under chaotic circumstances requires maximum flexibility. The closer the development team operates to the edge of chaos, while still maintaining order, the more competitive and useful the resulting system will be.

In the 2020 edition of The Scrum Guide, the framework is defined through a set of values, accountabilities (formerly called roles), events, and artifacts. It largely defines a communication structure around time-boxed sprints, each of which delivers an increment of work. Scrum is an adaptive variation of the evolutionary methods that were emerging when the spiral model was written, designed to give teams more flexibility to adapt to change.

Extreme Programming

Developed in parallel with Scrum during the mid-1990s, Extreme Programming (XP) was inspired by object-oriented programming and the demand for shorter product cycles. Kent Beck was leading a project at Chrysler and invited Ron Jeffries to join it and help refine the concept.

In a break from traditional models and most other methods at the time, Beck defined XP through an economic model, a set of values, a process template, and a collection of technical practices.

The goal of XP was to flatten the cost-of-change curve, making software cheaper and easier to change. Updating software created through a large heavyweight project was considered prohibitively expensive, and the alternative, rewriting the software, was also too costly.

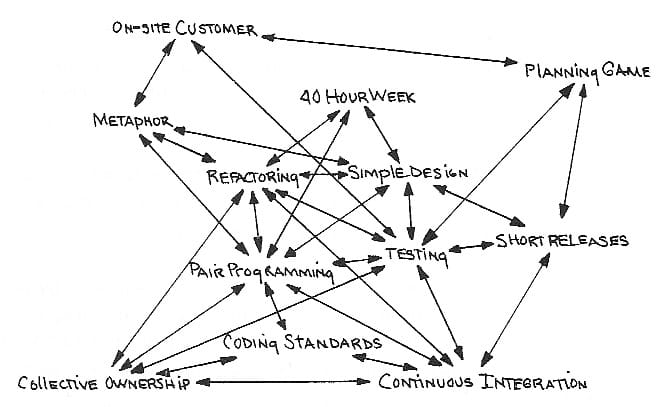

The technical practices were considered inter-dependent, so the full set were required to achieve the goal of flattening the cost curve. The “extreme” part of Extreme Programming refers to the maximal application of each practice.

Source: Extreme Programming Explained. Beck. 2000.

The process part of XP is designed to prevent either the business or the technical people from making decisions unilaterally, requiring decisions to be made by an informed partnership. The planning game is used to decide what goes into the next small release of the software, guided by the principle of making each release as small as possible while delivering the most valuable business requirements.

In this process, the values, principles, and technical practices ensure the cost of change is kept under control, which allows the frequency of deployments to be kept consistent over time.

Kanban Method

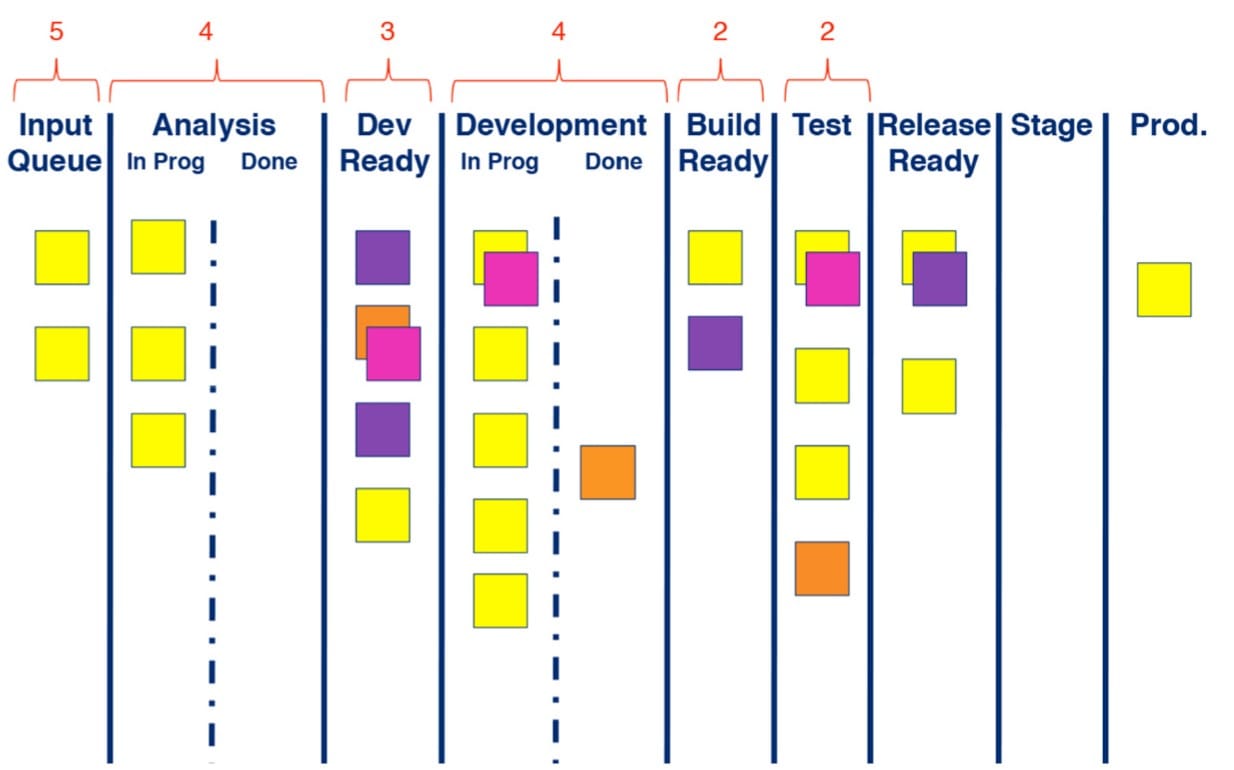

David Anderson combined ideas from the lean movement with Eli Goldratt’s Theory of Constraints and drum-buffer-rope concept as part of a project at Microsoft in 2004. Anderson reported the results in a paper named From Worst to Best in 9 Months, which was presented at various conferences. He later met Donald Reinertsen, who convinced him that he could turn these ideas into a full kanban system, a concept originally created by Taiichi Ohno at Toyota.

Kanban isn’t a set of prescribed steps. Instead, it is a technique for mapping your existing process as a value stream and continuously improving the flow of work through it. The value stream map is typically implemented as a visual board with columns representing steps, states, or queues. For example, an organization may create a board with columns for “analysis”, “coding”, “testing”, and “deployment” to visualize its current process. From this starting point, the organization embarks on a process of continuous improvement, using tools like work in process (WIP) limits to discover bottlenecks and improve flow efficiency. The effectiveness of improvements is tracked with metrics such as lead time, queue size, and throughput to understand the performance of the system.
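The flow metrics mentioned above can be derived from little more than the dates on which work items start and finish. The following is a minimal sketch, not Anderson’s method: the `WorkItem` type, the helper functions, and the sample dates are hypothetical, and it assumes lead time is measured from the day an item is started (treated as the commitment point) to the day it is finished.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class WorkItem:
    # Hypothetical work item: the day work started (assumed commitment point)
    # and, once delivered, the day it finished.
    name: str
    started: date
    finished: Optional[date] = None


def lead_times(items: List[WorkItem]) -> List[int]:
    """Lead time in days for every finished item (assumed: start to finish)."""
    return [(i.finished - i.started).days for i in items if i.finished is not None]


def throughput(items: List[WorkItem], start: date, end: date) -> int:
    """Number of items finished within the inclusive window [start, end]."""
    return sum(1 for i in items if i.finished is not None and start <= i.finished <= end)


def work_in_process(items: List[WorkItem], on: date) -> int:
    """Items started on or before `on` that were still unfinished at the end of that day."""
    return sum(
        1 for i in items
        if i.started <= on and (i.finished is None or i.finished > on)
    )


# Hypothetical sample data.
items = [
    WorkItem("A", date(2024, 1, 1), date(2024, 1, 5)),
    WorkItem("B", date(2024, 1, 2), date(2024, 1, 9)),
    WorkItem("C", date(2024, 1, 4)),
]

print(lead_times(items))                                      # [4, 7]
print(throughput(items, date(2024, 1, 1), date(2024, 1, 7)))  # 1
print(work_in_process(items, date(2024, 1, 4)))               # 3
```

Comparing these numbers before and after a change to the process, such as lowering a WIP limit, is one way to judge whether the change actually improved flow.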

The Kanban Method has no model for phases or steps, but is built around a set of 5 core practices:

- Visualize

- Limit WIP (Work in Process)

- Manage flow

- Make policies explicit

- Improve collaboratively and evolve experimentally

Instead of optimizing for “resource use”, Kanban optimizes for flow. This requires smaller batches and shorter lead times.

Source: Kanban. David J. Anderson. 2010.

This example board shows a sequence of stages and queues, with limits on each one. The stages are specific to the team that created this visual map. Work boards like this have become commonplace, but in Kanban the board must be an accurate reflection of the work taking place, there must be limits to the work in progress, and the board must be kept updated each time the process is adjusted and improved.
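The requirement that every column carries a limit can be sketched as a board that refuses to pull work into a full column. This is an illustrative sketch only: the `KanbanBoard` class and its methods are hypothetical, the column names reuse the example columns from the description of the value stream map, and the limit values are invented. A refused pull is the point where a team would start looking for a bottleneck.

```python
from typing import Dict, List


class KanbanBoard:
    """Hypothetical board in which every column has its own WIP limit."""

    def __init__(self, limits: Dict[str, int]) -> None:
        # Column order follows the dict's insertion order (left to right on the board).
        self.limits = dict(limits)
        self.columns: Dict[str, List[str]] = {name: [] for name in self.limits}

    def has_capacity(self, column: str) -> bool:
        """True if the column holds fewer items than its WIP limit."""
        return len(self.columns[column]) < self.limits[column]

    def add(self, item: str) -> bool:
        """Start new work in the first column, but only if that column has capacity."""
        first = next(iter(self.columns))
        if not self.has_capacity(first):
            return False
        self.columns[first].append(item)
        return True

    def pull(self, item: str, to_column: str) -> bool:
        """Move an item into `to_column` only if doing so respects that column's WIP limit."""
        source = next((c for c, held in self.columns.items() if item in held), None)
        if source is None:
            raise ValueError(f"{item!r} is not on the board")
        if not self.has_capacity(to_column):
            # The item stays where it is; a full column signals a possible bottleneck.
            return False
        self.columns[source].remove(item)
        self.columns[to_column].append(item)
        return True


# Hypothetical limits for the example columns.
board = KanbanBoard({"analysis": 2, "coding": 2, "testing": 1, "deployment": 1})
board.add("A")
board.add("B")
print(board.add("C"))  # False: analysis is already at its limit of 2
board.pull("A", "coding")
print(board.add("C"))  # True: pulling A into coding freed a slot in analysis
```

Keeping the limits in a single explicit dictionary loosely echoes the “make policies explicit” practice, although real boards usually carry richer policies than one number per column.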

David Anderson wrote about the path to maturity in his original book on Kanban:

First, learn to build high-quality code. Then reduce the work-in-progress, shorten lead times, and release often. Next, balance demand against throughput, limit work-in-progress, and create slack to free up bandwidth, which will enable improvements. Then, with a smoothly functioning and optimizing software development capability, improve prioritization to optimize value delivery.

Summary

In the earlier phased models, there were calls to start with a small prototype and evolve it incrementally towards a full system. With major technological progress and decades more experience of software development, the new lightweight methods encoded small batches and embraced the natural complexity of software delivery by building discovery into the core of the process. Rather than attempting to gather all the required information before creating the system, they used the process of writing the software as an information generator that allowed problems to be detected early, instead of delaying work to perform a lengthy analysis of potential future problems.

Following the example set at Honda, the lightweight methods were based on cross-functional teams working together to deliver software, rather than work being passed between functional groups.

The methods developed in these 2 decades were primarily empirical, based on experience and measured in a handful of case studies. Some of the authors of the new wave of lightweight methods came together to write The Agile Manifesto, a shared set of values and principles for software development that greatly increased the adoption of processes such as Scrum and Extreme Programming. The lean software movement took inspiration from the Toyota Production System and inspired methods such as Kanban.

Because the methods were a mixture of discovery activities, work management, and technical practices, organizations often combined more than one method to tailor their own software delivery process. For example, an organization could combine a product discovery process with a collaborative specification technique to generate options that would flow through a Kanban system, in which a team would apply its own custom set of technical practices.

As with the early software delivery models, the authors were often frustrated that people weren’t applying their approaches correctly. Progressive organizations were quick to adopt the new software delivery methods successfully, but latecomers commonly tried to change as little as possible when pressure mounted to adopt these new ways of working.

In organizations where roles and procedures were firmly established, Kanban would have been the ideal approach, allowing them to start by mapping what they were already doing. Instead, many of these late adopters sought out a prescriptive process, as this is what they were used to from the maturity models and certified standards they normally followed. This gave top-down approaches a foothold to be reinvented with new terminology.

The most successful methods provided either a statistical approach to work management or a set of technical practices that enabled early delivery of small batches.

Extreme Programming, in particular, shifted the focus from documentation and control steps towards technical capabilities, while Kanban provided a clear statistical way to measure the software delivery process.

The relationship between practices in Extreme Programming was empirical, but provided a starting hypothesis for the research and analysis that emerged in the next era of modern research-backed software delivery.
