Introduction

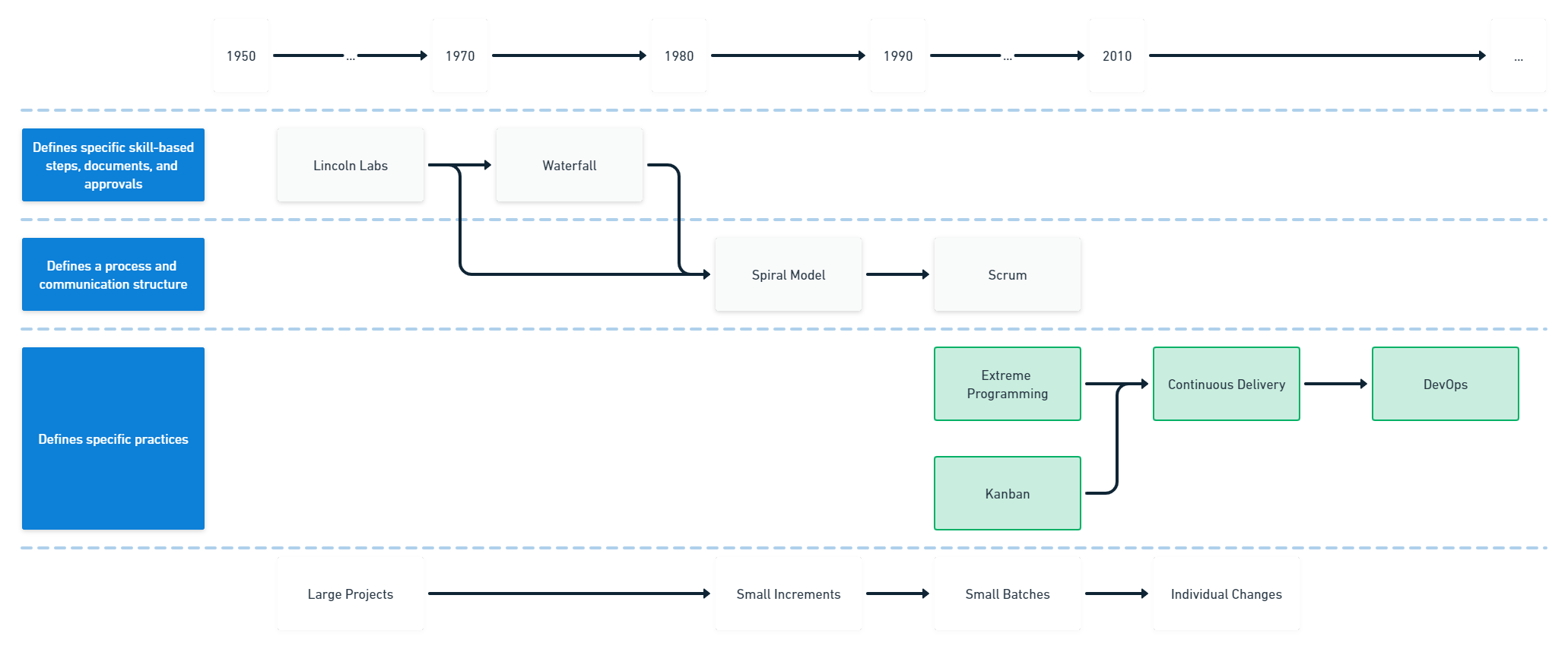

This is the third and final part in the software delivery series. The earlier parts covered the early era of model-based phased methods from 1950-1990 and the subsequent rise of lightweight methods from 1990-2010.

Now it’s time to look at the modern era, which has put specific practices to the test using research-backed methods. There are 2 examples included to illustrate the 2 parts of the trend:

- Continuous Delivery

- DevOps

The pioneering organizations that tackled software delivery in the 1950s discovered plenty of difficulties when scaling from one independent expert to large development teams. Adding phases, documents, and gateways ultimately failed to move the needle on these challenges. Recognizing that the additional overheads weren’t helping, the lightweight era stripped back the process and elevated collaboration on small batches of work, minimizing documentation and process.

Organizations using both the phased models and the lightweight methods recognized similar risks, but took different approaches to managing them. The original idea was to make the required software more deterministic by refining the plan, but there was a mass pivot to more adaptive planning that responded to change when the business benefits became clear.

The change from heavyweight models to lightweight methods was a highly visible and sometimes disruptive change, but the transition into the modern era was more subtle. Instead of a major course correction, there were 2 major new threads:

- What can we do to deliver faster and more frequently?

- How do we test that these things really work?

What was changing

Many ideas from the lightweight era remained as the modern era arrived. Primarily, progress was made by adding scientific methods of research and analysis to the previously empirical methods. However, there were other developments that contributed to the acceleration and direction of travel:

By 2010, most software development contracts were flexible and aligned to customer demand for partners who could deliver software incrementally. Drip-feeding investment into software development allowed the organizations buying software delivery to set a burn rate for the capital and gave them visible regular progress in the form of working software that would drive decision making, such as changing the requirements or stopping the project.

E-commerce became an essential channel for organizations. The early adopters of web-based stores, such as Amazon, eBay, and Zappos, were joined by streaming services and practically every retailer with a physical storefront. By 2010, worldwide e-commerce sales had reached 572 billion US dollars, according to J.P. Morgan figures reported in TechCrunch, with no signs of year-on-year growth slowing.

Cloud computing had arrived in 2002, and by 2010 Microsoft, IBM, and Google had just launched offerings to compete with Amazon’s AWS. Self-service and on-demand elastic computing capabilities were now available to more organizations than ever.

Developer tool sets aligned to modern software delivery were emerging. These allowed the full application development life cycle to be managed, including integration, builds, deployments, and monitoring.

Continuous Delivery

Continuous Delivery was created by Jez Humble and Dave Farley, and was named after the first principle of The Agile Manifesto:

Our highest priority is to satisfy the customer through early and continuous delivery of valuable software.

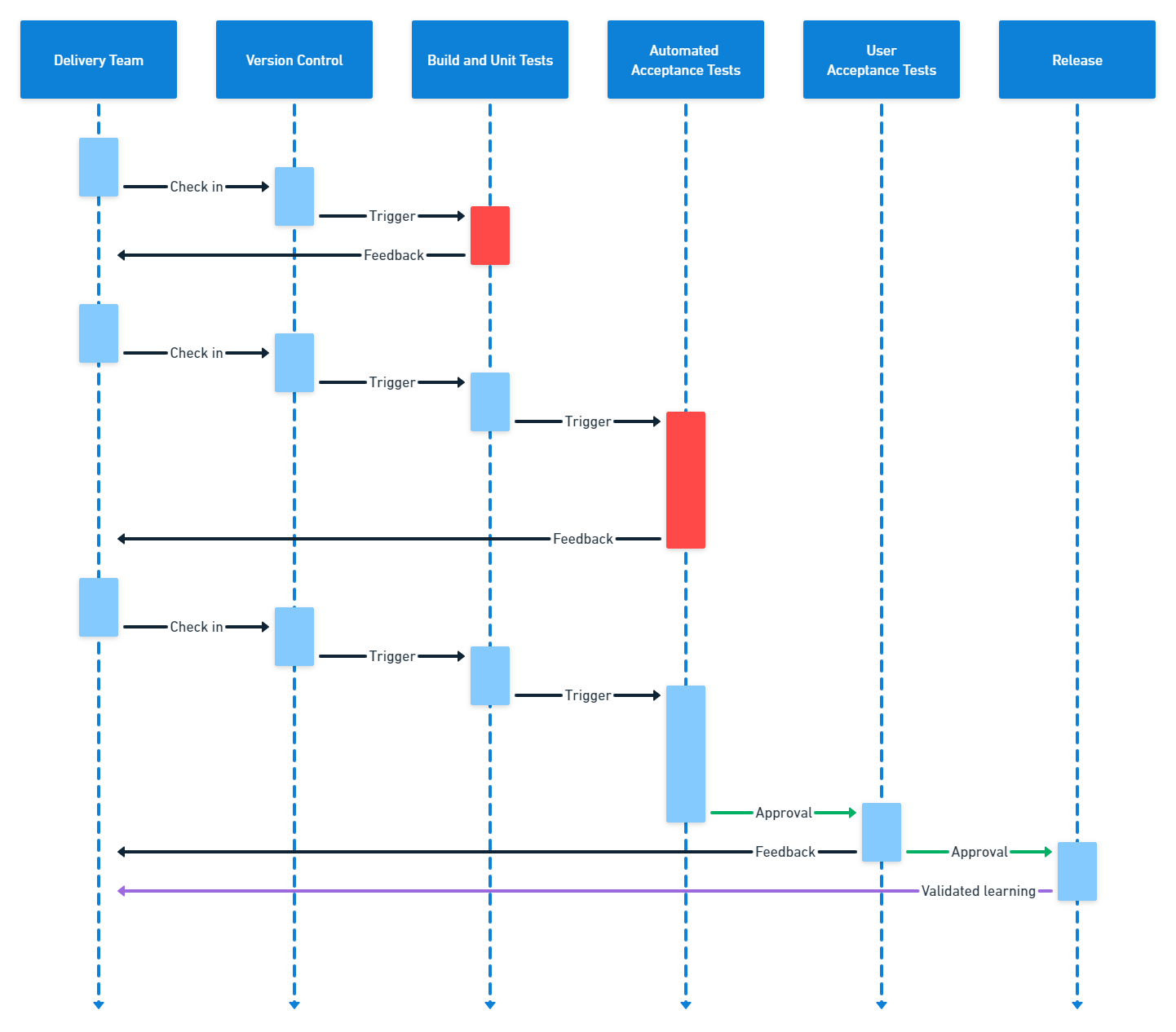

In Continuous Delivery, the deployment pipeline is used as a focusing lens to arrange and automate steps to ensure the full pipeline can run for each change made to the software. When a change is made, each step in the deployment pipeline is run until a validation step fails, or the software makes it to the production environment. It should be possible for a valid change to go live in an hour, with the initial build and test phase providing feedback in under 5 minutes.

The example deployment pipeline, based on an illustration by Jez Humble, shows a series of changes entering the deployment pipeline, with feedback being received from different stages. The stages for your own process, and whether they’re automatically triggered or wait for approval, may look different to this example.
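The "run each step until a validation step fails, or the software reaches production" behavior can be sketched in a few lines of Python. This is a minimal illustration, not how any particular tool implements it: the `Stage` class, the `run_pipeline` function, the stage names, and the toy checks are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Stage:
    """One step in a deployment pipeline (illustrative, not a real tool's API)."""
    name: str
    run: Callable[[str], bool]    # returns True when the change passes this stage
    needs_approval: bool = False  # True if a person must approve before it runs


def run_pipeline(change: str, stages: List[Stage], approved: bool = True) -> Optional[str]:
    """Run stages in order.

    Returns the name of the stage that stopped the change,
    or None if the change made it through every stage.
    """
    for stage in stages:
        if stage.needs_approval and not approved:
            return stage.name  # waiting at a manual gate
        if not stage.run(change):
            return stage.name  # validation failed, stop here
    return None


# Hypothetical stages with toy checks standing in for real builds and tests.
stages = [
    Stage("build and unit test", lambda change: True),
    Stage("automated acceptance test", lambda change: "broken" not in change),
    Stage("deploy to production", lambda change: True, needs_approval=True),
]

print(run_pipeline("fix typo", stages))                  # passes every stage
print(run_pipeline("broken feature", stages))            # stops at acceptance test
print(run_pipeline("fix typo", stages, approved=False))  # waits at production gate
```

Because every change runs the same ordered list of stages, a failure always points to a specific stage, which is where the fast feedback comes from.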

Like Extreme Programming, Continuous Delivery is centered around technical practices, rather than process steps and, like Kanban, it allows the steps and sequence to map your specific value stream. The deployment pipeline provides a view over your software process that can be used to continually improve how you deliver software.

Continuous Delivery encourages the early discovery of problems and the use of automation throughout the deployment pipeline, including tests, infrastructure, and deployments. Octopus Deploy has a blue paper on the importance of Continuous Delivery in their resource center.

DevOps

Initially, DevOps was quite a vague concept that encouraged development teams and operations teams to work more closely. In the confusion, 2 things happened. People started adding a new third team to their organization called “DevOps”, and organizations started inventing terms to add more things to the collaboration, like “DevSecOps”, which included security.

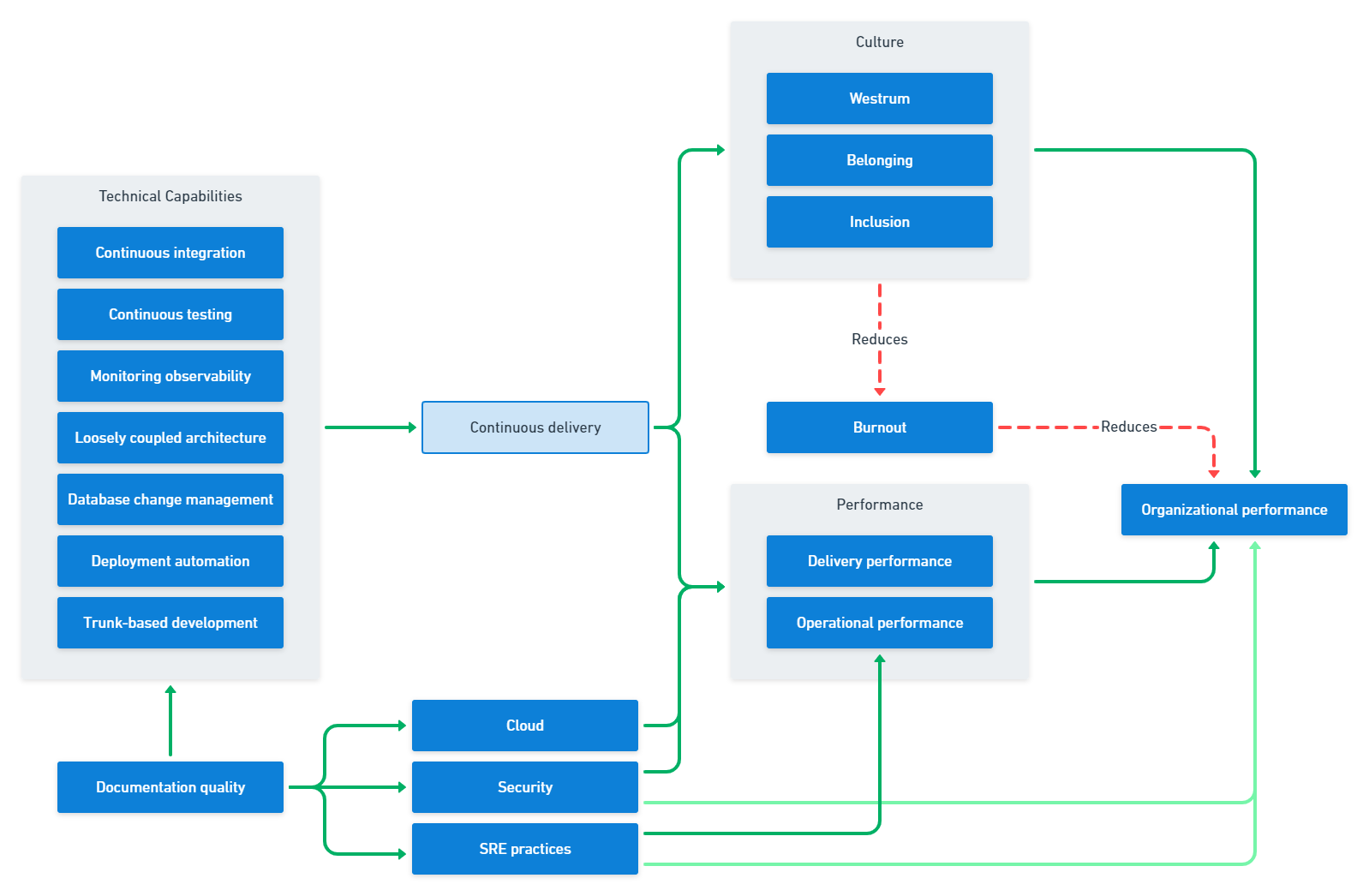

The introduction of the State of DevOps report has brought structure to DevOps in the form of a concrete set of capabilities across technical and cultural disciplines. Additionally, the report uses a research-backed analysis to test the relationships between these practices.

The structural equation model above shows statistically proven drivers between capabilities and the performance of an organization. The technical capabilities are closely linked to Continuous Delivery, which forms the basis of the software delivery. The cultural capabilities are just as important and are also significant drivers of the organization achieving its goals.

The DevOps organization includes all skill sets required to deliver the software, including security, architecture, and compliance, so there’s no need to extend the title from “DevOps” to “DevSecArchOps” as all these characteristics are already included.

The best known software delivery method

As of today, Continuous Delivery and DevOps are the best way to deliver software that the industry knows of. As the research continues, it’s likely that the relationship between practices will become clearer. Some additional capabilities will emerge and be tested, such as the Team Topologies concepts mentioned in the most recent State of DevOps report. Some of these will be proven to be useful and others will be left behind. The key to this modern era is that we can be guided by the research, but ultimately have to design how we move from where we are today to where we want to be.

For this reason, the combination of Kanban as a value stream mapping tool, with the technical capabilities of Continuous Delivery, and the full set of practices described in DevOps is the best known software delivery approach. With Kanban, an organization can map their current value stream as the starting point for implementing the specific capabilities described in Continuous Delivery and DevOps.

The trend for software delivery has been away from defining the specific steps, documents, and approvals an organization should use, and toward providing the specific elements of successful software delivery. It has become clear over the course of many decades that controlling risk with more and more process fails, whereas a simple concept such as small batch sizes is hugely successful. In the spirit of Extreme Programming, if small batch sizes are better, why not make them as small as possible: the size of a single code change?

Delivering frequently minimizes risk and encourages the automation of mundane tasks. When an organization removes rote work, people are able to do work that fully uses their abilities, which is highly motivating. When a person is following a checklist to perform a repetitive task, they’re less engaged and mistakes are more likely.

Reduce batch size first

The CHAOS report was designed to highlight the state of the software industry and is often cited as an encouragement to move from heavyweight models to lightweight methods. An often overlooked finding in the report is that batch size has more impact than process:

| Project size | Waterfall | Agile |
|---|---|---|
| Large | 8% | 19% |
| Medium | 9% | 34% |
| Small | 45% | 59% |

Success rates by size and project method 2016-2020.

The above table, from the 2020 CHAOS report, shows that project size has more impact than the specific process used to deliver software, even though projects in their agile category outperform those in the waterfall category. Essentially, making projects small has roughly 3x more impact on the success rate than making them agile.
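One way to check the "3x" figure against the table is to compare percentage-point gains. The sketch below assumes that reading, since the article doesn't state how the figure was derived. It compares the gain from shrinking a project from large to small with the gain from switching a project of the same size from waterfall to agile.

```python
# Success rates (%) from the table above: {project size: (waterfall, agile)}
success = {
    "Large": (8, 19),
    "Medium": (9, 34),
    "Small": (45, 59),
}
WATERFALL, AGILE = 0, 1

# Gain from going large -> small while keeping the method fixed
size_gain_waterfall = success["Small"][WATERFALL] - success["Large"][WATERFALL]  # 37 points
size_gain_agile = success["Small"][AGILE] - success["Large"][AGILE]              # 40 points

# Gain from going waterfall -> agile while keeping the size fixed
method_gain_large = success["Large"][AGILE] - success["Large"][WATERFALL]  # 11 points
method_gain_small = success["Small"][AGILE] - success["Small"][WATERFALL]  # 14 points

# Compare the two effects from the same starting point (large waterfall),
# and for the same end point (small agile).
ratio_from_large_waterfall = size_gain_waterfall / method_gain_large
ratio_to_small_agile = size_gain_agile / method_gain_small

print(f"Size vs method, starting from large waterfall: {ratio_from_large_waterfall:.1f}x")
print(f"Size vs method, arriving at small agile: {ratio_to_small_agile:.1f}x")
```

Both comparisons land close to 3, which is consistent with the claim under this percentage-point reading. Other readings, such as relative improvement, would give different numbers.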

The CHAOS report is based on projects meeting original forecasts and doesn’t adjust for organizational bias, such as unusually positive or negative forecasting methods compared to the industry average. This is another reason the State of DevOps Report has become so important to the industry, as it publishes its method and uses statistical techniques to generate the best possible insights from the data.

The modern era of software delivery doesn’t require an organization to be first to market. Instead, it provides the tools to be quick to market with a product that provides real value to its customers. It is possible to win by being second to market with a better product.

The future of software delivery

Once we embrace the research-backed modern era of software delivery, it’s possible for competing research to emerge that conflicts with our current assumptions. Organizations need to treat this process scientifically, checking sources and designing their own experiments rather than blindly following thought-leaders. The pattern described in Thomas Kuhn’s The Structure of Scientific Revolutions will apply, which means some organizations won’t be able to respond to emerging data until they cycle through a generation of staff who are set in their ways. This could prove fatal to some organizations.

Internally, each organization should use the continuous improvement process to find marginal gains that combine to provide a competitive advantage. As process and capability improvements provide diminishing returns, the cultural aspects will become of key importance, as attracting and retaining the best people becomes the biggest marketplace advantage. Having employees who are advocates and promoters of the organization will be essential to winning talent in the hiring market.

Summary

One of the most stunning findings of this investigation into software delivery is that, from the very first model, the authors have all strongly advised delivering a small system and then evolving it incrementally into something larger. John Gall summed this up perfectly in his 1975 book Systemantics, when he said:

A complex system that works is invariably found to have evolved from a simple system that worked. The inverse proposition also appears to be true: A complex system designed from scratch never works and cannot be made to work.

Even if your organization isn’t ready to execute on all the capabilities of Continuous Delivery and DevOps, one change that could be made today is reducing the batch size, which will require you to look at how you can streamline the flow of work through your delivery pipeline.

We are building out content on DevOps and Continuous Delivery to help you adopt the principles and practices that will improve your software delivery performance.
