What is a CI/CD pipeline?

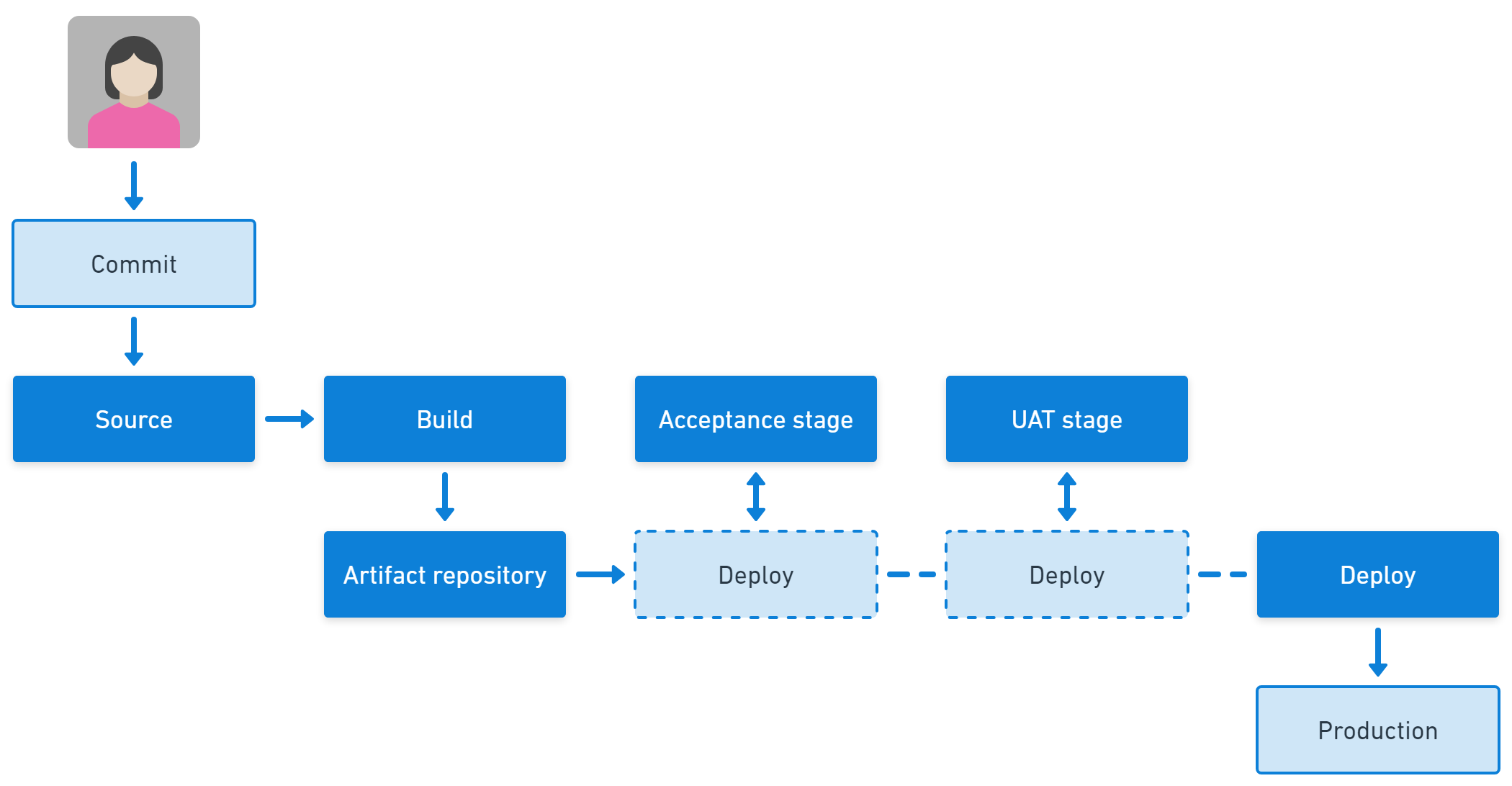

A CI/CD pipeline is an automated sequence of steps to build and test an artifact (Continuous Integration) and progress it through all the stages required to make it available to users in production (Continuous Delivery). The pipeline starts when a developer commits code to a shared repository, which triggers automated builds, tests, and deployments. The main objectives of CI/CD pipelines are to increase throughput, reduce manual errors, and deliver software updates more frequently and reliably.

Continuous Integration (CI) emphasizes regularly merging smaller code changes from multiple contributors to the main branch, followed by a build process and automated testing. Continuous Delivery (CD) extends CI by taking the output of the CI process and progressing it through all the steps needed to validate it, ultimately deploying it to production. This streamlines the development cycle, allowing rapid and reliable delivery of high-quality software.

Benefits of CI/CD pipelines

Here are some of the key advantages of implementing a CI/CD pipeline:

Reducing deployment times through automation

A software version may be deployed many times as it progresses through development, test, staging, and production environments. Automated deployments reduce the elapsed time of deployments and eliminate handling time for the deployment process. This means team members can spend more time on high-value tasks, rather than toil.

Automated deployments also make sure the process is consistent across environments. The same steps will be done in the same order every time, eliminating deployment failures caused by steps being missed or applied in the wrong sequence. This makes the software more stable as deployment failures caused by “checklist errors” are eliminated.

Continuous feedback for improvement

Continuous feedback is crucial for modern software teams. The ultimate goal is to get feedback from real users frequently and while the features are being actively developed, rather than finding out months later that a feature doesn’t solve the intended problem. Real world feedback is the only way to reduce the market risk of a feature. CI/CD pipelines help you get the software to users sooner to get feedback while it can still be acted on.

Intermediate feedback is baked into CI/CD pipelines and is used to tell developers as quickly as possible if there’s a problem with their changes. When a developer commits code, they should know within 5 minutes if the build and test stage has a problem, so they can resolve it with their changes fresh in their mind. Longer running automated tests are deferred, but still run before any human spends time validating or using the software.

Improving team collaboration

Continuous feedback encourages healthy collaboration and helps teams resolve problems early and cheaply. Teams can review issues discovered in the software to ensure they can be detected automatically in the future, at the earliest possible feedback stage. With fast feedback, developers can work on small, manageable code changes that are integrated frequently into the main branch in version control. This removes the need to pause feature development to integrate large change sets at the end of long development cycles.

If the CI/CD pipeline includes manual validation steps, automated tests should run first to stop bad versions from reaching them, which minimizes time wasted on software versions with basic problems. When a fault is detected, the automation of the pipeline reduces the chance of it being a deployment or configuration error, and the small batches make it easier to find the cause.

Phases of a CI/CD pipeline

Commit stage

The commit stage marks the first step in a CI/CD pipeline, where developers submit their code changes to a shared version control repository, typically a Git repository. Before committing, a developer might need to integrate changes from others into their working copy, although making frequent commits helps minimize the risk of conflicts.

During this stage, pre-commit checks like linting, static code analysis, and security scans are run to catch syntax errors, enforce coding standards, and identify vulnerabilities early on. These checks ensure that only high-quality, secure code advances through the pipeline. Additionally, some pipelines include peer code reviews at this stage, providing an extra layer of scrutiny before the developer merges code into the main branch.

Source stage

The commit from the developer triggers the pipeline to fetch the latest code from version control and download the dependencies, tools, and configuration needed to ensure the environment is ready for the build stage.

Modern pipelines often prepare an isolated container environment that has all the required code and tools, and which can be discarded and recreated reliably. Older pipelines may use a pre-configured agent, which can be more difficult to reproduce on demand.

Build stage

In the build stage, the code is compiled into an artifact, such as a binary, package, or container, and fast-running tests are run to detect problems with the new software version. Automated tools like Jenkins typically handle these processes, helping to keep builds reproducible and consistent.

Successful builds result in deployable artifacts, while failures trigger alerts that notify the team, enabling quick issue resolution. This stage validates the correctness of code, ensuring that it integrates well and performs as expected. By standardizing the build process, teams can easily trace back to the source of any issues that arise, facilitating quicker fixes.

Artifact repository stage

After a successful build, the resulting artifact should be stored in a repository, like JFrog Artifactory or Nexus. This artifact serves as the definitive version of the software, and your CI/CD pipeline should consistently reference this version to ensure that the artifact validated is the same one deployed to end users. Additionally, storing this artifact allows for easy redeployment if needed later.

Artifact repositories maintain the chain of custody for software versions, preventing issues arising from rebuilding the same code with different dependencies or updated build settings. Preserving the output of a successful build is always simpler than attempting to recreate the exact conditions under which it was originally produced.
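One way to picture the chain of custody is a digest recorded at build time and checked again before deployment. The sketch below is illustrative only: the function names and the sample artifact bytes are assumptions, and real artifact repositories provide their own checksum mechanisms.

```python
import hashlib


def artifact_digest(data: bytes) -> str:
    """Return the SHA-256 hex digest of an artifact's bytes."""
    return hashlib.sha256(data).hexdigest()


def verify_artifact(data: bytes, expected_digest: str) -> bool:
    """Check that the bytes about to be deployed match the recorded digest."""
    return artifact_digest(data) == expected_digest


# Hypothetical artifact contents; a real pipeline would read the package file.
built = b"example-app 1.4.2 package contents"
recorded = artifact_digest(built)  # stored alongside the artifact at build time

print(verify_artifact(built, recorded))                # same bytes as the build
print(verify_artifact(built + b" rebuilt", recorded))  # bytes changed by a rebuild
```

A mismatch would indicate that the package being deployed is not the one the pipeline validated.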

Acceptance stage

In the acceptance test stage, the software version is deployed to an environment where automated smoke tests and acceptance tests run. While the build stage ensures the system functions correctly on a technical level, the acceptance test stage verifies it on both functional and non-functional levels.

A software version that passes this stage should inspire high confidence that it behaves as users expect and aligns with the customer’s requirements. Conversely, if a version fails this stage, it prevents the software from advancing to time-consuming stages like manual testing or potentially affecting users.

User acceptance and capacity testing stage

Many organizations perform additional testing like exploratory testing, user acceptance testing, or capacity testing. When manual testing is necessary, it should be done after the automated acceptance stage to avoid spending time on software versions that fail on automated criteria. Testers should have the ability to deploy to their test environments independently.

If issues are discovered during this stage, consideration should be given to how these could be detected earlier in an automated stage. Conversely, if no issues are found for an extended time, it may be possible to reduce or eliminate tasks in this stage.

Deploy stage

The deploy stage is the final and often most critical step in the CI/CD pipeline. Here, the validated and tested artifacts are released into the production environment, where users will interact with the application.

Deployment involves several complex steps, including provisioning infrastructure, setting up databases, configuring environment variables, and deploying the application code. In modern DevOps practices, this stage is fully automated to ensure consistency and reduce the likelihood of human error.

For instance, in a Kubernetes environment, the deploy stage might involve updating Kubernetes manifests, applying them to the cluster, and managing the deployment rollout process, such as performing rolling updates or rollbacks. The goal of the deploy stage is to ensure a seamless, reliable deployment that can be executed repeatedly with minimal risk.
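The phases described in this section can be sketched as an ordered list of steps in which a failure stops the version from progressing. This is a minimal illustration, not how any particular CI/CD tool is implemented: the stage names follow the headings above, and each step is a stand-in function that simply reports success or failure.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first failure. Returns a result log."""
    log = []
    for name, step in stages:
        ok = step()
        log.append(f"{name}: {'passed' if ok else 'failed'}")
        if not ok:
            break  # later stages never see a version that failed earlier
    return log


# Stand-in steps; the acceptance stage simulates a failing test run.
stages = [
    ("source", lambda: True),
    ("build", lambda: True),
    ("store artifact", lambda: True),
    ("acceptance", lambda: False),
    ("manual testing", lambda: True),
    ("deploy", lambda: True),
]

for line in run_pipeline(stages):
    print(line)
```

Because the simulated acceptance stage fails, the manual testing and deploy steps are never reached, which is the behavior the acceptance stage is meant to provide.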

5 success pillars for CI/CD pipelines

1. Speed

A good CI/CD pipeline reduces toil, eliminates waits, and uses automation to increase process speed and reliability. You can optimize the pipeline with parallel processing of builds or tests and by selecting best-in-class CI and CD tools. Fast feedback from a quick pipeline allows for rapid iterations and reduces batch sizes, which increases software delivery performance.

A slow pipeline forces developers to compromise good practices to remain productive. If the pipeline runs slowly, they will naturally commit less often and incur the penalties of merge problems and later feedback. Fast pipelines make it possible to commit small low-risk changes frequently.

2. Reliability

An effective CI/CD pipeline needs to be robust and reliable. A flaky automated test ends up being ignored or commented out, and this applies throughout the pipeline. A key indicator of a good pipeline is that people trust it to work and understand that when it reports a failure they need to take action.

The CI pipeline should fail fast when there’s a problem and the CD pipeline should make it easy to resolve problems to unblock a deployment when there are transient faults. Applying the right failure-handling strategy to different pipeline stages is crucial.
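As a rough sketch of applying different failure-handling strategies, the example below retries a step only when it raises an error marked as transient, and lets every other error fail immediately. The `TransientError` class, the `retry` helper, and the simulated deployment step are all hypothetical names introduced for illustration.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for faults worth retrying, such as a network timeout."""


def retry(step: Callable[[], T], attempts: int = 3, base_delay: float = 1.0) -> T:
    """Retry on TransientError with exponential backoff; other errors fail fast."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return step()
        except TransientError:
            if attempt == attempts:
                raise  # out of attempts, surface the failure
            time.sleep(base_delay * 2 ** (attempt - 1))
    raise AssertionError("unreachable")


calls = {"count": 0}


def flaky_deploy() -> str:
    """Simulated deployment step that fails twice before succeeding."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise TransientError("connection reset")
    return "deployed"


print(retry(flaky_deploy, attempts=3, base_delay=0))
```

A build or test failure would not be wrapped in `TransientError`, so it would stop the run on the first attempt rather than being retried.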

3. Accuracy

Automation usually brings a high degree of accuracy to the CI/CD pipeline. Making sure steps are performed in the right sequence and in the same way every time reduces errors unrelated to the software version, such as configuration errors or environment mismatches.

As a software version is progressed through different environments, confidence increases in the deployment process, too. Using the same process to deploy to all environments means the deployment process is tested at least as often as the software you’re deploying.

4. Compliance

A solid CI/CD pipeline will make it easier for developers to meet compliance and auditing requirements. Your pipeline should help you evidence adherence to the process and capture any sign-off requirements. The CI and CD tools you use will also provide an audit trail of pipeline runs and changes made to the pipeline process.

You can also use automated checks in the pipeline to make sure coding standards are followed, third party dependencies comply with license requirements, and appropriate reviews have taken place.
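A license check of this kind might look like the sketch below. The allowlist, the package names, and the manifest format are assumptions made for illustration; dedicated license-scanning tools read real dependency metadata instead.

```python
from typing import Dict, List, Set

# Hypothetical policy: license identifiers this organization allows.
ALLOWED_LICENSES: Set[str] = {"MIT", "Apache-2.0", "BSD-3-Clause"}


def license_violations(dependencies: Dict[str, str]) -> List[str]:
    """Return sorted names of dependencies whose license is not on the allowlist."""
    return sorted(
        name for name, license_id in dependencies.items()
        if license_id not in ALLOWED_LICENSES
    )


# Hypothetical manifest mapping package name to license identifier.
deps = {"web-framework": "MIT", "pdf-tool": "AGPL-3.0", "json-lib": "Apache-2.0"}
violations = license_violations(deps)
print(violations)  # a pipeline step would fail the run if this list is non-empty
```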

CI/CD pipelines remove many reasons for privileged access to infrastructure, meaning you can reduce the need to use administrative access to terminals or machines. Within the tools, role-based access control makes sure people have the lowest access levels required to perform their role. Granting access to trigger a deployment through a CI/CD pipeline is less risky than giving administrative access to run commands.

5. Security throughout

Bringing security into the CI/CD pipeline moves organizations from an audit-based security mindset to a continuous security model. Security practices can be embedded into the pipeline at all stages from code commit to deployment.

Automated security testing and static code analysis can be used to increase security without slowing down the pipeline. This maintains developer productivity and fast feedback loops. If specific manual tasks are required, they should be added to the user acceptance stage, so only good software versions are manually checked.

CI/CD pipeline challenges and solutions

Long-lived branches

Long-lived branches in a CI/CD pipeline can pose significant challenges, especially in terms of integration and maintaining code quality. These branches are typically used for features or versions that require extended development time. However, they can diverge significantly from the main branch, leading to complex merge conflicts and integration issues when it’s time to merge back.

The longer a branch exists without integration into the main branch, the higher the risk of conflicts, duplicated work, and integration difficulties. Additionally, long-lived branches can slow down the feedback loop, as the code in these branches may not benefit from Continuous Integration processes like automated testing and code reviews.

To mitigate the challenges associated with long-lived branches, teams can adopt strategies like feature toggles or keystoning so they can practice trunk-based development, or frequent merges into the main branch. Feature toggles allow incomplete features to be merged into the main branch without being activated in production, reducing the lifespan of branches. The keystoning technique adds testable functionality to the system without making it visible, with the final commit being the keystone that makes it available to users. Trunk-based development encourages frequent integration into the main branch, minimizing the divergence between branches.
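A feature toggle can be as simple as a named boolean that guards the new code path. The sketch below is a minimal illustration with hypothetical names (`TOGGLES`, `is_enabled`, `checkout`); production systems usually load toggle state from configuration or a toggle service rather than a hard-coded dictionary.

```python
from typing import Dict

# Hypothetical toggle state; the new checkout is merged but switched off.
TOGGLES: Dict[str, bool] = {"new_checkout": False}


def is_enabled(name: str) -> bool:
    """Unknown toggles default to off so unfinished code stays hidden."""
    return TOGGLES.get(name, False)


def checkout(total: float) -> str:
    """Route to the new flow only when its toggle is on."""
    if is_enabled("new_checkout"):
        return f"new checkout flow: {total:.2f}"
    return f"existing checkout flow: {total:.2f}"


print(checkout(19.99))
```

With the toggle off, the incomplete flow ships to production in the main branch without users seeing it. Switching the toggle on later activates it without another merge.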

Environment limitations

Environment limitations often arise from the differences between development, testing, and production setups. These discrepancies can lead to the software behaving inconsistently across different stages. For instance, a feature might work perfectly in the development environment but fail in production due to variations in operating systems, database versions, or configurations.

Addressing these challenges involves using tools like Docker and Kubernetes to create consistent and isolated environments. Containerization allows developers to define environment specifications in code, ensuring uniformity across all stages.

Additionally, implementing infrastructure as code (IaC) with tools like Terraform can help manage and replicate environments accurately. Resource constraints, such as limited hardware or network bandwidth, must also be managed carefully. Scaling infrastructure dynamically using cloud services can alleviate these limitations, ensuring that the CI/CD pipeline has the necessary resources to operate smoothly.

Integration with legacy workflows

CI/CD pipelines require a set of complementary practices. The Continuous Delivery process adds supporting tools and techniques that make it possible to work in small batches with high confidence. Though this represents a complex socio-technical system, research has shown that the culture and technical practices a team adopts play a large role in successful software delivery.

Practices such as test automation, Continuous Integration of code into the main branch, loosely coupled teams, and documentation quality make CI/CD pipelines more likely to succeed. Though this demands an investment in tools and skills, the return on investment comes from increased software delivery performance, which also translates into improved performance against organizational goals.

The DORA Core Model is a good starting point for understanding the capabilities required. Mature teams can review extended models from the annual reports to find additional optimizations that solve problems they find during retrospectives and other continuous improvement processes.

Organizational design

To enable loosely coupled architectures, organizations must amplify communication where it’s needed and dampen the unnecessary communication caused by poor team design. Team Topologies can be used to make deliberate choices about team types and their interactions. Stream-aligned teams can focus on delivering value with support from enabling, platform, and complicated-subsystem teams.

When teams are designed well, information can flow, assisted by clear communication channels like Slack and informal huddles. Work can be visualized through collaborative tools like Jira, Trello, or Notion, so everyone can see the work in progress and help unblock tasks that have stalled.

Quality documentation, measured in terms of availability, clarity, and usefulness, and stored in a well-organized, searchable location, is a predictor of high-performing teams. CI/CD practices can have over 10x more impact when documentation quality is above average.

Best practices for building CI/CD pipelines

Work in small batches

Working in small batches is a fundamental principle for efficient CI/CD pipelines. By breaking down work into smaller, manageable pieces, teams can integrate, test, and deploy code more frequently. This approach reduces the complexity of changes, making it easier to identify and fix issues. It also allows for faster feedback, as smaller changes can be tested and reviewed more quickly than larger, more complex updates.

In a CI/CD context, small batches are needed for Continuous Integration, where developers frequently merge small pieces of code into the main branch. This minimizes the risk of integration conflicts and ensures that code changes are regularly validated by automated tests. Tools like Git encourage small, frequent commits, which can then be automatically built and tested in the CI pipeline. Working in small batches also supports Continuous Deployment, as smaller, well-tested increments can be deployed to production with greater confidence and less risk.

Automate practically everything

Automation is the cornerstone of an effective CI/CD pipeline. By automating toil (repetitive and manual tasks), teams can significantly reduce errors, speed up the development process, and ensure consistency across environments. Automation should extend across all stages of the pipeline, including code builds, testing, deployment, and even infrastructure provisioning.

In practice, this means using tools like Jenkins to automate the build and test processes, ensuring that every code commit triggers a series of automated actions, such as compiling the code, running tests, and generating artifacts. Deployment automation is equally important, with tools like Octopus providing re-usable automated deployment processes with sophisticated variables management to ensure the correct configuration is applied to different environments, machines, or tenants.

Beyond the core CI/CD processes, automation can also be applied to monitoring, alerting, and documentation. For instance, automated monitoring with tools like Prometheus can track the health and performance of applications post-deployment, while automated alerts ensure that issues are promptly addressed.

Build once, deploy multiple times

Once you’ve built a software version, you should use the same artifact across all environments to ensure consistency and reliability. Re-building the same code doesn’t result in the same output if something changes in the process, tools, or dependencies.

Build artifacts should be stored in an artifact repository, like JFrog Artifactory, and used for all deployments of that software version. This makes sure the exact artifact you validate throughout the CI/CD pipeline is the one that gets deployed to production.
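The idea can be sketched as one immutable artifact combined with settings that vary per environment. Everything named here (`Artifact`, `ENV_CONFIG`, `deployment_plan`, and the host names) is hypothetical and only illustrates keeping configuration outside the package.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Artifact:
    """An immutable reference to one built package."""
    name: str
    version: str


# Hypothetical per-environment settings kept outside the artifact.
ENV_CONFIG: Dict[str, Dict[str, str]] = {
    "test": {"db_host": "db.test.example.internal"},
    "production": {"db_host": "db.prod.example.internal"},
}


def deployment_plan(artifact: Artifact, environment: str) -> Dict[str, str]:
    """Combine the unchanged artifact with the target environment's settings."""
    config = ENV_CONFIG[environment]
    return {
        "package": f"{artifact.name}-{artifact.version}",
        "environment": environment,
        **config,
    }


artifact = Artifact("example-app", "1.4.2")
for env in ("test", "production"):
    print(deployment_plan(artifact, env))
```

Only the configuration differs between the two plans. The package reference is identical, so what was validated in test is what reaches production.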

Test early, test often

Implementing a “test early, test often” approach ensures that defects are detected and resolved as early as possible. Incorporating automated tests at various stages, including unit tests, integration tests, and acceptance tests, helps maintain high software quality. Unit tests validate individual components, integration tests ensure that different parts of the application work together, and acceptance tests verify the application against user requirements.

Tools like JUnit, Selenium, and Cucumber can automate these tests, providing continuous validation of the codebase. Continuous testing throughout the development lifecycle allows for early detection of issues, minimizing the cost and effort required for later-stage bug fixes. Test-driven development (TDD) and behavior-driven development (BDD) practices further enhance this approach by integrating testing into the development process.

Implement continuous feedback

Continuous feedback is crucial for maintaining a responsive and adaptive CI/CD pipeline. Automated feedback mechanisms, such as real-time alerts, dashboards, and reporting tools, help teams stay informed about the pipeline’s status and performance. Real user feedback makes sure the features being developed solve the intended problem.

Tools like Prometheus and Grafana can monitor the pipeline and provide real-time insights into its health and performance. This feedback loop enables quick identification and resolution of issues, fosters a culture of continuous improvement, and ensures that the software remains reliable and efficient.

Feedback mechanisms should also include detailed logging and traceability, allowing developers to diagnose and fix issues promptly. Collecting user feedback through automated surveys or usage analytics can further enhance the quality and relevance of the software.

Parallel and efficient builds

Optimizing build processes to run in parallel can significantly reduce the time required for builds and tests. Using parallel processing, where different parts of the codebase are built and tested simultaneously, helps speed up the pipeline. This can be achieved by splitting tests into smaller batches and running them on multiple agents or using distributed build systems.
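Splitting tests into batches for multiple agents can be sketched with a simple round-robin split. The `shard_tests` function and the test names are hypothetical; real test runners often balance batches by recorded test duration rather than by count.

```python
from typing import List


def shard_tests(tests: List[str], agent_count: int) -> List[List[str]]:
    """Distribute tests round-robin so each agent gets a similar number."""
    if agent_count < 1:
        raise ValueError("agent_count must be at least 1")
    ordered = sorted(tests)  # fixed order so every agent computes the same split
    return [ordered[i::agent_count] for i in range(agent_count)]


tests = ["test_cart", "test_login", "test_search", "test_checkout", "test_profile"]
for agent, batch in enumerate(shard_tests(tests, 2)):
    print(agent, batch)
```

Sorting first keeps the split deterministic, so each agent can compute its own batch from the same list without coordinating with the others.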

Efficient builds also involve minimizing dependencies, using caching mechanisms, and employing fast and scalable infrastructure. Tools like Bazel and Gradle can optimize build processes by reusing existing artifacts and only rebuilding what is necessary. Ensuring that the build pipeline is scalable and can handle increased workloads without degradation in performance is also critical for maintaining efficiency.

Clear documentation

Keeping up-to-date, clear, and discoverable documentation helps people understand how the CI/CD pipeline works. Because you don’t update the pipeline as often as you update code, it’s easy to forget how something works, or why a particular decision was made. You can use documentation to capture the pipeline architecture, configuration options, and troubleshooting steps to make it easier to make changes or fix problems later, as well as helping others to contribute.

The documentation doesn’t need to repeat details that are self-evident, but should instead help people navigate the components of the pipeline. Adequate documentation that is regularly updated is more valuable than comprehensive documentation that isn’t maintained. Tools like Confluence or GitHub Pages can reduce the documentation burden and help people find what they need.


CI/CD with Octopus

Octopus is a leading Continuous Delivery tool designed to manage complex deployments at scale. It enables software teams to streamline Continuous Delivery, accelerating value delivery. With over 4,000 organizations worldwide relying on our solutions for Continuous Delivery, GitOps, and release orchestration, Octopus ensures smooth software delivery across multi-cloud, Kubernetes, data centers, and hybrid environments, whether dealing with containerized applications or legacy systems.

We empower Platform Engineering teams to enhance the Developer Experience (DevEx) while upholding governance, risk, and compliance (GRC). Additionally, we are dedicated to supporting the developer community through contributions to open-source projects like Argo within the CNCF and other initiatives to advance software delivery and operations.
