What is cloud deployment?

Deploying applications in the cloud involves provisioning the necessary infrastructure, configuring environments, and deploying code using cloud-native or cloud-agnostic tools. Organizations can choose from various deployment methods, including virtual machines, containers, serverless computing, and managed platform-as-a-service (PaaS) solutions.

The deployment process is often automated using Continuous Integration/Continuous Deployment (CI/CD) pipelines to ensure fast, reliable releases. Cloud deployment also requires careful planning around scalability, security, and cost optimization.

Cloud providers offer auto-scaling features, identity and access management (IAM), and cost-monitoring tools to help manage these aspects. Infrastructure-as-code (IaC) tools help teams consistently define and deploy cloud infrastructure across multiple environments.
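Most IaC tools work by comparing a declared desired state with the resources that currently exist, then computing a plan of creates, updates, and deletes. The sketch below shows that idea in plain Python. The resource names and the `plan` function are hypothetical and do not reflect any specific tool's internals.

```python
from typing import Dict, List, Tuple

# Hypothetical resource model: resource name -> configuration attributes.
State = Dict[str, Dict[str, str]]


def plan(desired: State, actual: State) -> List[Tuple[str, str]]:
    """Return sorted (action, resource) pairs that move `actual` to `desired`."""
    actions: List[Tuple[str, str]] = []
    for name, config in desired.items():
        if name not in actual:
            actions.append(("create", name))
        elif actual[name] != config:
            actions.append(("update", name))
    for name in actual:
        if name not in desired:
            actions.append(("delete", name))
    return sorted(actions)


desired = {
    "web-vm": {"size": "medium", "region": "eu-west-1"},
    "db": {"engine": "postgres", "version": "16"},
}
actual = {
    "web-vm": {"size": "small", "region": "eu-west-1"},
    "old-cache": {"engine": "redis"},
}
print(plan(desired, actual))
```

Once the actions have been applied, `plan(desired, desired)` returns an empty list. This idempotence is what lets teams rerun the same definition safely against every environment.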

Deploying workloads in different cloud models

Public cloud

Applications in a public cloud are deployed on infrastructure owned and managed by cloud providers like AWS, Google Cloud, or Microsoft Azure. Deployment options include:

- Virtual machines (VMs): Applications are hosted on cloud-based virtual servers. Developers configure instances, install necessary software, and deploy applications as they would on physical servers.

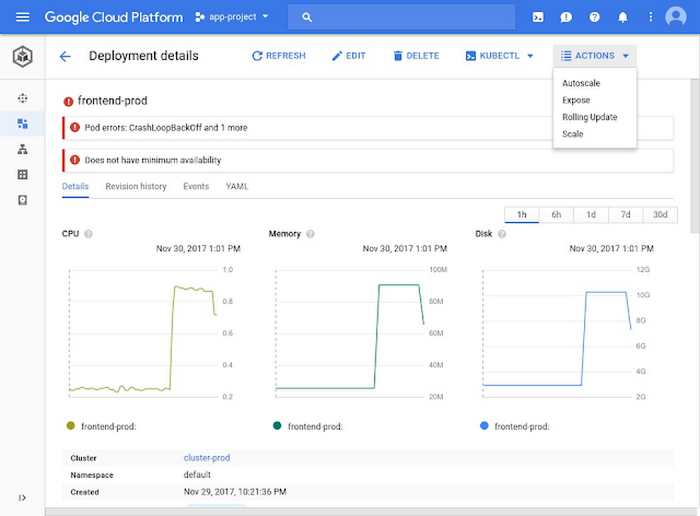

- Containers: Platforms like Kubernetes or Docker enable containerized deployments, allowing applications to run consistently across different environments. Cloud providers offer managed container services like AWS ECS, Google Kubernetes Engine, and Azure Kubernetes Service.

- Serverless computing: Applications are broken into functions executed on demand, using services like AWS Lambda, Azure Functions, or Google Cloud Functions. No server management is required, and resources automatically scale with usage.

- Managed PaaS solutions: Cloud providers offer fully managed environments for application deployment, such as AWS Elastic Beanstalk or Google App Engine, where developers focus only on code while the platform handles infrastructure.
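In the serverless model, the unit of deployment is a function that the platform invokes once per event. AWS Lambda's Python runtime calls a handler that receives an `event` and a `context` object. The sketch below assumes an API Gateway proxy-style event, and the greeting logic is purely illustrative.

```python
import json
from typing import Any, Dict


def handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """Entry point invoked by the platform once per event (illustrative logic)."""
    # Proxy-style events carry query parameters under this key; the value may be None.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}"}),
    }


if __name__ == "__main__":
    # Local smoke test; in the cloud, the platform supplies event and context.
    print(handler({"queryStringParameters": {"name": "Octopus"}}, None))
```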

Private cloud

A private cloud is dedicated to a single organization and hosted either on-premises or in a private data center. Deployment methods include:

- Virtual machines (VMs) with hypervisors: Organizations use hypervisors like VMware vSphere, Microsoft Hyper-V, or KVM to create and manage VMs, ensuring isolation and resource control.

- Containerization with private orchestration: Tools like Kubernetes or OpenShift allow deploying applications in containers within a private cloud, offering better resource use and scalability.

- Bare metal deployment: Applications are installed directly on dedicated servers without virtualization, maximizing performance but requiring manual configuration and management.

- Private PaaS solutions: Platforms like Cloud Foundry or OpenShift provide a managed environment for deploying applications without handling the underlying infrastructure.

Hybrid cloud

Hybrid cloud deployment integrates private and public cloud environments for flexibility and optimization. Common deployment approaches include:

- Multi-cloud Kubernetes orchestration: Kubernetes clusters span both private and public clouds, allowing applications to run seamlessly across environments. Services like Anthos or Azure Arc simplify hybrid Kubernetes management.

- Cloud bursting: Applications run primarily in a private cloud but scale out to a public cloud during peak demand. Load balancers and hybrid networking solutions manage traffic between clouds.

- Hybrid API gateways: APIs and microservices are deployed across both clouds, ensuring seamless integration using tools like AWS API Gateway, Azure API Management, or Kong.

- Data replication and syncing: Databases and storage systems replicate between private and public clouds to support high availability and disaster recovery. Services like AWS Storage Gateway or Azure File Sync facilitate this.
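At its simplest, the cloud-bursting decision is a capacity check. Requests go to the private cloud until it reaches a configured utilization ceiling, and any overflow goes to the public cloud. This is a simplified sketch. The `BurstRouter` class and the 80% threshold are hypothetical, the model does not track requests finishing, and real setups make this decision in load balancers or autoscalers.

```python
from dataclasses import dataclass


@dataclass
class BurstRouter:
    private_capacity: int          # max concurrent requests the private cloud handles
    burst_threshold: float = 0.8   # hypothetical utilization ceiling before bursting
    private_active: int = 0
    public_active: int = 0

    def route(self) -> str:
        """Assign one new request to 'private' or 'public'."""
        if self.private_active < self.private_capacity * self.burst_threshold:
            self.private_active += 1
            return "private"
        # Private cloud is at its ceiling: burst to the public cloud.
        self.public_active += 1
        return "public"


router = BurstRouter(private_capacity=10)
targets = [router.route() for _ in range(12)]
print(targets.count("private"), targets.count("public"))
```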

Community cloud

A community cloud is shared among multiple organizations with similar security, compliance, or operational requirements. Deployment methods include:

- Shared virtualized infrastructure: Organizations deploy applications on jointly managed VMs or hypervisors, using shared governance models for access control and security.

- Multi-tenant containers: Applications are deployed in containerized environments, with role-based access and security policies managed collectively among participants.

- Federated cloud services: Workloads are distributed across different community cloud providers, ensuring redundancy and compliance with industry regulations.

- Collaborative PaaS deployment: A shared platform-as-a-service environment allows organizations to develop and deploy applications while maintaining compliance with community policies.

Key cloud deployment strategies

Blue/green deployment

In the context of cloud applications, blue/green deployment ensures high availability and smooth updates by leveraging the scalability and flexibility of cloud platforms. Cloud environments, such as AWS or Azure, allow users to spin up identical infrastructure for both environments (blue and green) with minimal overhead.

Steps for cloud apps:

- Provision environments: Use infrastructure-as-code (e.g., AWS CloudFormation, Terraform) to create identical blue (current) and green (new) environments.

- Deploy new version: Deploy the updated application version to the green environment using container orchestration tools like Kubernetes or serverless platforms like AWS Lambda.

- Test and validate: Run functional and load tests against the green environment, typically orchestrated by a cloud-native CI/CD service (e.g., AWS CodePipeline or Azure DevOps).

- Switch traffic: Use cloud-native load balancers (e.g., Elastic Load Balancing) to reroute traffic from the blue to the green environment.

- Rollback if needed: If issues arise, switch traffic back to the blue environment with minimal disruption.
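The traffic switch and rollback steps amount to flipping a single pointer between two environments that both stay deployed. The sketch below models this with a hypothetical `BlueGreenRouter` class. In AWS, the equivalent pointer could be, for example, a load balancer listener that forwards to one target group or the other.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class BlueGreenRouter:
    """Hypothetical model: two always-deployed environments and one live pointer."""
    environments: Dict[str, str] = field(
        default_factory=lambda: {"blue": "v1", "green": "v1"}
    )
    live: str = "blue"

    @property
    def idle(self) -> str:
        return "green" if self.live == "blue" else "blue"

    def deploy_and_switch(self, version: str, healthy: Callable[[str], bool]) -> str:
        """Deploy to the idle environment and switch traffic only if it passes validation.

        Returns the version that is serving live traffic afterwards.
        """
        target = self.idle
        self.environments[target] = version
        if healthy(version):
            self.live = target  # the traffic switch is a single pointer update
        return self.environments[self.live]

    def rollback(self) -> str:
        """Send traffic back to the other environment, which still runs the old version."""
        self.live = self.idle
        return self.environments[self.live]


router = BlueGreenRouter()
print(router.deploy_and_switch("v2", healthy=lambda v: True))
print(router.rollback())
```

Here `rollback` is only meaningful after a successful switch, because it assumes the idle environment still holds the previous release. A failed validation never moves traffic in the first place.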

Advantages for cloud apps:

- Automation: Cloud platforms make it possible to automatically spin up green and blue environments and switch traffic between them.

- Minimal downtime: The blue/green pattern makes it possible to deploy new application versions with low risk of downtime or disruption.

- Global reach: The same blue/green pattern can be repeated in multiple regions, keeping latency low for users worldwide.

Canary deployment

For cloud applications, canary deployment leverages cloud-native monitoring and scaling features to test updates with a small subset of users before full rollout.

Steps for cloud apps:

- Initial deployment: Deploy the new version to a small percentage of instances (e.g., 5%) using a service like AWS App Runner or Azure App Service.

- Configure traffic splitting: Use cloud traffic management tools (e.g., AWS App Mesh, Google Traffic Director) to direct a fraction of user traffic to the canary instances.

- Monitor in real time: Use cloud-based observability tools like AWS CloudWatch or Azure Monitor to track latency, error rates, and other performance metrics.

- Expand gradually: Increase the percentage of traffic directed to the canary version based on performance metrics.

- Rollback if necessary: Use automated rollback triggers based on pre-defined error thresholds.
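The expand-gradually and automated-rollback steps can be expressed as a loop over traffic weights that aborts as soon as the observed error rate crosses a threshold. In this sketch, the weight schedule, the 2% threshold, and the `error_rate_at` callback are all assumptions. The callback stands in for a query to a monitoring service such as CloudWatch or Azure Monitor.

```python
from typing import Callable, List, Sequence, Tuple


def run_canary(
    error_rate_at: Callable[[int], float],
    steps: Sequence[int] = (5, 25, 50, 100),
    max_error_rate: float = 0.02,
) -> Tuple[str, List[int]]:
    """Shift traffic to the canary step by step; roll back if errors exceed the threshold.

    Returns the outcome and the traffic percentages that were applied, in order.
    """
    applied: List[int] = []
    for percent in steps:
        applied.append(percent)            # e.g. update load balancer weights here
        observed = error_rate_at(percent)  # e.g. query monitoring for this step
        if observed > max_error_rate:
            applied.append(0)              # automated rollback: all traffic to stable
            return "rolled_back", applied
    return "promoted", applied


print(run_canary(lambda p: 0.01))
print(run_canary(lambda p: 0.05 if p >= 50 else 0.01))
```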

Benefits for cloud apps:

- Granular control: Traffic routing is fine-tuned at the DNS or load balancer level.

- Integrated monitoring: Real-time metrics from cloud-native tools simplify troubleshooting.

- Serverless ready: Serverless platforms support canary releases natively. For example, AWS Lambda aliases can send a weighted percentage of invocations to a new function version.

Rolling deployment

For cloud applications, rolling deployment uses the inherent elasticity of cloud infrastructure to update instances incrementally while maintaining overall application availability.

Steps for cloud apps:

- Divide instances: Split the application instances managed by a cloud orchestration tool, such as Kubernetes or AWS Elastic Beanstalk, into batches.

- Incremental updates: Update one group of instances at a time, ensuring others remain active to handle traffic.

- Leverage auto-healing: Use features like Kubernetes health checks or AWS Auto Scaling to replace failing instances automatically.

- Monitor each phase: Continuously monitor performance using cloud-native logging and metrics services.

- Complete rollout: Repeat the process until all instances are updated.
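A rolling update processes instances in fixed-size batches and moves on only when the current batch passes its health check. The sketch below uses hypothetical instance names and a `health_check` callback. Orchestrators such as Kubernetes express the same idea declaratively through rolling-update settings like `maxUnavailable`.

```python
from typing import Callable, Dict, List


def rolling_update(
    instances: Dict[str, str],
    new_version: str,
    batch_size: int,
    health_check: Callable[[str], bool],
) -> List[List[str]]:
    """Update instances batch by batch, halting on the first failed health check.

    Returns the batches that were fully updated. Instances outside the current
    batch keep their existing version while the batch is being updated.
    """
    names = sorted(instances)
    completed: List[List[str]] = []
    for start in range(0, len(names), batch_size):
        batch = names[start:start + batch_size]
        previous = {name: instances[name] for name in batch}
        for name in batch:
            instances[name] = new_version
        if not all(health_check(name) for name in batch):
            instances.update(previous)  # revert only the failing batch
            break
        completed.append(batch)
    return completed


fleet = {f"web-{i}": "v1" for i in range(5)}
print(rolling_update(fleet, "v2", batch_size=2, health_check=lambda n: True))
print(fleet)
```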

Cloud-specific advantages:

- Scaling: Cloud platforms balance traffic across updated and non-updated instances.

- Fault tolerance: Redundancy built into cloud services reduces the risk of disruptions.

- Global consistency: Updates can be applied region by region for global applications.

A/B testing

For cloud applications, A/B testing provides a data-driven approach to evaluate new features or performance improvements by leveraging advanced cloud traffic routing and analytics tools.

Steps for cloud apps:

- Deploy variants: Deploy both the control (A) and experimental (B) versions in separate environments or instances.

- Set traffic distribution: Use cloud services like AWS Route 53 weighted routing or Google Cloud Load Balancing to allocate traffic between the two versions.

- Measure key metrics: Integrate with cloud analytics tools (e.g., AWS Amplify Analytics, Google BigQuery) to track user engagement, error rates, and response times.

- Analyze results: Apply statistical tests to determine whether differences between versions are statistically significant, using analytics or machine learning services (e.g., Amazon SageMaker) where the analysis is complex.

- Deploy winning version: Roll out the preferred version globally.
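The analyze-results step is, at its core, a significance test. For a conversion-style metric, a two-proportion z-test is one common choice, although other metrics may call for other tests. The sketch below uses only the Python standard library, and the visitor and conversion counts in the usage example are made up for illustration.

```python
import math
from typing import Tuple


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> Tuple[float, float]:
    """Return (z, two-sided p-value) for the difference between two conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # shared rate under the null hypothesis
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Standard normal CDF: Phi(x) = 0.5 * (1 + erf(x / sqrt(2))).
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    return z, p_value


# Hypothetical counts: variant B converts at 5.5% versus 5.0% for control A.
z, p = two_proportion_z_test(conv_a=500, n_a=10_000, conv_b=550, n_b=10_000)
print(f"z={z:.2f}, p={p:.3f}")
```

With these counts, p is roughly 0.11, so the 0.5-point lift would not be significant at the conventional 0.05 level. The test would need more traffic before a winner could be declared.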

Cloud-specific features:

- Real-time insights: Cloud analytics provide near-real-time feedback on user behavior.

- Scalable testing: Run tests at scale across diverse regions and device types.

- Integration with CI/CD: Cloud-native CI/CD pipelines automate the testing and deployment process.

Deploying applications on popular cloud platforms: Built-in cloud provider services

The major cloud providers offer multiple services that enable deployment of applications—either directly, using a serverless model, or within containers. Let’s review the primary deployment services in AWS, Azure, and Google Cloud.

Amazon Web Services

AWS offers a suite of services to support application deployment for use cases ranging from traditional applications to serverless architectures.

Key services for deployment:

- Elastic Beanstalk: A Platform-as-a-Service (PaaS) solution that simplifies application deployment by managing infrastructure provisioning, load balancing, and scaling. It supports multiple languages, including Java, .NET, PHP, Python, and Node.js.

- AWS Lambda: Suitable for serverless applications, Lambda enables deployment of code without provisioning or managing servers. Code is triggered by events, such as API requests or changes in data.

- ECS and EKS: Amazon Elastic Container Service (ECS) and Elastic Kubernetes Service (EKS) are container orchestration tools for deploying, managing, and scaling containerized applications.

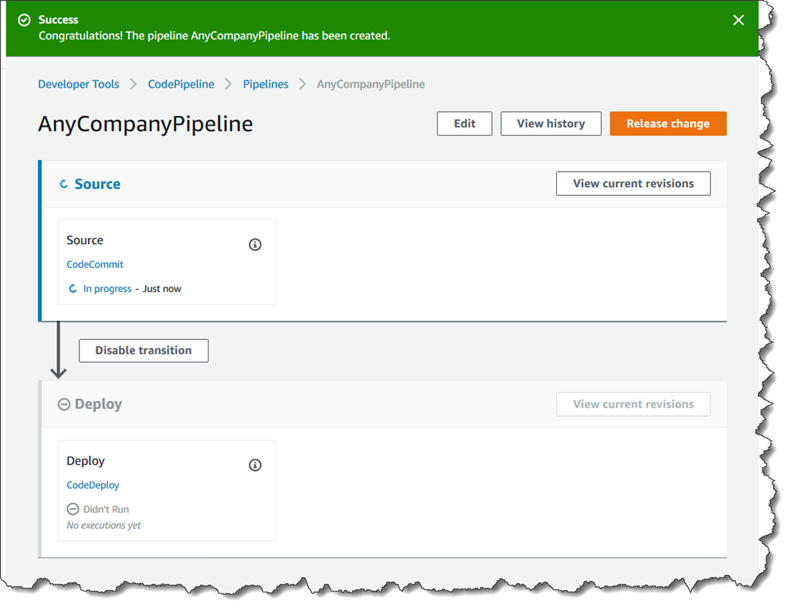

- AWS CodePipeline: An automated CI/CD service that integrates with other AWS tools, enabling seamless application build, test, and deployment workflows.

Source: Amazon

Microsoft Azure

Microsoft Azure provides an ecosystem for deploying and managing applications across diverse environments, including web apps, containers, and serverless computing.

Key services for deployment:

- App Service: A fully managed platform for building and hosting web apps, mobile backends, and RESTful APIs in multiple programming languages.

- Azure Kubernetes Service (AKS): A managed Kubernetes service that simplifies container orchestration and scaling.

- Azure Functions: Serverless computing for deploying event-driven applications without managing servers.

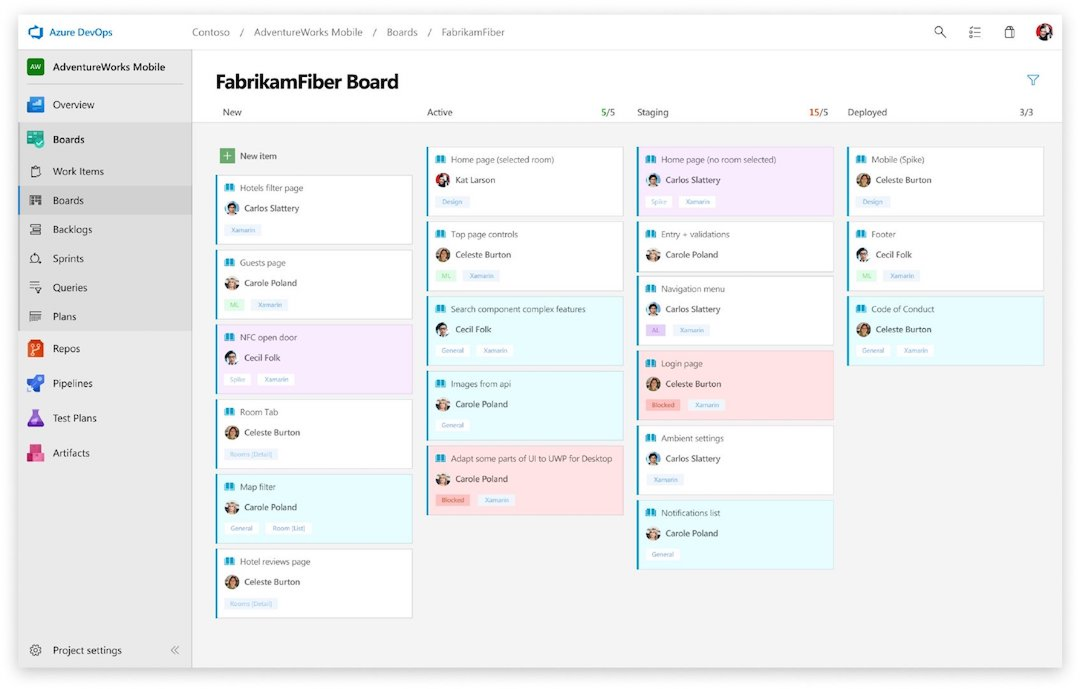

- Azure DevOps: An integrated CI/CD platform for building, testing, and deploying applications.

Source: Microsoft

Google Cloud

Google Cloud supports deploying scalable, high-performance applications by leveraging its expertise in distributed systems and AI.

Key services for deployment:

- App Engine: A fully managed PaaS that supports automatic scaling and common programming languages like Java, Python, and Go.

- Google Kubernetes Engine (GKE): A managed Kubernetes platform optimized for deploying containerized applications.

- Cloud Functions: A serverless platform for lightweight, event-driven applications.

- Cloud Build: A CI/CD service that automates builds, tests, and deployments directly on GCP.

Source: Google Cloud

Cloud-agnostic deployment tools

In addition to built-in services offered by the cloud providers, there are multiple cloud-agnostic tools that enable application deployment across multiple cloud platforms. This allows organizations to avoid vendor lock-in and maintain flexibility. These tools are especially useful for hybrid or multi-cloud strategies.

Here are a few widely used cloud-agnostic deployment tools:

- Octopus Deploy: A deployment automation tool that supports multi-cloud, hybrid, and on-premises environments. Offers blue/green and canary deployments, RBAC security, and CI/CD pipeline integration.

- Codefresh: A Kubernetes-native CI/CD platform with GitOps support, parallel pipelines, and deep integrations with Docker, Helm, and Argo CD. Optimized for containerized application deployment.

- Terraform: Developed by HashiCorp, Terraform is an Infrastructure-as-Code (IaC) tool, source-available under the Business Source License since 2023, that supports the provisioning and management of resources on various cloud platforms, including AWS, Azure, and Google Cloud. With its declarative configuration language, users can define infrastructure and deploy it consistently across environments.

- Ansible: Ansible is an open-source automation tool for configuration management, application deployment, and orchestration. It supports deployment across multiple clouds using simple, YAML-based playbooks to define tasks. Its agentless architecture makes it easy to manage hybrid cloud setups.

- Kubernetes: Kubernetes is a container orchestration platform that runs on any cloud or on-premises infrastructure. By abstracting the underlying infrastructure, Kubernetes provides a unified way to manage, deploy, and scale containerized applications.

- Docker: Docker enables the creation and deployment of containerized applications. While it is not specific to any single cloud provider, Docker allows applications to run consistently across various cloud environments by packaging them with their dependencies.

5 tips for effective cloud deployments

Here are some of the ways that organizations can ensure successful deployment in the cloud.

1. Use Continuous Deployment pipelines

Implementing Continuous Deployment pipelines automates the process of building, testing, and deploying applications. This reduces human intervention, minimizes errors, and accelerates release cycles.

Cloud platforms offer services like Azure DevOps and Google Cloud Build to streamline CD workflows. These services integrate with version control systems (e.g., GitHub, GitLab) and testing frameworks to ensure code quality before deployment.

To maximize efficiency, teams should adopt infrastructure-as-code (IaC) tools like Terraform or AWS CloudFormation to manage infrastructure changes as part of the deployment pipeline. Monitoring and rollback mechanisms should also be included to detect failures and revert to stable versions when needed.
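A pipeline like this can be sketched as an ordered list of stages that stops at the first failure and, if the failure happens after deployment, reverts to the last stable release. The stage names and the `run_pipeline` function are hypothetical. Real pipelines in Azure DevOps, Google Cloud Build, or AWS CodePipeline are typically defined in each service's own configuration format rather than in Python.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a callable that returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage], deploy_stage: str) -> Tuple[str, List[str]]:
    """Run stages in order and stop at the first failure.

    If a stage after `deploy_stage` fails (e.g. post-deploy monitoring),
    record a rollback to the last stable release.
    """
    log: List[str] = []
    deployed = False
    for name, step in stages:
        ok = step()
        log.append(f"{name}: {'ok' if ok else 'failed'}")
        if not ok:
            if deployed:
                log.append("rollback: restored last stable release")
                return "rolled_back", log
            return "failed", log
        if name == deploy_stage:
            deployed = True
    return "succeeded", log


stages = [
    ("build", lambda: True),
    ("test", lambda: True),
    ("deploy", lambda: True),
    ("monitor", lambda: False),  # simulate an error spike after release
]
status, log = run_pipeline(stages, deploy_stage="deploy")
print(status)
print("\n".join(log))
```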

2. Use blue/green or canary deployments

Using blue/green or canary deployment strategies reduces downtime and mitigates risk when deploying updates.

- Blue/green deployment: Two identical environments (blue and green) are maintained, with the active version running in blue. Updates are deployed to green and tested before traffic is switched over. Cloud load balancers and traffic managers (e.g., AWS ALB, Azure Traffic Manager) facilitate this transition.

- Canary deployment: A small percentage of users are routed to the new version first. Performance is monitored using cloud-native observability tools (e.g., AWS CloudWatch, Azure Monitor). If no issues arise, traffic is gradually increased until full deployment is achieved.

3. Region and zone selection

Selecting the appropriate region and zone is essential for optimizing performance and regulatory compliance. Cloud providers offer numerous regional data centers, enabling organizations to reduce latency and improve user experiences by locating resources closer to users. Compliance with data sovereignty laws also requires careful region selection.

Evaluating pricing, service availability, and geopolitical factors in each region supports informed decision-making. Organizations should balance cost considerations with performance objectives. Deploying across multiple availability zones within a region adds resilience against the failure of a single zone.
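One way to make this trade-off explicit is to filter regions by hard constraints (data residency, required services) and then rank the remaining candidates by latency and cost. The region names, latency figures, prices, and weighting below are hypothetical placeholders, not real provider data.

```python
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass
class Region:
    name: str
    jurisdiction: str    # where data physically resides
    latency_ms: float    # measured from the main user base
    hourly_cost: float   # relative price for the planned footprint
    services: Set[str]   # services available in this region


def pick_region(
    regions: List[Region],
    allowed_jurisdictions: Set[str],
    required_services: Set[str],
    cost_weight: float = 10.0,  # hypothetical: ms of latency traded per unit of cost
) -> Optional[Region]:
    """Apply hard constraints first, then minimize a weighted latency/cost score."""
    candidates = [
        r for r in regions
        if r.jurisdiction in allowed_jurisdictions and required_services <= r.services
    ]
    if not candidates:
        return None
    return min(candidates, key=lambda r: r.latency_ms + cost_weight * r.hourly_cost)


regions = [
    Region("eu-region-1", "EU", 25, 1.2, {"k8s", "sql"}),
    Region("eu-region-2", "EU", 30, 0.5, {"k8s"}),
    Region("us-region-1", "US", 110, 0.8, {"k8s", "sql"}),
]
best = pick_region(regions, allowed_jurisdictions={"EU"}, required_services={"k8s", "sql"})
print(best.name if best else "no compliant region")
```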

4. Implement zero-downtime deployment strategies

Zero-downtime deployments ensure users experience no service disruption when updates are pushed to production.

Cloud-native tools like Kubernetes rolling updates, AWS Elastic Beanstalk immutable updates, and Azure App Service deployment slots allow application instances to be replaced without downtime. Database migrations should be handled carefully using techniques like feature flags, schema versioning, and blue/green database deployments to prevent inconsistencies.

Using feature toggles (e.g., LaunchDarkly) allows teams to turn new features on and off without redeploying code, further reducing risks associated with releases.
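Feature flag services typically decide per user whether a flag is on, often by hashing a stable user key into a bucket so that each user gets a consistent experience across requests. The sketch below shows this bucketing idea using the standard library. It is not LaunchDarkly's SDK or algorithm, and the flag and user names are hypothetical.

```python
import hashlib


def is_enabled(flag: str, user_id: str, rollout_percent: int) -> bool:
    """Deterministically place a user in a 0-99 bucket for this flag."""
    digest = hashlib.sha256(f"{flag}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 100
    return bucket < rollout_percent


users = [f"user-{i}" for i in range(1000)]
enabled = sum(is_enabled("new-checkout", u, 20) for u in users)
print(f"{enabled} of {len(users)} users see the new checkout")
```

Because a user's bucket never changes, raising `rollout_percent` only adds users to the enabled group, and setting it back to 0 turns the feature off without a redeploy.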

5. Containerize for portability and scalability

Containerization improves application portability and simplifies cloud deployments by encapsulating dependencies and runtime configurations.

Tools like Docker and Kubernetes enable applications to run consistently across different cloud environments. Kubernetes orchestration (e.g., GKE, AKS, or EKS) automates scaling, networking, and self-healing.

Containerized workloads can be deployed using managed services like AWS Fargate, Azure Container Apps, or Google Cloud Run for serverless execution, reducing operational overhead. Solutions like Anthos, OpenShift, or HashiCorp Nomad ensure consistent management across platforms for hybrid or multi-cloud deployments.

Automating cloud deployments end-to-end with Octopus

Octopus handles complex deployments at scale. You can capture your deployment process, apply different configurations, and automate the steps to deploy a new software version, upgrade a database, or perform operations tasks.

With Octopus, you can manage all your deployments across multiple public, private, on-premises, or hybrid clouds. You can use Octopus for modern cloud-native applications and traditional deployment to virtual machines. This means you can see the state of all your deployments in one place and use the same tools to deploy all your applications and services.

