What is Kubernetes CI/CD?

Kubernetes CI/CD refers to the integration of Continuous Integration (CI) and Continuous Deployment (CD) practices within a Kubernetes environment. CI/CD pipelines automate application delivery, from code change to deployment in a production environment. With Kubernetes as the orchestrator, CI/CD pipelines can manage scaling, perform zero-downtime deployments, and carry out rolling updates or rollbacks. This makes application releases more reliable and consistent.

In a Kubernetes CI/CD setup, each stage is optimized to take advantage of Kubernetes features such as container orchestration, service discovery, and self-healing. Kubernetes automates the deployment, scaling, and management of containerized applications, which simplifies the CI/CD process. These capabilities allow teams to continuously deliver and improve their applications with less manual intervention and greater speed.

Benefits of Kubernetes for your CI/CD pipeline

Kubernetes brings several benefits to CI/CD pipelines. First, it ensures scalability. Kubernetes automates scaling tasks based on demand, reducing manual intervention. This means as your application scales up or down, Kubernetes handles it smoothly, ensuring that your pipeline remains efficient regardless of load.
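As a rough illustration of how demand-based scaling is decided, the sketch below applies the replica formula documented for the Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). The function name, the default bounds, and the sample numbers are assumptions made for this example. The 10% tolerance mirrors the autoscaler's documented default.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Replica count suggested by the Horizontal Pod Autoscaler formula."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current replica count
        wanted = current_replicas
    else:
        wanted = math.ceil(current_replicas * ratio)
    # Never go outside the configured bounds
    return max(min_replicas, min(max_replicas, wanted))


# 4 pods averaging 90% CPU against a 60% target
print(desired_replicas(4, 90, 60))  # -> 6
```

In a real cluster this calculation is performed by the autoscaler itself. The sketch is only meant to show why load above the target adds pods and load below it removes them.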

Second, Kubernetes offers high availability and fault tolerance. If a node fails, Kubernetes reschedules the affected pods onto healthy nodes, so an application running multiple replicas can stay available. This increases the reliability of your CI/CD pipeline and makes your deployments more resilient. Other benefits include improved resource use and the ability to integrate with other tools and services, boosting overall productivity.

Stages of Kubernetes app delivery

The Kubernetes app delivery pipeline consists of multiple stages: code, build, test, and deploy. Each stage plays a crucial role in ensuring that the application is delivered reliably and consistently. By breaking down the delivery process into these stages, teams can isolate and address issues at each step, improving the overall quality of the deployment.

Using Kubernetes for app delivery allows each stage to leverage Kubernetes-native features such as automated deployment, service discovery, and monitoring. This integration simplifies pipeline management and increases the efficiency and speed of the delivery process.

Code

The coding stage is the initial phase where developers write the application’s code. Within a Kubernetes CI/CD environment, code changes trigger pipeline runs. Source code repositories like GitLab, GitHub, and Bitbucket are often used for version control and collaboration. These repositories integrate with CI/CD tools, through webhooks or built-in pipelines, to initiate builds and deployments to Kubernetes automatically when new code is committed.

Effective code management practices, such as reviewing and merging code through pull requests, are vital in this stage. This process ensures that only vetted code progresses further in the pipeline. Kubernetes-aware IDE tooling can further streamline the coding process, making it easier for developers to commit high-quality code that is ready for the pipeline.

Build

The build stage transforms the application code into executable artifacts. In Kubernetes CI/CD, this usually means creating container images using Docker or similar technologies. These images are stored in container registries like Docker Hub or Google Artifact Registry for later use. Kubernetes then pulls these images to run the application's pods.

Automating the build process is crucial. Tools like Jenkins, CircleCI, and GitHub Actions integrate with Kubernetes to automate building and pushing container images. This reduces the risk of human error and speeds up the pipeline. Automated builds also ensure that artifacts are built consistently every time, adhering to the same standards and configurations.

Test

Testing within the Kubernetes CI/CD pipeline involves validating the application to ensure it functions as expected. This can include unit tests, integration tests, and end-to-end tests. Kubernetes facilitates automated testing by spinning up isolated test environments using namespaces. These environments can easily be created and torn down as needed for testing purposes.
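To make the idea of a throwaway test environment concrete, here is a minimal sketch that builds a Namespace manifest for a single pipeline run. The `ci-` prefix, the `purpose` label, and the branch and run values are hypothetical choices for this example. The only Kubernetes rule relied on is that namespace names must be valid DNS labels of at most 63 characters.

```python
import json
import re


def build_test_namespace(branch: str, run_id: int) -> dict:
    """Build a Namespace manifest for one pipeline run."""
    # Reduce the branch name to lowercase alphanumerics and dashes
    slug = re.sub(r"[^a-z0-9]+", "-", branch.lower()).strip("-")
    suffix = f"-{run_id}"
    # Trim the prefix part so the full name fits in 63 characters
    name = f"ci-{slug}"[: 63 - len(suffix)].rstrip("-") + suffix
    return {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {"name": name, "labels": {"purpose": "ci-test"}},
    }


manifest = build_test_namespace("feature/Login_Page", 42)
print(json.dumps(manifest, indent=2))
```

A pipeline could pipe this JSON to `kubectl apply -f -` before the tests and run `kubectl delete namespace <name>` afterwards, which removes everything created inside the namespace.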

Tools like Helm make test automation more efficient by deploying complex applications and their dependencies into a test environment. Because Kubernetes can create consistent, reproducible test environments, tests run under the same conditions every time. This makes test results more reliable and issues easier to identify and resolve.

Deploy

The deploy stage involves moving tested application artifacts to the production environment. Kubernetes CI/CD pipelines rely on Kubernetes workload controllers, such as Deployments and StatefulSets, to manage this step. These controllers support rolling updates with zero downtime, as well as rollbacks if issues are detected after deployment.
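The following sketch shows what a zero-downtime rolling update can look like in a Deployment manifest, generated here as JSON (which `kubectl apply -f` accepts alongside YAML). The application name, image reference, probe path, and port are placeholders for illustration, not values from any real system.

```python
import json


def rolling_deployment(name: str, image: str, replicas: int = 3) -> dict:
    """Deployment that replaces pods one at a time without losing capacity."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "strategy": {
                "type": "RollingUpdate",
                # Start one extra pod first, and never drop below `replicas`
                "rollingUpdate": {"maxUnavailable": 0, "maxSurge": 1},
            },
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        # Hypothetical health endpoint; new pods receive
                        # traffic only after this probe succeeds
                        "readinessProbe": {
                            "httpGet": {"path": "/healthz", "port": 8080}
                        },
                    }]
                },
            },
        },
    }


print(json.dumps(rolling_deployment("web", "registry.example.com/web:1.4.2"), indent=2))
```

If a release misbehaves after rollout, `kubectl rollout undo deployment/web` returns the Deployment to its previous revision.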

Tools like Argo CD and Helm simplify and enhance the deployment process. Teams can also add service meshes and operators to their clusters to improve deployment efficiency and reliability. With automated Continuous Delivery tools like Octopus, teams can manage progressive deployment, gradually promoting releases from development environments to testing, staging, and production, while running the appropriate tests at every stage of the process.

Common techniques for Kubernetes deployment

GitOps

GitOps is a Kubernetes deployment technique that leverages Git repositories as the single source of truth for application and infrastructure configurations. In a GitOps model, configuration changes are pushed to a Git repository, ideally via an automated Continuous Delivery pipeline, which triggers automated deployment processes. Tools like Argo CD and Flux monitor Git repositories and automatically apply changes to Kubernetes clusters, ensuring that the live environment always matches the desired state defined in Git.

This approach brings several advantages, including improved version control, transparency, and auditability. Since all configurations are stored and managed in Git, teams can track changes, roll back to previous states, and collaborate more effectively. GitOps also enhances security by enabling controlled access to production environments through Git pull requests, reducing the need for direct access to the Kubernetes cluster.
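The reconciliation idea behind GitOps tools can be sketched as a comparison between two sets of manifests: the desired state read from Git and the live state read from the cluster. This is a simplified illustration rather than how Argo CD or Flux are implemented; the function name, the `Kind/name` keys, and the sample data are assumptions.

```python
def plan_sync(desired: dict, live: dict, prune: bool = True) -> list:
    """List the actions needed to make the live state match Git."""
    actions = []
    for key, manifest in sorted(desired.items()):
        if key not in live:
            actions.append(("create", key))
        elif live[key] != manifest:
            # Drift: the cluster differs from what Git declares
            actions.append(("update", key))
    if prune:
        # Resources no longer declared in Git are removed
        for key in sorted(set(live) - set(desired)):
            actions.append(("delete", key))
    return actions


git_state = {
    "Deployment/web": {"image": "web:1.4.2", "replicas": 3},
    "Service/web": {"port": 80},
}
cluster_state = {
    "Deployment/web": {"image": "web:1.4.1", "replicas": 3},
    "ConfigMap/old-flags": {"beta": "true"},
}
for action in plan_sync(git_state, cluster_state):
    print(action)
```

Rolling back then amounts to reverting the Git commit: the next comparison produces the actions that restore the earlier state.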

Helm

Helm is a Kubernetes package manager that simplifies deployment and management of applications by using Helm charts. A Helm chart is a collection of files that define the necessary components, configurations, and dependencies for an application, packaged in a way that makes it easy to deploy on Kubernetes. By using Helm charts, teams can install and update applications with a single command, enabling streamlined and consistent deployments across different environments. Helm charts also support configuration customization, which allows applications to be deployed with environment-specific settings. Additionally, Helm handles versioning of releases, making it easy to roll back to a previous version if needed, supporting an end-to-end CI/CD process.

Helm allows configuration templating, so one Helm chart can be used for multiple microservices. It also enables a separation of concerns: one team, for example a platform team, can manage the Helm chart, while another team, such as an application team, can manage values files or application-specific Helm charts referencing the template. This makes it easier to manage multiple applications with Helm.
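The split between a shared chart and per-environment values files can be pictured as a recursive merge: values supplied for an environment override the chart's defaults key by key, while untouched defaults are kept. The sketch below imitates that behavior for nested maps only. It is not Helm's own code, and the value names are made up for the example.

```python
def merge_values(base: dict, override: dict) -> dict:
    """Overlay environment-specific values on chart defaults."""
    merged = dict(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            # Nested maps are merged key by key
            merged[key] = merge_values(merged[key], value)
        else:
            # Scalars and lists are replaced outright
            merged[key] = value
    return merged


# Defaults maintained by a platform team (hypothetical values)
chart_defaults = {
    "replicaCount": 1,
    "image": {"repository": "example/web", "tag": "1.0.0"},
    "resources": {"limits": {"cpu": "250m"}},
}
# Overrides maintained by an application team for production
production = {"replicaCount": 3, "image": {"tag": "1.4.2"}}

print(merge_values(chart_defaults, production))
```

With the Helm CLI, the equivalent is passing an extra values file, for example `helm upgrade --install web ./chart -f values-production.yaml`, where the chart path and file name are placeholders.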

Replicable environments with IaC

Infrastructure as Code (IaC) is a technique for managing and provisioning Kubernetes environments using code, allowing for consistent and replicable environments across development, testing, and production. Using provisioning tools like Terraform, or Kubernetes-native configuration tools like Kustomize, teams can define infrastructure configurations in code, which can be versioned and reused across environments.

IaC simplifies creating new environments, enabling developers to provision identical environments with minimal manual effort. This consistency reduces discrepancies between development and production, leading to more predictable deployments and easier troubleshooting. Additionally, IaC promotes collaboration, as configuration files can be reviewed, tested, and managed in version control systems.

Notable tools for CI/CD with Kubernetes

1. Octopus

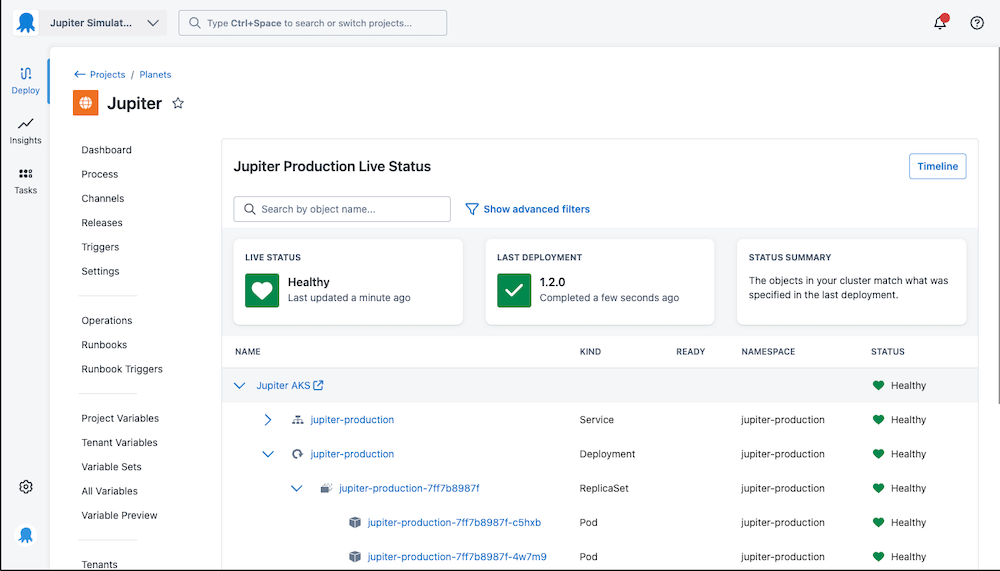

Octopus Deploy is a comprehensive Continuous Delivery platform designed to automate complex deployment scenarios for all types of applications. It allows teams to model scenarios like change management, multi-cluster deployments, post-deployment tests, and notifications and, at the same time, follow best practices for Kubernetes deployments.

Key features include:

- Declarative approach: With Octopus Deploy, you can manage your configurations in Git, including Kubernetes manifests, variables, and pipeline configurations.

- Advanced CD engine: Octopus Deploy simplifies modeling complex deployment scenarios across multiple environments, with various promotion flows and comprehensive promotion rules.

- Kubernetes agent: Access Kubernetes clusters securely with an agent running inside a cluster. The agent establishes a secure tunnel with the Octopus Server and executes deployments within the cluster.

- Live status, events, and logs for applications deployed to Kubernetes: Octopus offers real-time information about applications deployed on Kubernetes and provides tools for troubleshooting them.

- Deployment automation: Octopus Deploy can monitor your container image or Helm chart repositories and Git to create new releases and automatically start deployments.

- Enterprise compliance and security: Octopus Deploy features a detailed permission control system, auditing, integrations with change management tools, and certifications, making it easier to satisfy enterprise compliance requirements within your Continuous Deployment pipelines.

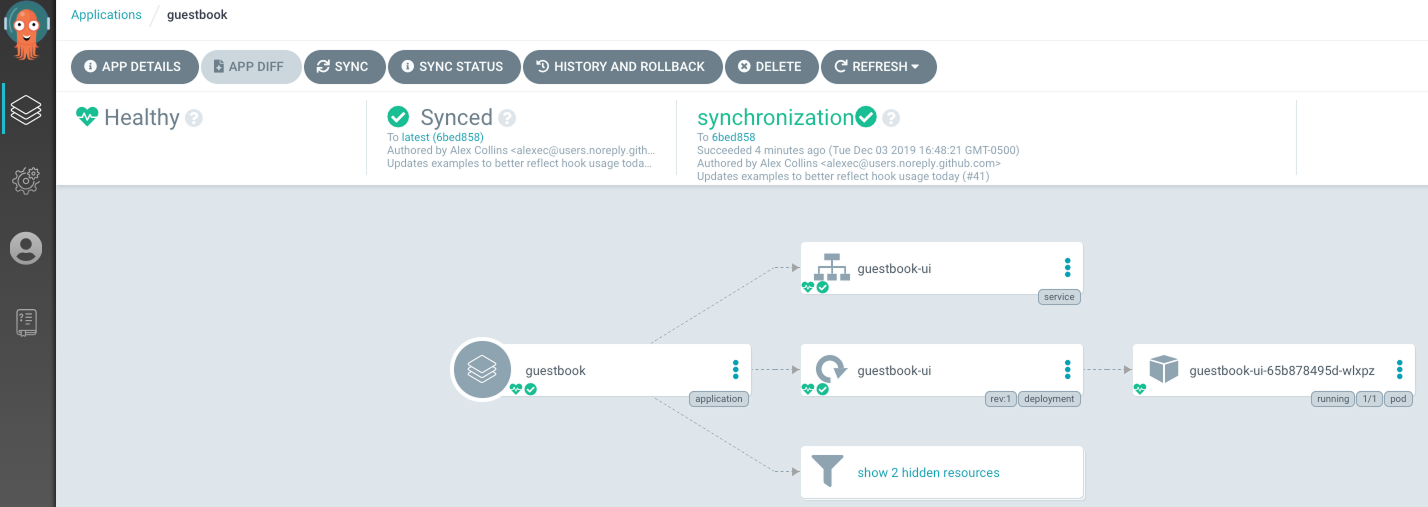

2. Argo CD

Argo CD is a declarative GitOps platform that enables deployment and lifecycle management of Kubernetes applications. It ensures that the live state of your applications matches the desired state defined in your Git repositories. This alignment reduces configuration drift and promotes consistency. However, Argo CD must be combined with additional tools to achieve full Continuous Delivery in Kubernetes.

Key features include:

- Declarative GitOps approach: Argo CD treats Git repositories as the single source of truth for the desired application state. This ensures that any changes made to the Git repository are reflected in the Kubernetes cluster.

- Automated synchronization: The tool continuously monitors the live state of applications and synchronizes them with the desired state defined in the Git repository. This can be set to either automatic or manual synchronization.

- Multi-cluster support: Argo CD can manage and deploy applications across multiple Kubernetes clusters, making it ideal for organizations with complex environments.

- RBAC and SSO integration: It supports role-based access control (RBAC) and integrates with single sign-on (SSO) providers, ensuring secure access management.

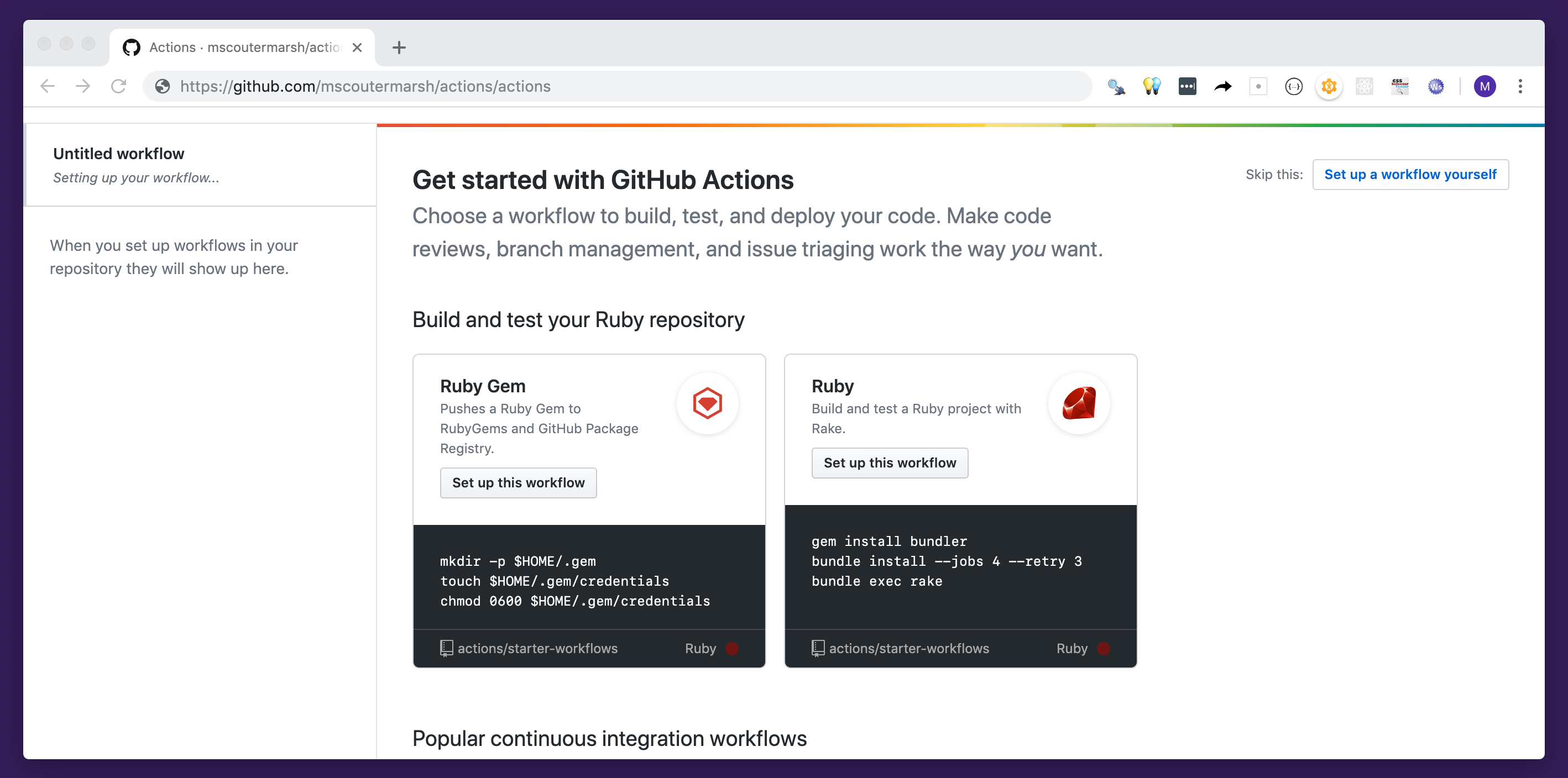

3. GitHub Actions

GitHub Actions is an automation tool that allows you to create custom workflows directly from your GitHub repositories. It supports deployment to Kubernetes environments.

Key features include:

- Workflow automation: GitHub Actions enables you to automate the entire software development lifecycle, including build, test, and deployment workflows.

- Containerized runners: It supports the use of custom containerized runners, providing isolated environments to run your workflows. This is particularly useful for Kubernetes, as it ensures consistency across different stages.

- Event-driven: Workflows can be triggered by various GitHub events such as code pushes, pull requests, and issue creation. This ensures that your CI/CD pipelines are always in sync with your repository activities.

- Integration with Kubernetes: GitHub Actions provides integration with Kubernetes, allowing you to deploy applications to your clusters directly from your workflows.

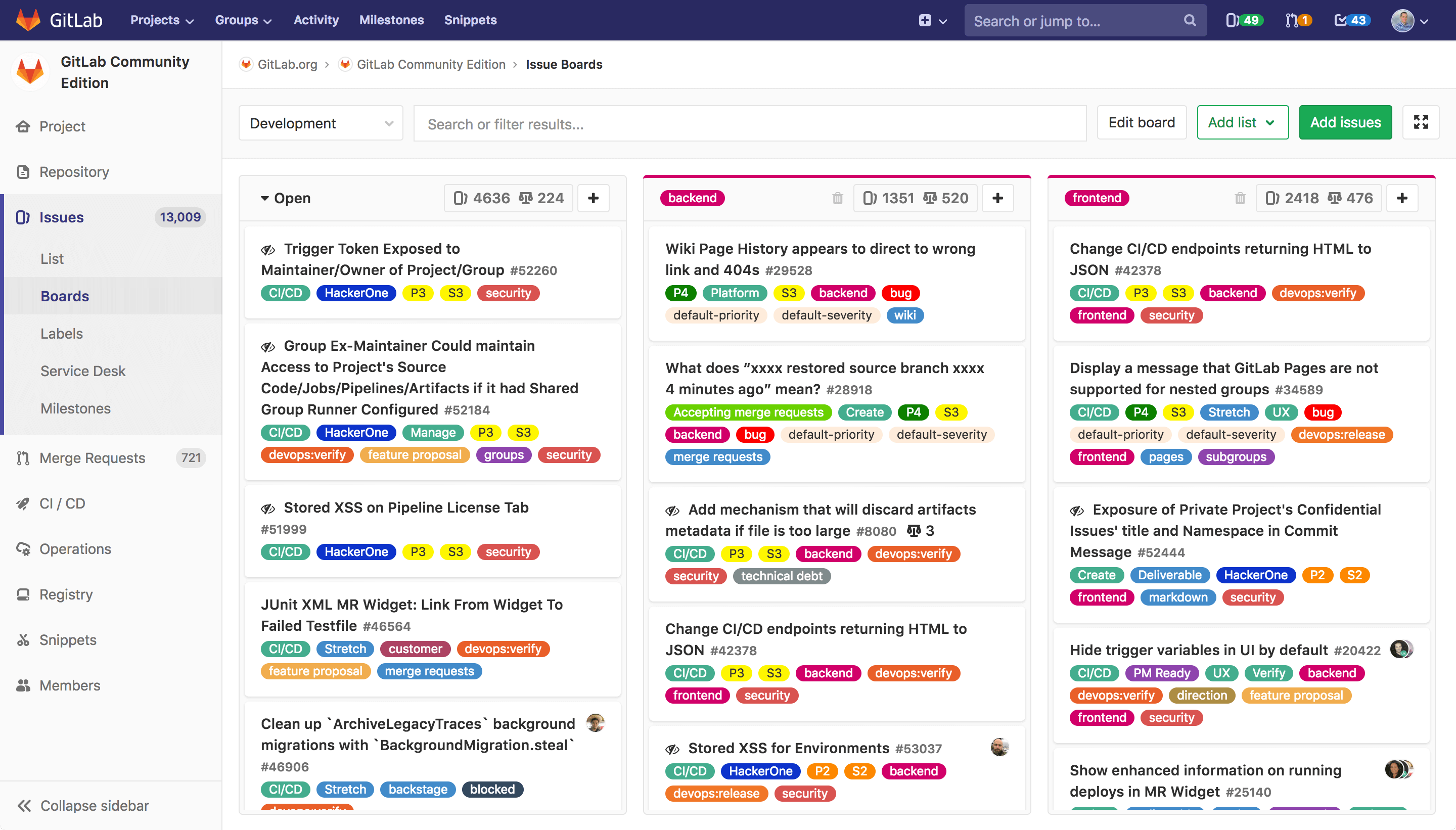

4. GitLab CI/CD

GitLab is a DevOps platform that integrates source control, Continuous Integration, Continuous Delivery, and project management. Like GitHub Actions, its CI/CD capabilities are compatible with Kubernetes, making it a popular choice for managing complex deployments.

Key features include:

- Integrated CI/CD: GitLab offers built-in CI/CD pipelines that support Docker and Kubernetes. This integration ensures that you can build, test, and deploy your applications within the same platform.

- Auto DevOps: GitLab’s Auto DevOps feature automates the setup of CI/CD pipelines with best practices and Kubernetes deployment configurations. This reduces the time and effort required to get started.

- Container registry: The platform includes a built-in container registry for storing and managing Docker images. This registry is integrated with GitLab CI/CD, streamlining the build and deployment process.

- Kubernetes integration: GitLab integrates with Kubernetes clusters, allowing for straightforward deployment and management of containerized applications.

Best practices for CI/CD and Kubernetes

Here are a few best practices that can help your team effectively practice CI/CD in a cloud-native environment.

Define operational/security boundaries and environments

Establishing clear operational and security boundaries in your CI/CD pipelines ensures that deployments remain secure and compliant. Set up separate Kubernetes namespaces or clusters for each environment (e.g., development, staging, production) to isolate resources and limit access based on role or team responsibility. Use role-based access control (RBAC) to assign permissions granularly, preventing unauthorized access to production environments.
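As a sketch of granular, per-namespace permissions, the snippet below generates a Role and a RoleBinding that give one group read-only access inside a single namespace. The namespace, group, and role names are hypothetical. The manifest fields follow the `rbac.authorization.k8s.io/v1` API.

```python
import json


def read_only_access(namespace: str, group: str) -> list:
    """Role and RoleBinding granting a group read-only access to one namespace."""
    role = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"name": "read-only", "namespace": namespace},
        "rules": [{
            # "" is the core API group (pods); "apps" covers deployments
            "apiGroups": ["", "apps"],
            "resources": ["pods", "pods/log", "deployments"],
            "verbs": ["get", "list", "watch"],
        }],
    }
    binding = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"name": "read-only", "namespace": namespace},
        "subjects": [{
            "kind": "Group",
            "name": group,
            "apiGroup": "rbac.authorization.k8s.io",
        }],
        "roleRef": {
            "kind": "Role",
            "name": "read-only",
            "apiGroup": "rbac.authorization.k8s.io",
        },
    }
    return [role, binding]


for manifest in read_only_access("production", "app-developers"):
    print(json.dumps(manifest, indent=2))
```

Because a Role is scoped to its namespace, the same group can hold broader permissions in a development namespace without gaining any write access to production.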

Network policies, secrets management, and encrypted communications should also be configured to protect sensitive data and resources. By defining strict boundaries and security protocols, teams can reduce the risk of accidental cross-environment access and ensure compliance with organizational or regulatory standards.
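One common starting point for network boundaries is a default-deny policy per namespace, with explicit allow rules added afterwards. The sketch below generates such a policy; the policy name and namespace are placeholders, and the policy has an effect only when the cluster's network plugin enforces NetworkPolicy resources.

```python
import json


def default_deny_ingress(namespace: str) -> dict:
    """NetworkPolicy that blocks all inbound traffic to pods in a namespace."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        "spec": {
            # An empty selector matches every pod in the namespace
            "podSelector": {},
            # Listing Ingress without any ingress rules denies all of it
            "policyTypes": ["Ingress"],
        },
    }


print(json.dumps(default_deny_ingress("staging"), indent=2))
```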

Automate environment progression

Environment progression refers to deploying code changes through a series of stages (e.g., development, testing, staging, production), allowing teams to validate changes incrementally. In a Kubernetes CI/CD setup, this progression can be managed by automated pipelines that promote artifacts from one environment to the next once they meet predefined criteria. For example, a Continuous Delivery tool like Octopus can run a series of integration tests, and if a critical subset of the tests passes, the release can be promoted from dev to staging.
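The promotion rule described above can be sketched as a small gate function: a release moves to the next environment only when every test in the critical subset has run and passed. The environment order, test names, and function name are assumptions for illustration and do not represent any particular tool's API.

```python
from typing import Optional

ENVIRONMENTS = ["development", "testing", "staging", "production"]


def next_environment(current: str, results: dict, critical: set) -> Optional[str]:
    """Return the environment to promote to, or None if promotion is blocked."""
    # A critical test that never ran counts as a failure
    missing = critical - set(results)
    failed = {name for name in critical & set(results) if not results[name]}
    if missing or failed:
        return None
    index = ENVIRONMENTS.index(current)
    if index + 1 >= len(ENVIRONMENTS):
        return None  # already in the last environment
    return ENVIRONMENTS[index + 1]


results = {"smoke": True, "login": True, "slow-report": False}
print(next_environment("development", results, critical={"smoke", "login"}))
```

Here the failing `slow-report` test does not block promotion because it is outside the critical subset; adding it to `critical` would make the function return `None`.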

Implementing environment progression ensures that issues are detected early, reducing the likelihood of production outages. It also allows teams to evaluate the impact of updates in a controlled manner, using each environment to catch specific types of errors (e.g., development for unit tests, staging for integration tests). Automating this process helps maintain consistency across deployments and promotes smooth transitions between environments.

Define common base services for your clusters

Establishing a set of base services across Kubernetes clusters simplifies maintenance and standardizes your environments. Base services typically include logging, monitoring, ingress controllers, and authentication. By defining and maintaining these core services, teams can create consistent, reusable environments that simplify application deployment and troubleshooting.

Kubernetes operators and Helm charts can be used to deploy these services across clusters, ensuring they are always configured correctly. This approach reduces setup time for new environments, improves resource use, and makes it easier to maintain uniform security and performance practices across multiple clusters.

Automatically test and update your base services

Automating testing and updates for base services is critical for reliability. Regular testing ensures that changes to core services (e.g., logging or monitoring) do not introduce errors, and that they perform as expected across clusters. Implement automated pipelines that validate base service configurations, and consider rolling update strategies for smooth upgrades.

For updates, use tools like Argo CD or Flux to manage configuration changes in Git, allowing for version-controlled updates and rollbacks if issues arise. Automated testing and updating of base services ensures operational stability, enhances consistency, and reduces the risk of unexpected issues in the production environment.

Use templating for common artifacts across environments

Templating helps standardize deployment configurations across different environments. Tools like Helm and Kustomize allow teams to define templates for Kubernetes manifests, which can then be customized with environment-specific values. This approach ensures consistency while providing the flexibility to adapt configurations for each environment.

Using templates reduces the complexity of managing multiple configurations and helps avoid errors that can occur with manual edits. Templates also make it easier to apply updates across environments simultaneously, as changes can be made in a single template and propagated across environments through automated CI/CD pipelines.

CI/CD with Octopus

Octopus is a leading Continuous Delivery tool designed to manage complex deployments at scale. It enables software teams to streamline Continuous Delivery, accelerating value delivery. With over 4,000 organizations worldwide relying on our solutions for Continuous Delivery, GitOps, and release orchestration, Octopus ensures smooth software delivery across multi-cloud, Kubernetes, data centers, and hybrid environments, whether dealing with containerized applications or legacy systems.

We empower Platform Engineering teams to enhance the Developer Experience (DevEx) while upholding governance, risk, and compliance (GRC). Additionally, we are dedicated to supporting the developer community through contributions to open-source projects like Argo within the CNCF and other initiatives to advance software delivery and operations.
