A Kubernetes deployment YAML file (also called a deployment manifest) is a configuration document used to declare the desired state for applications in a Kubernetes cluster. These files allow developers to specify details like the number of application instances, the container images to use, and other settings.

Written in YAML (a recursive acronym for "YAML Ain't Markup Language"), a simple text-based format, deployment files provide a human-readable way to manage configurations. Because the application state is defined declaratively, Kubernetes can manage and automate the deployment, scaling, and other lifecycle stages of the application.

Below is a simple example of a Kubernetes Deployment YAML file. This file will create a Deployment that manages a Pod running a single instance of an NGINX container. Deployments can get much more complex, with capabilities like automatically launching additional application instances, limiting the computing resources containers are allowed to use, and defining health checks to improve reliability—see the examples section below.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
      labels:
        app: nginx
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx:latest
            ports:
            - containerPort: 80

Anatomy of a Kubernetes deployment YAML file

A typical Kubernetes deployment YAML file comprises four top-level fields: apiVersion, kind, metadata, and spec. Within spec, the replicas, selector, and template fields define most of the deployment’s behavior. Each component has a specific role in defining the deployment’s characteristics.

apiVersion

The apiVersion field specifies the version of the Kubernetes API to use for a given resource. It’s critical to ensure compatibility between the YAML file and the Kubernetes cluster. For deployments, the API version is typically apps/v1.

kind

The kind field defines the type of Kubernetes resource being created or modified. In the case of deployments, the value is Deployment (the value is case-sensitive), signifying that the YAML file is intended to manage a deployment resource. This field helps Kubernetes route the configuration request to the appropriate handler.

metadata

The metadata section contains data that helps uniquely identify the object within the Kubernetes cluster. This section typically includes attributes like:

- name: A unique identifier for the deployment within its namespace.

- namespace: Scopes the deployment to a particular namespace in the Kubernetes cluster.

- labels: Key-value pairs useful for organizing and selecting resources.

Using proper metadata is crucial for managing resources, debugging issues, and maintaining the overall organization of the cluster. Consistent and descriptive labeling practices can significantly simplify tasks such as monitoring, scaling, and updating applications.
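As a minimal sketch, a metadata block that uses all three attributes might look like the following. The staging namespace and the tier and environment labels are hypothetical values chosen for illustration; they are not part of the example above.

```yaml
# Fragment of a Deployment manifest: only the metadata section is shown.
metadata:
  name: nginx-deployment      # must be unique among Deployments in the namespace
  namespace: staging          # hypothetical namespace; it must already exist
  labels:
    app: nginx
    tier: frontend            # hypothetical label for grouping resources
    environment: staging      # hypothetical label for filtering by environment
```

With labels like these, a command such as kubectl get deployments -n staging -l app=nginx would list only the matching deployments. If namespace is omitted, the deployment is created in the namespace of the current kubectl context, which is default unless configured otherwise.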

spec

The spec field outlines the desired state of the deployment, describing the configuration in detail. This includes defining the number of replicas, the desired state of each pod, the container specifications, and other configurations. The spec is the blueprint that Kubernetes uses to implement and maintain the desired state for the deployment.

spec:replicas

The replicas field within the spec section specifies the number of pod instances that should be running at any given time. Running more than one replica improves availability, because the application can keep serving traffic if a single pod fails. Adjusting this value allows administrators to scale the application up or down based on demand.

Kubernetes continuously monitors the deployment to see if the required number of replicas are active, and if not, it starts more replicas or shuts down existing replicas, until the current state of the deployment matches the desired state.

spec:selector

The selector field defines the criteria that identify which pods the deployment manages. This usually means matching on labels assigned to the pods. By using selectors, Kubernetes can manage the lifecycle of specific pod groups, ensuring that the right pods are created, updated, or deleted as per the deployment’s specifications.
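The relationship between the selector and the pod labels can be sketched as below. In the apps/v1 API, the selector must match the labels in the pod template, otherwise the API server rejects the deployment. The tier label is a hypothetical addition for illustration.

```yaml
# Fragment of a Deployment spec: selector and pod template labels only.
spec:
  selector:
    matchLabels:
      app: nginx              # every key/value listed here must be present on the pod
  template:
    metadata:
      labels:
        app: nginx            # satisfies the selector above
        tier: frontend        # hypothetical extra label; pods may carry more labels than the selector requires
```

Keeping the selector narrow (a single stable label such as app) is a common choice, because the selector of an apps/v1 deployment cannot be changed after creation.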

spec:template

The template field within the spec section defines the blueprint for creating pods. It includes nested fields such as metadata, spec, and others that outline the configuration for each pod, including the container images to use, resource requests and limits, and environment variables. Essentially, the template provides a reusable pod definition that ensures consistency when scaling up the deployment.
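As a rough sketch of the nested structure, the fragment below shows a pod template that sets an environment variable. The LOG_LEVEL variable and its value are hypothetical and only illustrate where such settings go.

```yaml
# Fragment of a Deployment spec: the pod template.
template:
  metadata:
    labels:
      app: nginx
  spec:
    containers:
      - name: nginx
        image: nginx:1.26.1
        env:
          - name: LOG_LEVEL       # hypothetical variable name
            value: "info"         # env values must be strings, so quote numbers and booleans
        ports:
          - containerPort: 80
```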

Learn more in our detailed Kubernetes deployment strategy guide.

K8s deployment YAML: 3 examples explained

1. Deployment YAML with multiple replicas

Below is an example of a Kubernetes deployment YAML file that creates a ReplicaSet to bring up three NGINX pods. This example illustrates how a deployment manages multiple replicas of a pod to ensure high availability and scalability of the application.

apiVersion: apps/v1

kind: Deployment

metadata:

name: nginx-deployment

labels:

app: nginx

spec:

replicas: 3

selector:

matchLabels:

app: nginx

template:

metadata:

labels:

app: nginx

spec:

containers:

- name: nginx

image: nginx:1.26.1

ports:

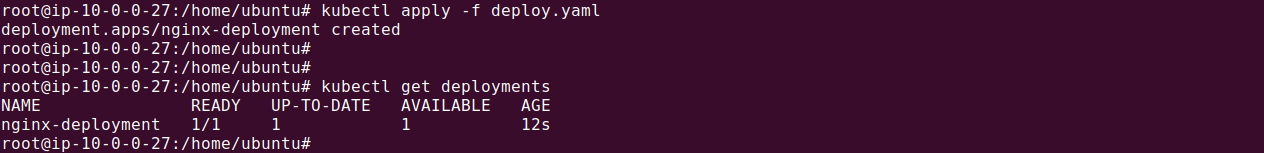

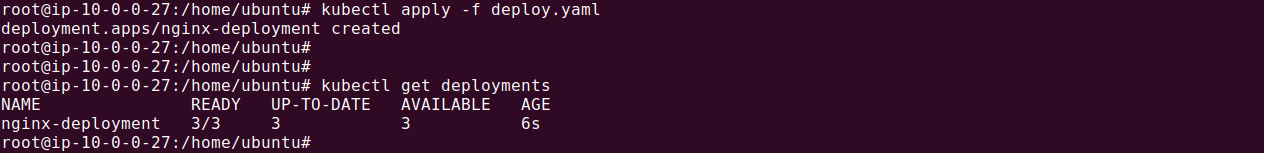

- containerPort: 80Let’s save above configuration in a file called deploy.yaml and apply it using the following command:

kubectl apply -f deploy.yaml

We can check whether the three pod replicas were created using:

kubectl get deployments

Let’s walk through the details of this configuration:

- apiVersion: This specifies the version of the Kubernetes API being used. In this case, apps/v1.

- kind: This declares the type of Kubernetes resource, Deployment in this case.

- metadata: This section includes data that uniquely identifies the deployment within the cluster. It includes:
  - name: Sets the name of the deployment to nginx-deployment.
  - labels: Provides labels that can be used to organize and select resources, with app: nginx indicating this deployment is for the nginx application.

- spec: This section outlines the desired state of the deployment. Key components include:
  - replicas: Specifies that three instances (pods) of the nginx application should be running at all times.
  - selector: Defines the criteria for selecting the pods managed by this deployment. It matches pods with the label app: nginx.
  - template:metadata: Labels for the pods, with app: nginx.
  - template:spec:containers: Describes the desired state of the pods, with details of the containers to be run. For each container, it specifies the name (in this case the container is named nginx), the image (in this case, the nginx:1.26.1 image from Docker Hub), and ports (in this case, the container’s port 80 should be exposed).

Working with the deployment

To create the above deployment, save the YAML content to a file named deploy.yaml and run the following command:

kubectl apply -f deploy.yaml

Verify deployment creation by running:

kubectl get deployments

The output will show the deployment details, including the number of replicas that are up-to-date and available.

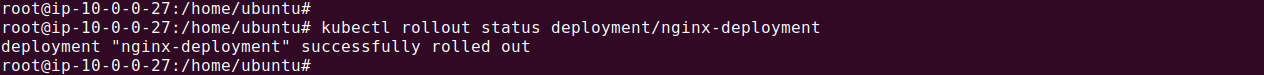

To check the status of the deployment rollout, use:

kubectl rollout status deployment/nginx-deployment

This command reports the progress of the rollout in real time and exits once all specified replicas have been rolled out.

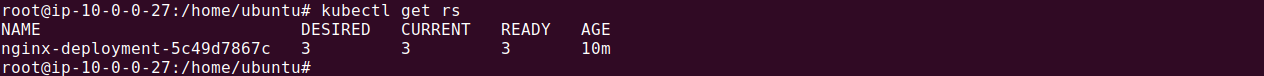

To see the ReplicaSet created by the deployment, run:

kubectl get rs

This command displays details such as the desired number of replicas, current running replicas, and ready replicas.

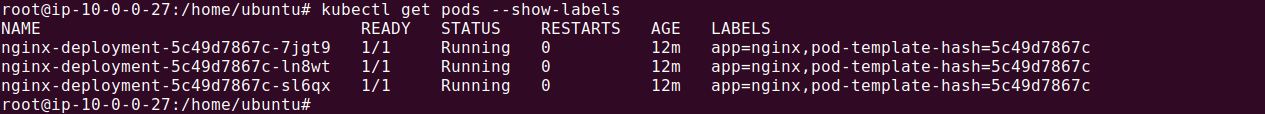

To view the labels assigned to each pod, including the automatically generated pod-template-hash label, execute:

kubectl get pods --show-labels

This output will show the labels assigned to each pod, which helps in identifying and managing the pods created by the deployment.

2. Kubernetes deployment YAML with resource limits

Including resource limits in a Kubernetes deployment YAML file helps manage the resources allocated to each container, ensuring they do not exceed the specified limits and can be scheduled on appropriate nodes. This improves the stability and efficiency of the cluster.

Below is an example of a Kubernetes deployment YAML file that sets resource limits for an nginx deployment.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx:latest
            ports:
            - containerPort: 80
            resources:
              limits:
                memory: "256Mi" # Maximum memory allowed
                cpu: "200m" # Maximum CPU allowed
              requests:
                memory: "128Mi" # Memory reserved for the container at scheduling time
                cpu: "100m" # CPU reserved for the container at scheduling time

Let’s walk through the details of this configuration:

- apiVersion: This specifies the version of the Kubernetes API being used. Here, it is apps/v1.

- kind: This declares the type of Kubernetes resource, Deployment in this case.

- metadata: This section includes data that uniquely identifies the deployment within the cluster.
  - name: Sets the name of the deployment to nginx-deployment.

- spec: This section outlines the desired state of the deployment.
  - replicas: Specifies that three instances (pods) of the nginx application should be running at all times.
  - selector: Defines the criteria for selecting the pods managed by this deployment. It matches pods with the label app: nginx.
  - template:metadata: Defines labels for the pods, with app: nginx.
  - template:spec:containers: The container to be run in each pod, with a name, image, and ports, like in the previous example.
  - resources within template:spec:containers: This section is used to define resource requests and limits for the container.
    - limits: Specifies the maximum amount of CPU and memory that the container is allowed to use. In this example, the container is limited to a maximum of 256 MiB of memory and 200 milliCPU (0.2 CPU cores).
    - requests: Specifies the amount of CPU and memory that Kubernetes reserves for the container. The scheduler uses these values to choose a node with enough free capacity. In this example, the container requests 128 MiB of memory and 100 milliCPU (0.1 CPU cores).
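Kubernetes accepts more than one notation for these quantities. The fragment below is a sketch that keeps the same values as the example above but writes the CPU amounts as fractional cores instead of millicores.

```yaml
# Fragment of a container spec: same values as the example above, different notation.
resources:
  limits:
    memory: "256Mi"   # Mi means mebibytes (2^20 bytes); "256M" would mean megabytes (10^6 bytes)
    cpu: "0.2"        # equivalent to "200m"
  requests:
    memory: "128Mi"
    cpu: "0.1"        # equivalent to "100m"
```

Whichever notation you choose, using it consistently across manifests makes values easier to compare.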

Get started with Octopus for free

Standardize and automate Kubernetes deployments across all your teams and environments.

3. Kubernetes deployment YAML with health checks

Health checks are crucial for maintaining the reliability and availability of applications running within a Kubernetes cluster. They help ensure that applications are running correctly and are ready to serve traffic.

Below is an example of a Kubernetes deployment YAML file that includes liveness and readiness probes for health checks.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx:latest
            ports:
            - containerPort: 80
            livenessProbe:
              httpGet:
                path: / # Path to check for liveness probe
                port: 80 # The port to check on
              initialDelaySeconds: 15 # Seconds to wait before starting probe
              periodSeconds: 10 # Check the probe every 10s
            readinessProbe:
              httpGet:
                path: / # Path to check for readiness probe
                port: 80 # The port to check on
              initialDelaySeconds: 5 # Seconds to wait before starting probe
              periodSeconds: 5 # Check the probe every 5s

Note: Initially, no pods are reported as available, because the readiness probe has not yet succeeded. As soon as a pod passes its readiness probe, it is marked as AVAILABLE.

The first fields in the configuration are the same as in the previous examples. Let’s focus on the settings that define a liveness probe and readiness probe:

- spec:containers:livenessProbe: The liveness probe checks whether the container is still alive. It uses an HTTP GET request to the / path on port 80 of the container. It is instructed to wait 15 seconds to allow the container to start, and after that, check the probe every 10 seconds. If the probe fails, Kubernetes will restart the container.

- spec:containers:readinessProbe: The readiness probe checks whether the container is ready to serve traffic. It also uses an HTTP GET request to the / path on port 80 of the container. It is instructed to wait 5 seconds to allow the container to initialize, and after that, check the probe every 5 seconds. If the probe fails, the container is marked as not ready, and Kubernetes won’t send traffic to it.

Note: The appropriate values for livenessProbe and readinessProbe depend on the specific characteristics of your application.
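As a sketch of how these values might be tuned, the fragment below adds two optional probe fields, timeoutSeconds and failureThreshold. The /healthz path and all of the numbers are assumptions for illustration; NGINX does not serve /healthz unless you configure it to.

```yaml
# Fragment of a container spec: probes with optional tuning fields.
livenessProbe:
  httpGet:
    path: /healthz          # hypothetical health endpoint
    port: 80
  initialDelaySeconds: 30   # assumed value for a slower-starting application
  periodSeconds: 10
  timeoutSeconds: 2         # a probe attempt fails if no response arrives within 2s
  failureThreshold: 3       # restart the container after 3 consecutive failures
readinessProbe:
  httpGet:
    path: /healthz          # hypothetical health endpoint
    port: 80
  periodSeconds: 5
  failureThreshold: 2       # mark the pod not ready after 2 consecutive failures
```

A more tolerant liveness probe than readiness probe, as sketched here, is one way to avoid restarting a container that is only temporarily slow while still taking it out of rotation quickly.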

Learn more in our guide to Kubernetes deployment tools.

Continuous Delivery for Kubernetes with Octopus

Octopus Deploy is a powerful tool for automating your deployments to Kubernetes. Octopus addresses many of the challenges you would experience running applications on Kubernetes, but for the sake of this article, we’ll focus on just two you’ll meet at the very beginning.

Configuration templating

Most likely, you’ll need to deploy your application to more than one environment, and you’ll want your deployment.yaml to vary slightly between environments. At a bare minimum, you’ll need different container image tags. You’ll likely also want different labels, replica counts, and resource limits. With Octopus, you won’t have to keep multiple versions of the configuration file or update them manually. Instead, you can create a configuration once and modify just selected values with variables. Moreover, Octopus can help you to create your first deployment.yaml in a UI.

Deployment and verification

With Octopus, you won’t have to run kubectl apply to deploy a configuration. Octopus will manage it for you. Octopus will also show you the status of your deployment. You will see what objects were deployed and if the cluster managed to run the configuration as intended.

Learn more from our first Kubernetes deployment guide

