What Is KubeEdge?

KubeEdge is an open-source system that extends native containerized application orchestration and device management to edge computing environments. Built on Kubernetes, it lets teams operate workloads and manage resources on edge nodes from the cloud. By exposing the same Kubernetes API at the edge, KubeEdge makes it possible to distribute and manage workloads across centralized data centers and decentralized edge environments.

KubeEdge integrates cloud computing with edge computing, providing a platform for building, deploying, and managing edge applications. This simplifies many of the challenges of edge computing, such as intermittent network connections and resource-constrained environments. By leveraging Kubernetes’ strengths, KubeEdge ensures that edge devices benefit from the same orchestration and management that Kubernetes provides in traditional cloud environments.

KubeEdge is licensed under the Apache 2.0 license. It is backed by the Cloud Native Computing Foundation (CNCF) and moved to the Incubating maturity level in September 2020. It has over 6K stars on GitHub and over 300 contributors. You can get KubeEdge from the official GitHub repo: https://github.com/kubeedge/kubeedge

This is part of a series of articles about Kubernetes deployment.

Why use Kubernetes at the edge?

Kubernetes at the edge provides several advantages. It unifies operations across cloud and edge environments, ensuring consistent management and deployment strategies. This uniformity reduces the complexity associated with maintaining separate systems for cloud and edge.

Using Kubernetes at the edge also enhances resource efficiency and reliability. Kubernetes’ inherent capabilities like self-healing, load balancing, and automated rollouts translate well to edge environments, providing resilient edge solutions. Leveraging these features, businesses can deploy scalable applications at the edge with confidence in their stability and performance.

KubeEdge features and benefits

Kubernetes native API at edge

One significant feature of KubeEdge is the extension of the Kubernetes native API to edge environments. This feature allows developers and operators to use familiar Kubernetes tools and practices, whether they are deploying applications in cloud data centers or at the edge. It ensures that the same orchestration and management strategies are consistent across all environments, promoting efficiency and reducing the learning curve.

Extending Kubernetes’ ecosystem to the edge means enterprises can leverage a wide array of existing Kubernetes tools and solutions. This approach reduces the need for bespoke solutions tailored solely for edge deployments.

Resiliency

Resiliency is a critical attribute in edge computing, and KubeEdge addresses this need. It ensures that workloads continue to operate even in the face of network disruptions or hardware failures. By handling disconnections gracefully and ensuring continuity through local cache mechanisms, KubeEdge provides a resilient operational environment.

Additionally, KubeEdge incorporates health checking and self-healing mechanisms. These capabilities enable automatic detection and remediation of issues, maintaining the reliability and stability of the edge deployments. This ensures that services remain available and performant, a requirement for mission-critical edge applications.

Low-resource footprint

KubeEdge is designed to operate efficiently in resource-constrained environments. From minimizing compute resource requirements to optimizing memory usage, KubeEdge ensures that edge applications can run effectively even on devices with limited capabilities. This low-resource footprint makes it suitable for a variety of edge devices, from IoT sensors to edge servers.

Scalability

Scalability in edge computing environments is crucial. KubeEdge supports horizontal scaling of applications across multiple edge nodes, ensuring that as demand grows, resources can be added accordingly.

KubeEdge’s architecture also supports efficient resource management across a distributed set of edge nodes. This facilitates scaling operations, enabling the deployment of large-scale edge applications with ease.

Deployment to the edge

KubeEdge simplifies the deployment of applications to edge environments. By integrating with the native Kubernetes API, KubeEdge allows developers to use familiar tools and manifests to deploy workloads across distributed edge nodes.

This feature ensures that applications are consistently managed and orchestrated, whether they are running in centralized cloud data centers or at the edge. The ability to use Kubernetes’ standard deployment tools, such as kubectl, provides a unified approach to managing applications.
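As a rough illustration of this workflow, the sketch below writes an ordinary Deployment manifest and applies it with kubectl. The Deployment name (edge-nginx), the image, and the node-role.kubernetes.io/edge label used to target edge nodes are assumptions for the example; check the labels on your own edge nodes before relying on them.

```shell
#!/bin/sh
# Sketch: deploy a standard Kubernetes Deployment to KubeEdge edge nodes.
# Assumptions (not from the article): the name "edge-nginx", the nginx image,
# and the node-role.kubernetes.io/edge label on edge nodes.
MANIFEST="${TMPDIR:-/tmp}/edge-nginx.yaml"

cat > "$MANIFEST" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: edge-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: edge-nginx
  template:
    metadata:
      labels:
        app: edge-nginx
    spec:
      nodeSelector:
        node-role.kubernetes.io/edge: ""
      containers:
        - name: nginx
          image: nginx:stable
EOF

# Apply only when kubectl is available; otherwise report where the file is.
if command -v kubectl >/dev/null 2>&1; then
  kubectl apply -f "$MANIFEST" || echo "kubectl apply failed; check cluster access" >&2
else
  echo "kubectl not found; manifest written to $MANIFEST"
fi
```

Nothing in the manifest is KubeEdge-specific apart from the node selector, which is the point: the same file format and the same kubectl command work for cloud and edge targets.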

Optimized for edge environments

Another significant benefit of KubeEdge’s deployment capabilities is its optimization for the unique demands of edge environments. KubeEdge supports edge-specific configurations, such as node affinity and local storage, to ensure that workloads perform efficiently even in resource-constrained settings.
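To make the node affinity and local storage options more concrete, here is a minimal sketch of a Pod that is only scheduled onto edge nodes and writes to a directory on the node itself. The Pod name, the busybox image, the /var/lib/edge-data path, and the edge node label are all hypothetical choices for illustration.

```shell
#!/bin/sh
# Sketch: pin a Pod to edge nodes with node affinity and give it node-local storage.
# Assumptions: the edge node label, the Pod name, the image, and the host path.
POD_MANIFEST="${TMPDIR:-/tmp}/edge-logger-pod.yaml"

cat > "$POD_MANIFEST" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: edge-logger
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
          - matchExpressions:
              - key: node-role.kubernetes.io/edge
                operator: Exists
  containers:
    - name: logger
      image: busybox:stable
      command: ["sh", "-c", "while true; do date >> /data/heartbeat.log; sleep 30; done"]
      volumeMounts:
        - name: local-data
          mountPath: /data
  volumes:
    - name: local-data
      hostPath:
        path: /var/lib/edge-data
        type: DirectoryOrCreate
EOF

echo "Pod manifest written to $POD_MANIFEST"
```

Because the data lives in a hostPath volume on the edge node, the container can keep writing while the node is disconnected from the cloud.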

Additionally, its architecture is designed to handle network interruptions and low-latency requirements, ensuring that applications remain operational and responsive. This combination of familiar deployment processes and edge-focused optimizations makes KubeEdge a powerful tool for extending Kubernetes’ reach to the edge.

KubeEdge versus K3s versus Kind

KubeEdge, K3s, and Kind are all Kubernetes-based solutions, but they cater to different use cases and environments.

KubeEdge is specifically designed for edge computing. It extends Kubernetes to edge environments, allowing for the management of applications on devices with limited resources and intermittent network connectivity. KubeEdge supports features such as offline operation, local resource management, and communication between edge and cloud.

K3s is a lightweight Kubernetes distribution that can also be used for IoT and edge computing, but it is more focused on simplifying Kubernetes itself for resource-constrained environments rather than extending it to the edge. K3s reduces the memory and CPU footprint by removing non-essential components and consolidating the control plane, making it suitable for smaller clusters or single-node deployments where minimal resource usage is critical.

Kind (Kubernetes in Docker) is primarily a tool for running local Kubernetes clusters using Docker containers. It’s not designed for production environments but rather for development and testing. Kind allows developers to quickly spin up Kubernetes clusters on their local machines, facilitating testing and development without the overhead of full-fledged Kubernetes environments.

Related content: Read our guide to Kubernetes deployment strategy

KubeEdge architecture components

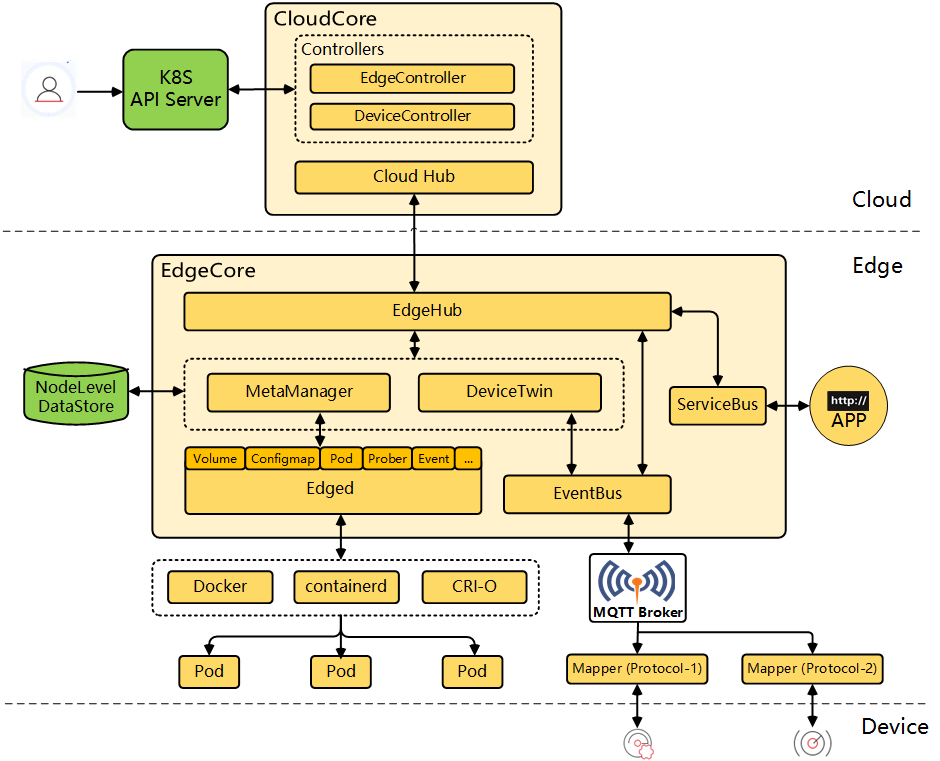

The following diagram illustrates the primary components of KubeEdge. We describe each component in more detail below.

Source: Kubeedge

Edged

Edged is the container runtime agent running on edge nodes. It manages containerized applications hosted on edge devices, similar to Kubelet in Kubernetes. Edged executes workloads and gathers status information, ensuring the effective operation of edge nodes.

Additionally, Edged handles local persistence for application data and configuration, ensuring that edge applications can continue to operate independently during network outages. This local activity management underpins the resilience capabilities that KubeEdge provides.

EdgeHub

EdgeHub is the communication interface used to connect edge nodes to the cloud. It facilitates data transfer and synchronization between edge and cloud components, ensuring that edge nodes remain connected and up-to-date. EdgeHub supports multiple communication protocols, such as WebSocket and QUIC, which helps it adapt to varied network environments.

EdgeHub manages secure communication channels between edge and cloud, handling tasks such as authentication and message encryption. This secure communication is crucial for maintaining data integrity and protecting sensitive information as it travels between cloud and edge.

CloudHub

CloudHub acts as the central communication point in the cloud for KubeEdge. It is responsible for receiving data and control messages from edge nodes and distributing them appropriately. CloudHub ensures that the cloud components of KubeEdge can effectively manage and orchestrate edge devices.

CloudHub plays a vital role in maintaining a global view of the edge infrastructure. It helps in aggregating status information from multiple edge nodes, enabling centralized monitoring and control, which is essential for large-scale edge deployments.

EdgeController

EdgeController manages the lifecycle of application deployment on edge nodes. It orchestrates deployments, updates, and synchronization tasks, ensuring that edge applications are appropriately managed and maintained from the cloud.

Additionally, EdgeController handles device management operations, allowing centralized control over edge devices’ configuration and status. This component ensures that both application and device states are consistent and aligned across the edge nodes and cloud.

EventBus

EventBus provides message passing within KubeEdge. It acts as an MQTT client, allowing edge modules to exchange messages with MQTT brokers and, through them, with devices and external systems. EventBus ensures that events generated by edge applications and devices are processed and relayed accurately.

Furthermore, EventBus supports bi-directional communication, meaning it can relay messages from cloud to edge and vice versa. This capability is crucial for maintaining a synchronized state between operational layers and ensuring responsive edge environments.

DeviceTwin

DeviceTwin is responsible for modeling and maintaining the state of devices connected to the edge. It creates a digital twin for each physical device, allowing for state synchronization and status monitoring. This component is key for ensuring device configurations and statuses are consistently tracked and managed.

Additionally, DeviceTwin supports rules and policies for device behavior, facilitating automated responses to changes in device states. This automated management streamlines operations and reduces manual intervention, improving overall efficiency.
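As a sketch of how a device-side program might report state to its twin, the example below builds an MQTT topic and a JSON payload and publishes them to a local broker. The device ID, property name, broker address, topic layout, and payload shape are assumptions modeled on common KubeEdge examples, not something this article specifies, so verify them against the documentation for your version.

```shell
#!/bin/sh
# Sketch: report a device's actual state to DeviceTwin over MQTT.
# Assumptions: device ID, property name, broker address, topic layout, and
# payload shape are illustrative; check them against your KubeEdge version.
DEVICE_ID="temperature-sensor-01"
BROKER_HOST="127.0.0.1"
BROKER_PORT="1883"

twin_update_topic() {
  # $1 = device ID. Single quotes stop the shell from expanding "$hw".
  printf '$hw/events/device/%s/twin/update' "$1"
}

twin_update_payload() {
  # $1 = property name, $2 = reported (actual) value
  printf '{"event_id":"","timestamp":0,"twin":{"%s":{"actual":{"value":"%s"},"metadata":{"type":"Updated"}}}}' "$1" "$2"
}

TOPIC=$(twin_update_topic "$DEVICE_ID")
PAYLOAD=$(twin_update_payload "temperature" "25")

# Publish only when the mosquitto client is installed; otherwise show the message.
if command -v mosquitto_pub >/dev/null 2>&1; then
  mosquitto_pub -h "$BROKER_HOST" -p "$BROKER_PORT" -t "$TOPIC" -m "$PAYLOAD" \
    || echo "publish failed; is an MQTT broker running on the edge node?" >&2
else
  echo "mosquitto_pub not found; would publish to $TOPIC:"
  echo "$PAYLOAD"
fi
```

The payload carries only the actual (reported) value; the desired value is normally set from the cloud side, and the twin tracks the difference between the two.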

MetaManager

MetaManager handles metadata management for edge applications and devices. It manages the storage, retrieval, and synchronization of metadata between edge and cloud, ensuring consistency and accuracy. MetaManager plays a vital role in maintaining the metadata’s integrity, which is crucial for effective edge operations.

MetaManager supports local caching, enabling edge applications and devices to continue operations during cloud disconnections. This capability is essential for maintaining uninterrupted service in edge computing environments where network stability can be variable.
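If you want to see what is cached on an edge node, a sketch like the following can help. It assumes the cache is a SQLite file at /var/lib/kubeedge/edgecore.db with a table named meta that has key and type columns; these are common defaults rather than details confirmed by this article, and they may differ between KubeEdge versions.

```shell
#!/bin/sh
# Sketch: peek at the metadata EdgeCore has cached locally.
# Assumptions: the database path and the "meta" table with key/type columns
# reflect common defaults and may differ between KubeEdge versions.
EDGE_DB="${EDGE_DB:-/var/lib/kubeedge/edgecore.db}"

list_cached_meta() {
  # $1 = path to the SQLite file
  if ! command -v sqlite3 >/dev/null 2>&1; then
    echo "sqlite3 not installed" >&2
    return 1
  fi
  if [ ! -r "$1" ]; then
    echo "cannot read $1 (run on the edge node, usually as root)" >&2
    return 1
  fi
  # Read-only query: list the kind and key of the first cached objects.
  sqlite3 "$1" 'SELECT "type", "key" FROM meta ORDER BY "type" LIMIT 20;'
}

list_cached_meta "$EDGE_DB" || echo "skipped: no local metadata store inspected"
```

The query only reads from the database. Avoid modifying the file by hand, since EdgeCore relies on it to restore workloads after a restart without cloud connectivity.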

ServiceBus

ServiceBus provides a unified communication layer for inter-service communication within KubeEdge. It supports various communication protocols, enabling interaction between different services running on edge nodes. This unified layer simplifies the complexity of managing multiple communication channels and protocols.

Additionally, ServiceBus enhances the scalability and reliability of service interactions. By providing a communication framework, it ensures that service requests and responses are managed, supporting the operation of edge applications and services.

DeviceController

DeviceController manages the lifecycle and status of devices connected to the edge infrastructure. It orchestrates device onboarding, configuration, and monitoring tasks, ensuring that devices are correctly integrated and managed from the cloud.

DeviceController supports policy-based management, enabling automated control over device states and configurations. This automated approach reduces the operational burden and enhances the consistency and reliability of device management across the edge environment.

Quick tutorial: Installing KubeEdge with Keadm

Prerequisites

To install KubeEdge using Keadm, you need superuser (root) rights. You also need a running Kubernetes cluster whose version is compatible with KubeEdge; refer to the Kubernetes compatibility documentation to check.
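A small pre-flight script can catch missing prerequisites before you start. This is a minimal sketch; the list of tools it checks (docker and kubectl) simply mirrors what this tutorial uses and is an assumption you can adjust.

```shell
#!/bin/sh
# Sketch: pre-flight checks before running Keadm.
# Assumption: the required tools (docker, kubectl) mirror this tutorial.
is_root() {
  [ "$(id -u)" -eq 0 ]
}

missing_tools() {
  # Prints the names of any listed commands that are not on PATH.
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

if is_root; then
  echo "running as root: ok"
else
  echo "not root: run the Keadm steps with sudo or as root"
fi

MISSING=$(missing_tools docker kubectl)
if [ -n "$MISSING" ]; then
  # Unquoted on purpose so the names print on one line.
  echo "missing tools:" $MISSING
else
  echo "docker and kubectl found"
  kubectl version --request-timeout=5s 2>/dev/null \
    || echo "kubectl could not reach a cluster"
fi
```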

Step 1: Installing Keadm

In this tutorial, we’ll install Keadm by extracting it from the installation image on Docker Hub (alternatively, you can download it from GitHub):

docker run --rm kubeedge/installation-package:v1.17.0 cat /usr/local/bin/keadm > /usr/local/bin/keadm

chmod +x /usr/local/bin/keadm

Step 2: Setting Up the Cloud Side (KubeEdge Master Node)

Initialize Keadm:

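keadm init --advertise-address="THE-EXPOSED-IP" --profile version=v1.12.1 --kube-config=/root/.kube/config

Replace THE-EXPOSED-IP with your cloud node’s IP address. The version passed in --profile (v1.12.1 here) is the KubeEdge version to install; choose one that is compatible with the Keadm binary you downloaded in Step 1.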

Verify CloudCore Deployment:

kubectl get all -n kubeedge

Step 3: Setting Up the Edge Side (KubeEdge Worker Node)

Retrieve the token from the cloud side:

keadm gettoken

Join the edge node:

keadm join --cloudcore-ipport="THE-EXPOSED-IP:10000" --token=YOUR_TOKEN --kubeedge-version=v1.12.1

Replace THE-EXPOSED-IP:10000 with your CloudCore IP and port, and YOUR_TOKEN with the token retrieved.
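After joining, it is worth confirming that the node actually registered. The sketch below checks the EdgeCore service on the edge node and lists edge nodes from the cloud side. It assumes that edge nodes carry the node-role.kubernetes.io/edge label; if yours do not, a plain kubectl get nodes works as well.

```shell
#!/bin/sh
# Sketch: confirm that the edge node registered with the cluster.
# Assumption: edge nodes carry the node-role.kubernetes.io/edge label.
EDGE_SELECTOR="node-role.kubernetes.io/edge"

# On the edge node: check that the EdgeCore service is active.
if command -v systemctl >/dev/null 2>&1; then
  systemctl is-active edgecore.service 2>/dev/null \
    || echo "edgecore is not active on this machine"
fi

# On the cloud node: list nodes that joined as edge nodes.
if command -v kubectl >/dev/null 2>&1; then
  kubectl get nodes -l "$EDGE_SELECTOR" -o wide --request-timeout=10s \
    || echo "could not query nodes" >&2
else
  echo "kubectl not found; run this part on the cloud node"
fi
```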

Step 4: Enabling kubectl Logs Feature

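Set an environment variable with the CloudCore IP (192.168.0.139 is an example value; use your own):

export CLOUDCOREIPS="192.168.0.139"

Generate certificates for CloudStream. The certgen.sh script ships in the KubeEdge source repository, so the cp command below assumes a local clone under $GOPATH: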

sudo su

cp $GOPATH/src/github.com/kubeedge/kubeedge/build/tools/certgen.sh /etc/kubeedge/

cd /etc/kubeedge/

./certgen.sh stream

Configure iptables on the host:

iptables -t nat -A OUTPUT -p tcp --dport 10350 -j DNAT --to $CLOUDCOREIPS:10003

iptables -t nat -A OUTPUT -p tcp --dport 10351 -j DNAT --to $CLOUDCOREIPS:10003

Modify CloudCore configuration file:

sudo nano /etc/kubeedge/config/cloudcore.yaml

Set enable to true for cloudStream.

Modify EdgeCore configuration file:

sudo nano /etc/kubeedge/config/edgecore.yaml

Set enable to true for edgeStream, and set server to the CloudCore IP and tunnel port (10004 by default).
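To double-check the two configuration edits, a small helper can print just the relevant section of each file. This is a sketch that assumes the section key (cloudStream, or edgeStream on the edge side, which is an assumed name here) sits on its own line with its settings indented beneath it, as in the generated YAML files.

```shell
#!/bin/sh
# Sketch: print one module's settings from a KubeEdge config file.
# Assumptions: the section key is on its own line, its settings are indented
# beneath it, and the edge-side section is named "edgeStream".
show_section() {
  # $1 = config file, $2 = section name (for example cloudStream)
  awk -v name="$2" '
    $1 == (name ":") { indent = match($0, /[^ ]/); print; found = 1; next }
    found {
      cur = match($0, /[^ ]/)
      # Keep blank lines and lines indented deeper than the section key.
      if (cur == 0 || cur > indent) print; else exit
    }
  ' "$1"
}

for pair in \
  "/etc/kubeedge/config/cloudcore.yaml:cloudStream" \
  "/etc/kubeedge/config/edgecore.yaml:edgeStream"
do
  file=${pair%%:*}
  section=${pair##*:}
  if [ -r "$file" ]; then
    show_section "$file" "$section"
  else
    echo "skip $file (not readable on this machine)"
  fi
done
```

In each printed section you should see enable: true; on the edge side, the server value should point at your CloudCore address.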

Next, restart CloudCore. Here is how to do it in process mode:

pkill cloudcore

nohup cloudcore > cloudcore.log 2>&1 &

And here is how to do it in Kubernetes deployment mode:

kubectl -n kubeedge rollout restart deployment cloudcore

Finally, restart EdgeCore:

systemctl restart edgecore.service

Learn more in our detailed guide to Kubernetes deployment YAML

Automating Kubernetes edge deployments with Octopus

Octopus Deploy natively supports edge deployments. With Octopus, you can use centralized templates for deployment processes and Kubernetes manifests to standardize deployments across hundreds or thousands of clusters. At the same time, you can specify unique parameters for individual clusters or groups of clusters to customize your deployment processes and manifests on the fly. This approach provides both standardization and flexibility.

You also have the option to group your clusters into environments, allowing you to roll out new versions of your application to a select few clusters initially. Using Octopus pipelines, you can incorporate tests, infrastructure steps, change management approvals, or manual interventions into your deployment process.

Learn more about tenants in Octopus.
