What is horizontal scalability in Jenkins?

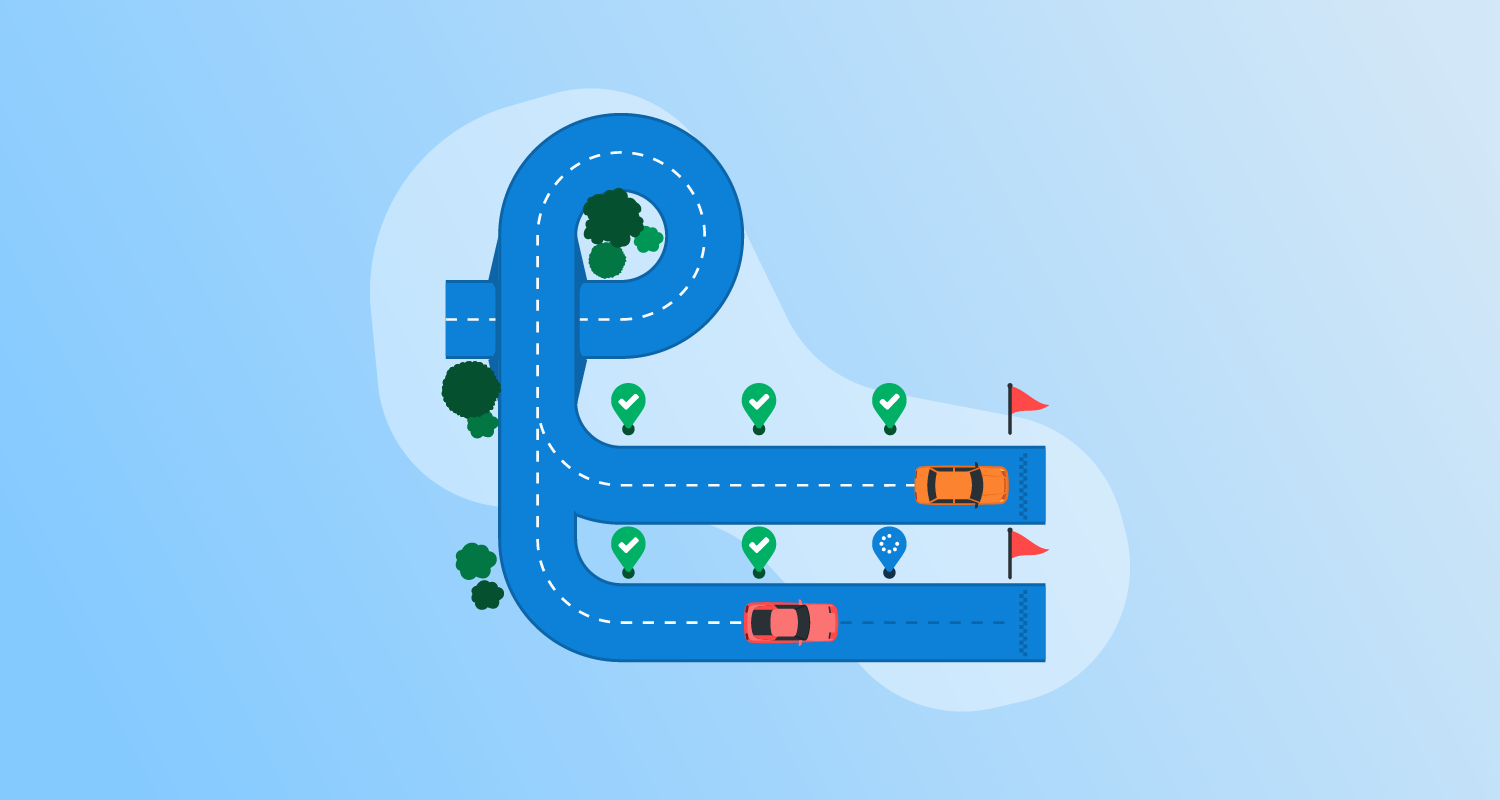

Horizontal scaling in Jenkins involves adding more Jenkins controller instances, each with its own agents, to distribute the workload and increase capacity. This contrasts with vertical scaling, which focuses on increasing the resources of a single Jenkins instance. Horizontal scaling is crucial for handling growing numbers of jobs, teams, and projects, enabling better performance, resilience, and manageability.

Horizontal scalability allows organizations to adapt as their automation needs grow. By spreading the workload across multiple controllers or agents, Jenkins can continue to function smoothly even during heavy, unpredictable workloads or scheduled spikes in usage. Horizontal scaling also minimizes downtime and helps prevent single points of failure.

Key aspects of horizontal scaling in Jenkins:

- Multiple controllers: Instead of relying on a single Jenkins instance, horizontal scaling involves deploying multiple independent Jenkins controllers, each managing its own jobs and agents.

- Distributed workloads: Each controller can be dedicated to specific teams, projects, or even different stages of the software development lifecycle.

- Scalable agents: Agents can be added to each controller to handle the execution of builds and tests, further distributing the workload and improving performance.

- Cloud-based agents: Using cloud-based agents allows for dynamic scaling, where agents are automatically provisioned and deprovisioned based on demand.

- Cloud migration: Horizontal scaling can also prepare the Jenkins environment for a smooth transition to the cloud, as users can easily deploy and manage controllers in a cloud environment.

Benefits of horizontal scaling include:

- High availability: Horizontal scaling, when combined with High Availability (HA) features, allows for seamless failover and redundancy, ensuring continuous operation even if one controller instance experiences issues.

- Reduced downtime: With multiple controllers, if one fails, the others can continue to operate, minimizing downtime and ensuring business continuity.

- Improved performance: Distributing the workload across multiple controllers and agents can significantly improve the overall performance of the Jenkins environment, especially during peak loads.

- Better manageability: Breaking down a large monolithic Jenkins instance into smaller, more manageable controllers can simplify administration and reduce the impact of plugin conflicts or other issues.

- Cost efficiency and flexibility: Provisioning cloud-based agents based on need helps eliminate waste, allowing organizations to scale out during peak demand periods and scale back again when demands subside.

- Foundation for modernization: The ability to scale horizontally in Jenkins enables modular architectures, making it easier to modernize the CI/CD workflow in increments.

Key aspects of horizontal scaling in Jenkins

Multiple controllers

Horizontal scaling in Jenkins involves deploying multiple independent controller instances, each managing its own jobs and agents. This setup allows controllers to be dedicated to specific teams, projects, or stages of the development lifecycle. Workloads are isolated between controllers, so changes, maintenance, or failures in one instance do not affect others. Administrators can apply updates, test configurations, and enforce security boundaries on a per-controller basis, simplifying operations in multi-tenant or large-scale environments.

Distributed workloads

In a horizontally scaled setup, build and test jobs are spread across multiple controllers and their associated agents. Instead of relying on a single server to process all pipelines, each controller schedules its jobs on its own pool of agents, which prevents bottlenecks. Labels can pin critical jobs to dedicated agents, and work can be split between controllers by project, ensuring efficient use of resources and smoother handling of workload spikes during peak periods.

Scalable agents

Agents are worker nodes that execute builds and tests. Scaling horizontally includes adding more agents to controllers to process jobs concurrently. Jenkins supports dynamic provisioning of agents on physical servers, virtual machines, or containerized environments. Infrastructure-as-code tools and automation scripts can be used to spin up or tear down agents as demand changes, maintaining fast build times and reducing queue backlogs.
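The scale-out decision described above can be sketched as a small function. This is a minimal illustration, not how Jenkins or any plugin computes capacity: the function name, its parameters, and the cap on new agents are all assumptions made for the example.

```python
import math


def agents_to_provision(queued_builds: int, idle_executors: int,
                        executors_per_agent: int, max_new_agents: int) -> int:
    """Return how many new agents to start for the current build queue.

    Hypothetical helper: all inputs would come from your own monitoring.
    """
    if executors_per_agent <= 0:
        raise ValueError("executors_per_agent must be positive")
    # Builds that cannot be served by executors that are already idle.
    unserved = max(0, queued_builds - idle_executors)
    # Round up so a partially filled agent is still started.
    needed = math.ceil(unserved / executors_per_agent)
    # Never exceed the configured cap, to keep costs bounded.
    return min(needed, max_new_agents)


print(agents_to_provision(queued_builds=9, idle_executors=2,
                          executors_per_agent=2, max_new_agents=10))
```

An automation script could call a function like this on a schedule and pass the result to whatever tool starts or stops agent hosts.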

Cloud-based agents

Jenkins can integrate with cloud platforms to deploy agents on demand. Cloud-based agents enable elastic scaling, where compute resources are provisioned automatically in response to workload fluctuations. This approach removes the limitations of on-premises hardware and allows Jenkins to handle sudden surges in activity without manual intervention. Teams can also run jobs in specific geographic regions or on specialized cloud hardware.

Cloud migration

Distributing workloads across multiple controllers and agents prepares Jenkins environments for cloud adoption. Controllers and agents can be deployed in hybrid or fully cloud-native architectures, allowing seamless transitions. This setup enables organizations to use cloud elasticity, global reach, and integration with modern DevOps toolchains while ensuring continuity of their existing pipelines.

Benefits of horizontal scaling in Jenkins

Here are the main benefits of scaling your Jenkins instances horizontally:

- High availability: With multiple agents, a controller can schedule a build on another agent with a matching label when a node fails, and with multiple controllers, an outage in one instance does not stop the others. Combined with HA features, this redundancy supports failover and allows critical pipelines to keep running during maintenance or unexpected outages.

- Reduced downtime: When one controller is taken offline for updates or troubleshooting, others continue to operate normally. This isolation minimizes the impact of failures and improves business continuity for teams relying on Jenkins for their delivery workflows.

- Improved performance: By distributing builds and tests across multiple controllers and agents, Jenkins can process more jobs in parallel. This reduces build queue times, speeds up feedback for developers, and keeps pipelines responsive even during periods of heavy usage.

- Better manageability: Breaking a monolithic Jenkins setup into smaller, purpose-focused controllers simplifies administration. Issues such as plugin conflicts or configuration errors are contained within individual instances, making troubleshooting faster and reducing the blast radius of changes.

- Cost efficiency and flexibility: Dynamic provisioning of cloud-based agents ensures resources are used only when needed. Organizations can scale out during high demand and scale back in during quiet periods, optimizing costs without sacrificing performance.

- Foundation for modernization: Horizontal scaling lays the groundwork for cloud migration and hybrid deployments. It supports a modular architecture where teams can modernize parts of their CI/CD workflows incrementally instead of overhauling the entire system at once.

Tools and plugins for horizontal scaling in Jenkins

1. Kubernetes plugin

The Kubernetes plugin enables Jenkins to dynamically provision agents in a Kubernetes cluster, supporting horizontal scalability through automated pod-based agent management. Instead of relying on statically configured nodes, Jenkins creates a new Kubernetes pod for each agent, tailored to the job’s requirements, and tears it down after the build completes.

Agents are launched as inbound agents that connect back to the Jenkins controller. The plugin automatically injects required environment variables—such as JENKINS_URL, JENKINS_SECRET, and JENKINS_AGENT_NAME—to enable secure agent registration and communication.

Key features include:

- Dynamic pod-based agents: Each Jenkins agent runs in a dedicated Kubernetes pod. Pods are created per build and destroyed afterward, minimizing resource waste and configuration drift.

- Declarative and scripted pipeline support: Users can define pod templates using UI or pipeline syntax. Pod templates can include one or more containers and can be specified inline, by YAML, or inherited and composed via nesting.

- Custom containers and toolchains: Pod templates support multiple containers, allowing users to run steps in specialized containers (e.g., for Maven, Golang). The container step enables execution in specified containers.

- Pod template inheritance and composition: Templates can inherit configuration from one or more other templates, enabling reuse and reducing duplication. Nested pod templates allow modular and flexible build environments.

- WebSocket and external cluster support: WebSocket-based agent connections simplify communication when Jenkins is outside the Kubernetes cluster or behind a proxy. This allows broader infrastructure flexibility without sacrificing connectivity.
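To make the pod-based agent model more concrete, the sketch below builds a pod manifest by hand, roughly in the shape described above: one agent container plus optional tool containers. It is an approximation for illustration only, since the plugin generates and injects these values itself. The function name, the `jenkins: agent` label, the secret reference, and the Maven image tag are assumptions.

```python
import json


def build_agent_pod(agent_name, jenkins_url, secret_ref, tool_containers=None):
    """Build a pod manifest (as a dict) for one inbound agent.

    Hypothetical helper: secret_ref names a Kubernetes Secret assumed to
    hold the agent secret under the key "secret".
    """
    agent = {
        "name": "jnlp",  # conventional name of the agent container
        "image": "jenkins/inbound-agent",
        "env": [
            {"name": "JENKINS_URL", "value": jenkins_url},
            {"name": "JENKINS_AGENT_NAME", "value": agent_name},
            {"name": "JENKINS_SECRET",
             "valueFrom": {"secretKeyRef": {"name": secret_ref, "key": "secret"}}},
        ],
    }
    # Tool containers idle until a pipeline step runs a command inside them.
    tools = [
        {"name": name, "image": image, "command": ["sleep"], "args": ["infinity"]}
        for name, image in (tool_containers or {}).items()
    ]
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": agent_name, "labels": {"jenkins": "agent"}},
        "spec": {"restartPolicy": "Never", "containers": [agent] + tools},
    }


pod = build_agent_pod("agent-1", "https://jenkins.example.com/", "agent-1-secret",
                      {"maven": "maven:3.9-eclipse-temurin-17"})
print(json.dumps(pod, indent=2))
```

Because JSON is valid YAML, the printed manifest can be read the same way as a YAML pod template.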

2. Docker plugin

The Docker plugin enables Jenkins to dynamically provision containers as build agents, using Docker as an on-demand cloud resource. Each Jenkins job runs in a fresh Docker container, created from a user-defined image, and discarded once the job is complete. This setup abstracts Docker from job definitions, letting Jenkins administrators manage container provisioning while jobs remain unaware of the underlying containerization.

The plugin uses the Docker Remote API via docker-java and does not require a Docker CLI installed on Jenkins nodes. It allows multiple Docker hosts to be configured as clouds and supports various agent launch methods, including SSH, JNLP, and attached mode.

Key features include:

- Dynamic agent provisioning: Jenkins administrators define Docker templates mapped to corresponding labels. When a job requests an agent with a matching label, the plugin launches a new container from the configured image and removes it after the build.

- Multiple Docker hosts: Supports configuration of multiple Docker clouds, each pointing to different Docker daemons. This allows workloads to be distributed across several physical or virtual hosts.

- Flexible launch options:

  - JNLP launch: Requires only a JDK in the image. The container connects back to the Jenkins controller using injected credentials.

  - SSH launch: Requires an SSH server and JDK in the image. Jenkins connects over SSH and does not require pre-configured credentials for standard sshd.

  - Attached mode: Suitable for containers that can start and run directly under Jenkins control without remote connectivity.

- Custom Docker images: Users can build tailored images by extending official Jenkins agent images and installing required tools. Templates can specify pull policies, remote FS root, environment variables, and entrypoints.

- Local and remote Docker daemons: Jenkins can connect to Docker over UNIX sockets or TCP, enabling setups where the Jenkins controller runs on a different machine from the Docker host.
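The label-to-template mapping described above can be modeled in a few lines. This is a simplified sketch, not the plugin's code: the `DockerTemplate` class, the `pick_template` function, and the image names are hypothetical, and only a single label is matched rather than a full Jenkins label expression.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class DockerTemplate:
    """Hypothetical stand-in for an administrator-defined Docker template."""
    image: str
    labels: frozenset
    launch_method: str  # "jnlp", "ssh", or "attached"


def pick_template(templates: Iterable[DockerTemplate],
                  requested_label: str) -> Optional[DockerTemplate]:
    """Return the first template that carries the requested label, if any."""
    for template in templates:
        if requested_label in template.labels:
            return template
    return None


templates = [
    DockerTemplate("example/agent-maven", frozenset({"maven", "java"}), "jnlp"),
    DockerTemplate("example/agent-go", frozenset({"golang"}), "ssh"),
]
match = pick_template(templates, "golang")
print(match.image if match else "no matching template")
```

A job that requests an unknown label gets no template here, which corresponds to a build waiting in the queue until a matching agent exists.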

3. EC2 plugin

The EC2 plugin enables Jenkins to dynamically provision build agents in Amazon EC2 based on job demand. When Jenkins identifies that additional capacity is needed, it uses the EC2 API to start instances automatically. Once demand subsides, idle instances are stopped or terminated. This elasticity makes it suitable for extending local infrastructure into the cloud.

Each agent is provisioned from a user-defined Amazon Machine Image (AMI), configured with labels and settings. These instances automatically connect to the Jenkins controller over SSH or JNLP, depending on the setup.

Key features include:

- On-demand agent provisioning: Jenkins automatically spins up EC2 instances when build load increases. Instances are connected as agents and stopped or terminated after an idle timeout.

- Multiple AMI support: Users can configure multiple AMIs with different OS and software configurations. Labels are used to match jobs to the appropriate AMI, enabling platform-specific workloads.

- Spot instances: The plugin supports AWS Spot Instances for cost savings. When enabled, Jenkins provisions spot instances using a specified bid price. If the spot instance is not available immediately, it retries or falls back to on-demand instances, depending on configuration.

- Init and startup scripts: When launching a new EC2 instance, the plugin can run a custom shell script to install required tools or configure the environment. These scripts are executed before the agent connects to Jenkins.

- Persistent instances option: Instead of terminating idle instances, the plugin can stop them. This is useful for preserving state on EBS volumes and reducing startup time when instances are reused.
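The idle-timeout behavior described above, including the choice between stopping and terminating, can be sketched as a pure decision function. The function and parameter names are assumptions for illustration and do not correspond to the plugin's settings.

```python
from datetime import datetime, timedelta


def idle_action(last_build_finished: datetime, now: datetime,
                idle_timeout_minutes: int, stop_on_idle: bool) -> str:
    """Decide what to do with an agent instance: "keep", "stop", or "terminate"."""
    idle_for = now - last_build_finished
    if idle_for < timedelta(minutes=idle_timeout_minutes):
        return "keep"
    # Stopping preserves the EBS volume for reuse; terminating releases it.
    return "stop" if stop_on_idle else "terminate"


finished = datetime(2024, 1, 1, 12, 0)
print(idle_action(finished, datetime(2024, 1, 1, 12, 45), 30, stop_on_idle=True))
```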

4. CloudBees Jenkins Operations Center

CloudBees Jenkins Operations Center is a central component supporting horizontal scaling in Jenkins environments. It enables organizations to manage multiple Jenkins controllers from a single interface, providing centralized control and visibility across distributed workloads. This is especially beneficial in large-scale or multi-team environments where each controller may serve different use cases or departments.

Operations Center can be deployed on various platforms, including Linux, Windows, Docker, and Apache Tomcat, and is available as a standalone WAR file or OS-specific installation package. It supports installations using native package managers like apt, yum, dnf, or zypper, and can also be run directly as a service or in a Docker container. Regardless of the deployment method, the center becomes accessible through a web interface, typically at port 8888, immediately after setup.

Key features include:

- Centralized controller management: Consolidates controller configuration, monitoring, and access policies.

- Flexible deployment: Can be installed on traditional systems or run in Docker containers, allowing for environment-specific scalability.

- Multi-tenant isolation: Enables workload segmentation across teams or projects, improving security and reducing operational risk.

- Elastic infrastructure support: Pairs well with cloud and virtual environments to scale Jenkins horizontally as job loads increase.

- Update coordination: Integrates with CloudBees CI’s long-term support lifecycle to simplify controller upgrades and improve stability.

Best practices for horizontal scalability in Jenkins

Organizations should consider these practices to enable horizontal scalability in Jenkins.

1. Segment controllers for manageability

Segmenting Jenkins controllers improves system stability and operational efficiency as the number of teams, projects, or environments grows. Assigning separate controllers to distinct business units or CI/CD pipelines allows teams to configure and manage their jobs independently, reducing cross-team interference. This isolation simplifies troubleshooting by localizing failures and improves security by enforcing access controls per controller.

It also supports customization: different teams can install plugins, configure unique pipeline templates, or apply environment-specific JVM settings without affecting others. Organizations with multi-tenant CI/CD environments benefit from this approach by aligning infrastructure with team-specific workflows while maintaining centralized monitoring and governance through tools like CloudBees Operations Center.

Regularly review controller usage metrics such as job queue lengths, executor load, and memory consumption to identify when a new controller should be provisioned. This preemptive scaling ensures consistent performance and avoids degradation during high-demand periods.
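One way to turn this review into a repeatable check is to average each metric over a recent window and compare it with a threshold. The sketch below assumes the samples have already been collected by a monitoring tool. The metric names and threshold values are hypothetical and should be tuned to your own baseline.

```python
from statistics import mean

# Hypothetical thresholds; not recommendations from Jenkins.
DEFAULT_THRESHOLDS = {
    "queue_length": 20,       # builds waiting in the queue
    "executor_load": 0.85,    # busy executors / total executors
    "heap_used_ratio": 0.80,  # used heap / max heap on the controller
}


def metrics_over_threshold(samples, thresholds=None):
    """Return names of metrics whose average over the window exceeds its limit."""
    limits = DEFAULT_THRESHOLDS if thresholds is None else thresholds
    if not samples:
        return []
    return [name for name, limit in limits.items()
            if mean(sample[name] for sample in samples) > limit]


def needs_new_controller(samples, thresholds=None):
    """True when at least one averaged metric is over its threshold."""
    return bool(metrics_over_threshold(samples, thresholds))


window = [
    {"queue_length": 25, "executor_load": 0.9, "heap_used_ratio": 0.5},
    {"queue_length": 35, "executor_load": 0.7, "heap_used_ratio": 0.6},
]
print(metrics_over_threshold(window))
```

Averaging over a window rather than reacting to a single sample avoids provisioning a controller because of one short spike.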

2. Optimize pipeline configurations

Efficient pipeline design is crucial in horizontally scaled Jenkins environments. Long-running, monolithic pipelines are harder to parallelize and consume more agent time, leading to job queuing and inefficient resource usage. Instead, break pipelines into smaller, modular jobs that can execute independently or concurrently.

Aim to reduce job durations to under 10 minutes, which has been shown to improve build throughput by up to 30%. This allows Jenkins to run more builds per hour with the same hardware footprint. Use declarative pipeline syntax for clarity and consistency, and adopt shared libraries to centralize common logic. Teams using shared libraries report a 40% reduction in job configuration time.
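To see why smaller, independent jobs help, the sketch below estimates wall-clock time when a set of stages is spread over several executors. It is a simplified model that ignores dependencies between stages and agent startup time, and the stage durations are made up for the example.

```python
import heapq


def wall_clock_minutes(stage_minutes, executors):
    """Estimate total duration when independent stages share some executors.

    Uses a greedy longest-first assignment: each stage goes to the executor
    that becomes free earliest.
    """
    if executors < 1:
        raise ValueError("at least one executor is required")
    finish_times = [0.0] * executors
    heapq.heapify(finish_times)
    for duration in sorted(stage_minutes, reverse=True):
        earliest_free = heapq.heappop(finish_times)
        heapq.heappush(finish_times, earliest_free + duration)
    return max(finish_times)


stages = [12, 8, 6, 4]  # hypothetical stage durations in minutes
print(wall_clock_minutes(stages, executors=1))  # monolithic, sequential run
print(wall_clock_minutes(stages, executors=3))  # same work, three executors
```

In this model the sequential run takes the sum of all stages, while the parallel run is bounded by the longest single stage, which is why breaking up the longest stage usually matters most.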

3. Implement active-active configuration

An active-active configuration involves running multiple Jenkins controllers in parallel, each capable of handling production workloads. Unlike an active-passive setup—where one controller acts as a backup—active-active architectures improve fault tolerance and maximize resource use by distributing jobs across all available instances.

This configuration is particularly effective for large organizations with distributed teams or geographically separated data centers. Workload distribution can be based on job type, team ownership, or proximity to reduce latency and balance CPU and memory usage. Jobs are assigned to controllers by where they are defined, and within each controller, labels and node affinity route builds to the most appropriate agent.

To implement this setup effectively, integrate monitoring and alerting tools such as Prometheus and Grafana to track controller and agent health, queue times, and performance bottlenecks. These metrics help detect underuse or overloading, enabling timely adjustments. Load balancing tools and DNS routing can further improve availability across multiple controllers.
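The routing idea can be sketched as a small function that prefers the controller owning a team's jobs and falls back to the least-loaded healthy controller. This is an illustration only: the controller names, the ownership table, and the health data are hypothetical, and a fallback like this only works if the job definitions also exist on the fallback controller.

```python
def route_job(team, controllers, ownership):
    """Pick a controller name for a team's job.

    controllers: dict of name -> {"healthy": bool, "queue_length": int}
    ownership:   dict of team -> preferred controller name
    """
    preferred = ownership.get(team)
    if preferred in controllers and controllers[preferred]["healthy"]:
        return preferred
    healthy = {name: state for name, state in controllers.items() if state["healthy"]}
    if not healthy:
        raise RuntimeError("no healthy controller available")
    # Shortest queue wins; the name breaks ties so the result is deterministic.
    return min(healthy, key=lambda name: (healthy[name]["queue_length"], name))


controllers = {
    "ctrl-a": {"healthy": False, "queue_length": 0},
    "ctrl-b": {"healthy": True, "queue_length": 5},
    "ctrl-c": {"healthy": True, "queue_length": 2},
}
ownership = {"payments": "ctrl-a", "web": "ctrl-b"}
print(route_job("payments", controllers, ownership))
```

The health and queue values would come from the monitoring stack, for example from the same metrics that feed the dashboards.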

4. Deploy automated backup and recovery

As Jenkins environments scale horizontally, the risk of data loss and system misconfiguration increases. Implementing automated backup and recovery procedures is essential to protect job configurations, plugins, credentials, and environment variables across all controllers.

Schedule regular backups using built-in Jenkins tools or external scripts that store configuration files in secure cloud storage services. Include not just job definitions but also workspace data, plugin versions, and system settings to allow for full restoration in the event of a failure. Consider using versioned backups for rollback capability during plugin upgrades or configuration changes.
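As an example of an external backup script, the sketch below archives a subset of the Jenkins home directory into a timestamped tarball. It covers only top-level XML configuration, top-level job definitions, plugin files, and secrets. Workspace data and jobs nested in folders are deliberately left out to keep the example short, and the function name and archive naming scheme are assumptions. Uploading the archive to cloud storage is not shown.

```python
import tarfile
from datetime import datetime, timezone
from pathlib import Path

# Glob patterns relative to the Jenkins home directory (a partial selection).
INCLUDE_PATTERNS = ("*.xml", "jobs/*/config.xml", "plugins/*.jpi", "secrets/**/*")


def backup_jenkins_home(jenkins_home: Path, dest_dir: Path) -> Path:
    """Write a timestamped .tar.gz of selected files and return its path."""
    stamp = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%SZ")
    dest_dir.mkdir(parents=True, exist_ok=True)
    archive = dest_dir / f"jenkins-backup-{stamp}.tar.gz"
    with tarfile.open(archive, "w:gz") as tar:
        for pattern in INCLUDE_PATTERNS:
            for path in sorted(jenkins_home.glob(pattern)):
                if path.is_file():
                    # Store paths relative to the home directory for easy restore.
                    tar.add(path, arcname=path.relative_to(jenkins_home).as_posix())
    return archive
```

A scheduler such as cron could run `backup_jenkins_home(Path("/var/lib/jenkins"), Path("/backups"))` on each controller, where both paths are placeholders for your own locations. The timestamp in the file name gives simple versioning for rollbacks.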

Automate failover processes using configuration management tools like Ansible, which can redeploy agents or even entire controllers to alternate hosts when failures are detected. Integrate monitoring tools to trigger these actions automatically based on system thresholds—such as CPU spikes, memory exhaustion, or build queue saturation.

5. Maintain clean and efficient environments

Keeping Jenkins environments clean and optimized is fundamental to effective horizontal scaling. As the number of agents, plugins, and jobs increases, resource contention and performance bottlenecks can emerge. Proactively manage this by implementing strategies that minimize waste and simplify operations.

Use caching systems like Artifactory, Nexus, or Jenkins’ built-in mechanisms to avoid repeated downloads of build dependencies. Studies show that this can reduce build times by 50% or more. Build containers with only the necessary dependencies to reduce image sizes and speed up provisioning. For containerized environments, enforce resource limits on memory and CPU to prevent jobs from affecting each other.

Audit the Jenkins setup regularly to remove unused jobs, obsolete plugins, and inactive agents. Excess plugins not only slow down startup and execution times but also increase security risks. Keeping the plugin set lean improves performance and reduces maintenance overhead.
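Part of such an audit can be automated. The sketch below flags jobs that have never been built or whose last build is older than a cutoff. It assumes job data shaped like the response of the Jenkins JSON API when queried with `tree=jobs[name,lastBuild[timestamp]]`, where `timestamp` is in milliseconds since the epoch. Fetching the data is not shown, and the 90-day default is an arbitrary example value.

```python
from datetime import datetime, timedelta, timezone


def stale_jobs(jobs, now, max_age_days=90):
    """Return sorted names of jobs with no build newer than the cutoff."""
    cutoff_ms = (now - timedelta(days=max_age_days)).timestamp() * 1000
    stale = []
    for job in jobs:
        last_build = job.get("lastBuild")
        # Jobs that were never built have no lastBuild entry at all.
        if last_build is None or last_build["timestamp"] < cutoff_ms:
            stale.append(job["name"])
    return sorted(stale)


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
jobs = [
    {"name": "nightly", "lastBuild": {"timestamp": datetime(2024, 5, 31, tzinfo=timezone.utc).timestamp() * 1000}},
    {"name": "legacy-report", "lastBuild": {"timestamp": datetime(2023, 1, 1, tzinfo=timezone.utc).timestamp() * 1000}},
    {"name": "never-run", "lastBuild": None},
]
print(stale_jobs(jobs, now))
```

The output is a candidate list for human review rather than a deletion list, since some rarely run jobs are still needed.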

Use monitoring tools like Grafana or the Jenkins Metrics plugin to visualize CPU usage, executor load, and queue wait times. These insights allow teams to tune resource allocation, rebalance workloads, or identify underperforming nodes, leading to a more responsive and cost-effective Jenkins environment.

Automating large-scale deployments with Octopus

Octopus is a best-of-breed Continuous Delivery platform that handles complex software delivery at any scale. Globally, over 4,000 organizations rely on Octopus to efficiently orchestrate software delivery across multi-cloud, Kubernetes, data centers, and hybrid environments, whether containerized modern apps or heritage applications.

Octopus gives you:

- Effortless scaling: Deploy consistently, quickly, and without duplicating effort to thousands of locations or customers. Tenants let you use one deployment process for thousands of customers. See all your deployments at a glance on a single dashboard.

- Faster deployments: Deploy faster and more frequently to thousands of application hosts. Use one deployment process consistently across environments. Reduce time between build and deployment by automatically promoting releases. Use the deployment strategy that works for you, like rolling, blue/green, or canary.

- Reduced risk: Reduce deployment failure rate and mean time to recovery with automation built-in. Step timeouts and retries reduce manual intervention, while guided failure mode lets you keep deployments moving when needed. Our Insights feature reveals your DevOps performance and areas that need attention based on the 4 key DORA metrics.

- Better DevEx: Simple deployments empower teams and let developers focus on building new features. Create processes easily with our friendly UI and over 500 step templates, or use command lines and scripts. Runbooks automate routine and emergency operations tasks, freeing your teams for more crucial work.

- Increased security: Built-in, flexible features to manage your security and compliance requirements. Use role-based access control (RBAC) to limit what users can do in Octopus. Configure ITSM approvals and OpenID Connect with popular providers for secure, compliant deployments.
