Getting Started - Worker and Worker Pools

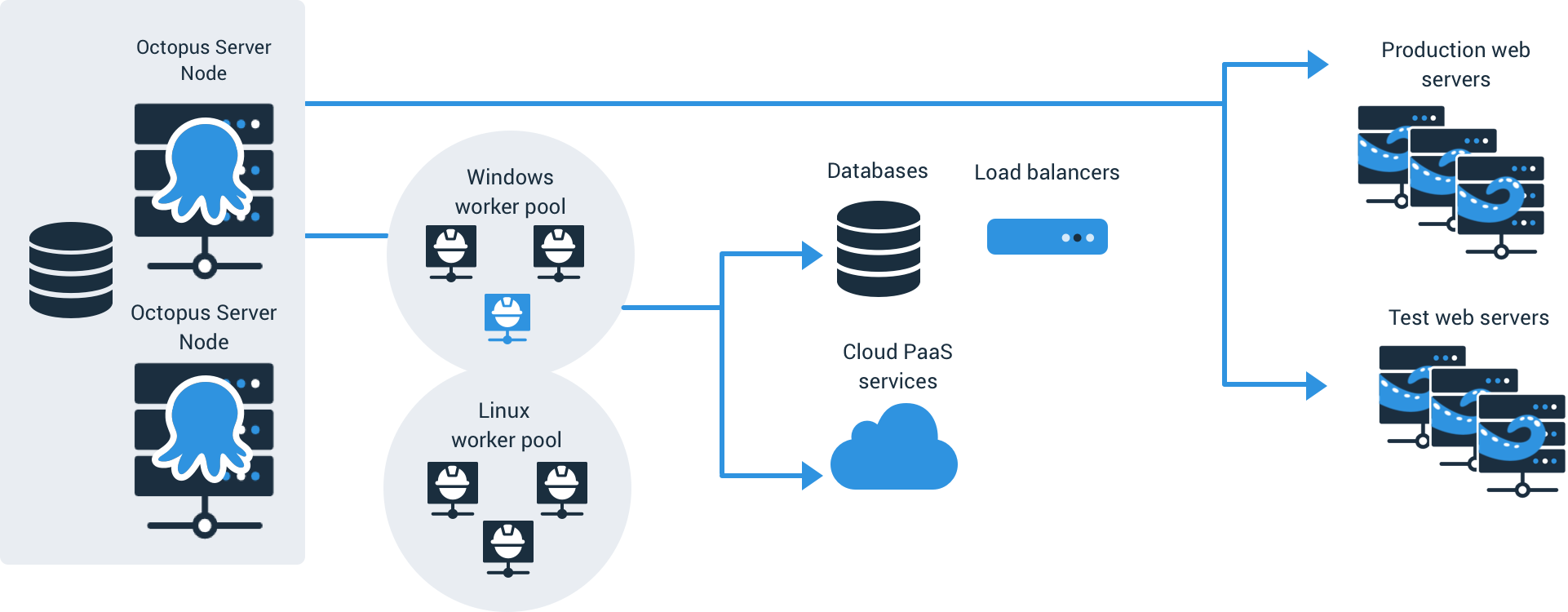

Workers are machines that can execute tasks that don’t need to be run on the Octopus Server or individual deployment targets.

You can manage your workers by navigating to Infrastructure ➜ Worker Pools in the Octopus Web Portal:

Workers are useful for the following scenarios:

- Publishing to Azure websites.

- Deploying AWS CloudFormation templates.

- Deploying to AWS Elastic Beanstalk.

- Uploading files to Amazon S3.

- Backing up databases.

- Performing database schema migrations.

- Configuring load balancers.

Where steps run

The following step types and configurations run on a worker:

- Any step that runs a script (usually user supplied) or has a package that has an execution location of Octopus Server, Octopus Server on behalf of target tags, Worker Pool, or Worker Pool on behalf of target tags.

- Any steps that run on a Cloud Region, an Azure Target, or any target that isn’t a Tentacle, an SSH Target, or an Offline Drop.

- All AWS, Terraform, and Azure steps.

The following steps always run inside the Octopus Server process (and do not run user-supplied code):

- Manual intervention

- Import certificate
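The rules above can be sketched as a small decision function. This only restates the lists on this page and is not how Octopus implements routing: the `Step` class, its field names, and the returned labels are hypothetical, and falling through to "deployment target" is an assumption for steps that match none of the listed rules.

```python
from dataclasses import dataclass
from typing import Optional

# Execution locations that this page says run on a worker.
WORKER_LOCATIONS = {
    "Octopus Server",
    "Octopus Server on behalf of target tags",
    "Worker Pool",
    "Worker Pool on behalf of target tags",
}
# Target kinds that execute steps themselves rather than via a worker.
SELF_EXECUTING_TARGETS = {"Tentacle", "SSH Target", "Offline Drop"}
# Steps that always run inside the Octopus Server process.
SERVER_PROCESS_STEPS = {"Manual intervention", "Import certificate"}
# Step families that always run on a worker.
WORKER_STEP_FAMILIES = {"AWS", "Terraform", "Azure"}


@dataclass
class Step:
    """Hypothetical description of a step, for illustration only."""
    kind: str
    execution_location: Optional[str] = None
    target_kind: Optional[str] = None


def where_it_runs(step: Step) -> str:
    if step.kind in SERVER_PROCESS_STEPS:
        return "server process"
    if step.kind in WORKER_STEP_FAMILIES:
        return "worker"
    if step.execution_location in WORKER_LOCATIONS:
        return "worker"
    if step.target_kind is not None and step.target_kind not in SELF_EXECUTING_TARGETS:
        return "worker"
    # Assumption: anything else runs on the deployment target itself.
    return "deployment target"
```

For example, `where_it_runs(Step("Package", target_kind="Cloud Region"))` follows the second rule in the list and is routed to a worker.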

When a worker receives an instruction from the Octopus Server to execute a step, it executes the step using Calamari and returns the logs and any collected artifacts to the Octopus Server.

Workers are assigned at the start of a deployment or runbook, not at the time the individual step executes.

There are three kinds of workers you can use in Octopus:

- The built-in worker - available on self-hosted Octopus

- Dynamic workers - available on Octopus Cloud

- External workers - manually configured

Octopus Cloud uses dynamic workers by default, which provide an on-demand worker running on an Ubuntu or Windows VM. Dynamic workers are managed by Octopus Cloud, and are included with your Octopus Cloud subscription.

Ignoring Workers

Octopus works out-of-the-box without setting up workers. You can run all deployment processes, run script steps on the built-in worker, deploy to Azure, and run AWS and Terraform steps without further setup. The built-in worker is available in a default Octopus setup, and Octopus workers are designed so that, if you aren’t using external workers, none of your deployment processes need to be worker aware.

The choices of built-in worker, built-in worker running in a separate account, and external workers enable you to harden your Octopus Server and scale your deployments.

Migrating to Workers

Octopus workers provide a way to move work off the built-in worker. This lets you move the work away from the Octopus Server and onto external workers without the need to update the deployment process. Learn how to use the default worker pool to move steps off the Octopus Server.

Built-in Worker

The Octopus Server has a built-in worker that can deploy packages, execute scripts, and perform tasks that don’t need to be performed on a deployment target. The built-in worker is configured by default. However, it can be disabled by navigating to Configuration and selecting Disable for the Run steps on the Octopus Server option.

The built-in worker is executed on the same machine as the Octopus Server. When the built-in worker is needed to execute a step, the Octopus Server spawns a new process and runs the step using Calamari. The spawned process is either under the server’s security context (default) or under a context configured for the built-in worker.

Adding a worker to the default worker pool will disable the built-in worker, and steps will no longer run on the Octopus Server.
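As a rough mental model of the behavior described above, the following sketch picks where a step that targets the default worker pool would run. The function name and returned labels are hypothetical, and raising an error when nothing is available is an assumption made for the sketch rather than documented Octopus behavior.

```python
def default_pool_executor(default_pool_worker_count: int, built_in_enabled: bool = True) -> str:
    """Return which kind of worker would pick up a default-pool step."""
    # Any worker in the default pool takes over from the built-in worker.
    if default_pool_worker_count > 0:
        return "external worker"
    # With an empty default pool, the built-in worker is used if it is enabled.
    if built_in_enabled:
        return "built-in worker"
    # Assumption: with no workers and the built-in worker disabled, nothing can run the step.
    raise RuntimeError("no worker available for the default worker pool")
```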

Learn about the security implications and how to configure the built-in worker.

The built-in worker is only available on self-hosted Octopus instances. Octopus Cloud customers have access to dynamic worker pools, which provide a pre-configured worker on demand.

Dynamic Workers

Dynamic workers are on-demand workers managed by Octopus Cloud, which means you don’t need to configure or maintain additional infrastructure. Dynamic workers provide an Ubuntu or Windows VM running as a pre-configured Tentacle worker.

Dynamic worker pools are included with all Octopus Cloud instances, and are the default option when creating new worker steps in your deployments and runbooks.

Learn more about configuring and using dynamic worker pools and selecting an OS image for your worker tasks.

External Workers

An External Worker is one of the following:

- A Windows or Linux Tentacle.

- An SSH machine that has been registered with the Octopus Server as a worker.

- A Kubernetes Worker that has been installed in a Kubernetes cluster, and has self-registered with the Octopus Server.

The setup of a worker is the same as setting up a deployment target as a Windows Tentacle target or an SSH target, except that instead of being added to an environment, a worker is added to a worker pool.

Using external workers allows delegating work to a machine other than the Octopus Server. This can make the server more secure and allow scaling. When Octopus executes a step on an external worker, it’s the external worker that executes Calamari; no user-provided script executes on the Octopus Server itself.

Workers have machine policies, are health checked, and run Calamari, just like deployment targets.

Octopus Cloud customers can choose to use the included dynamic worker pools (enabled by default), and/or register their own external workers.

Registering an External Worker

Once the Tentacle or SSH machine has been configured, workers can be added using the Web Portal, the Octopus Deploy REST API, the Octopus.Clients library or with the Tentacle executable. Only a user with the ConfigureServer permission can add or edit workers.

Registering Workers in the Octopus Web Portal

You can register workers from the Octopus Web Portal if they are a Windows or Linux Listening Tentacle or an SSH deployment target.

You can choose between:

- Registering a Windows Listening Tentacle as a Worker.

- Registering a Linux Listening Tentacle as a Worker.

- Registering an SSH deployment target as a Worker.

- Installing a Kubernetes Worker.

After you have saved the new worker, you can navigate to the worker pool you assigned the worker to, to view its status.

Registering a Windows Listening Tentacle as a Worker

Before you can configure your Windows servers as Tentacles, you need to install Tentacle Manager on the machines that you plan to use as Tentacles.

Tentacle Manager is the Windows application that configures your Tentacle. Once installed, you can access it from your start menu/start screen. Tentacle Manager can configure Tentacles to use a proxy, delete the Tentacle, and show diagnostic information about the Tentacle.

- Start the Tentacle installer, accept the license agreement, and follow the prompts.

- When the Octopus Deploy Tentacle Setup Wizard has completed, click Finish to exit the wizard.

- When the Tentacle Manager launches, click GET STARTED.

- On the communication style screen, select Listening Tentacle and click Next.

- In the Octopus Web Portal, navigate to the Infrastructure tab, select Workers and click ADD WORKER ➜ WINDOWS, and select Listening Tentacle.

- Copy the Thumbprint (the long alphanumerical string).

- Back on the Tentacle server, accept the default listening port 10933 and paste the Thumbprint into the Octopus Thumbprint field and click Next.

- Click INSTALL, and after the installation has finished click Finish.

- Back in the Octopus Web Portal, enter the hostname or IP address of the machine the Tentacle is installed on, e.g., example.com or 10.0.1.23, and click NEXT.

- Add a display name for the Worker (the server where you just installed the Listening Tentacle).

- Select which worker pools the Worker will be assigned to and click SAVE.
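Because the Octopus Server connects to a Listening Tentacle on the port chosen during setup (10933 by default), it can help to confirm that the port is reachable before saving the worker. The following is a minimal sketch of such a check, meant to be run from the machine hosting the Octopus Server; the function name is hypothetical, and a successful TCP connection only shows that the port is open, not that the Tentacle trusts the server.

```python
import socket


def port_is_reachable(host: str, port: int = 10933, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        # create_connection resolves the host name and attempts a TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False
```

For instance, `port_is_reachable("10.0.1.23")` checks the default Listening Tentacle port on the example address used in the steps above.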

Registering a Linux Listening Tentacle as a Worker

The Tentacle agent will need to be installed on the target server to communicate with the Octopus Server. Please read the instructions for installing a Linux Tentacle for more details.

- In the Octopus Web Portal, navigate to the Infrastructure tab, select Workers and click ADD WORKER ➜ LINUX, and select Listening Tentacle.

- Make a note of the Thumbprint (the long alphanumerical string).

- On the Linux Tentacle Server, run /opt/octopus/tentacle/configure-tentacle.sh in a terminal window to configure the Tentacle.

- Give the Tentacle instance a name (default Tentacle) and press Enter.

- Choose 1) Listening for the kind of Tentacle to configure, and press Enter.

- Configure the folder to store log files and press Enter.

- Configure the folder to store applications and press Enter.

- Enter the default listening port 10933 to use and press Enter.

- Enter the Thumbprint from the Octopus Web Portal and press Enter.

- Review the configuration commands to be run that are displayed, and press Enter to install the Tentacle.

- Back in the Octopus Web Portal, enter the hostname or IP address of the machine the Tentacle is installed on, e.g., example.com or 10.0.1.23, and click NEXT.

- Add a display name for the Worker (the server where you just installed the Listening Tentacle).

- Select which worker pools the Worker will be assigned to and click SAVE.

Registering a Worker with an SSH Connection

- In the Octopus Web Portal, navigate to the Infrastructure tab, select Workers and click ADD WORKER.

- Choose either LINUX or MAC and click ADD on the SSH Connection card.

- Enter the DNS name or IP address of the deployment target, e.g., example.com or 10.0.1.23.

- Enter the port (port 22 by default) and click NEXT.

Make sure the target server is accessible by the port you specify.

The Octopus Server will attempt to perform the required protocol handshakes and obtain the remote endpoint’s public key fingerprint automatically rather than have you enter it manually. This fingerprint is stored and verified by the server on all subsequent connections.

If this discovery process is not successful, you will need to click ENTER DETAILS MANUALLY.

- Add a display name for the Worker.

- Select which worker pools the Worker will be assigned to.

- Select the account that will be used for the Octopus Server and the SSH target to communicate.

- If entering the details manually, enter the Host, Port and the host’s fingerprint.

From Octopus Server 2024.2.6856, both SHA256 and MD5 fingerprints are supported. We recommend using SHA256 fingerprints.

You can retrieve the fingerprint of the default key configured in your sshd_config file from the target server with the following command:

ssh-keygen -E sha256 -lf /etc/ssh/ssh_host_ed25519_key.pub | awk '{ print $2 }'

For Octopus Server prior to 2024.2.6856 use the following:

ssh-keygen -E md5 -lf /etc/ssh/ssh_host_ed25519_key.pub | awk '{ print $2 }' | cut -d':' -f2-

- Specify whether Mono is installed on the SSH target or not to determine which version of Calamari will be installed.

- Calamari on Mono built against the full .NET framework.

- Self-contained version of Calamari built against .NET Core.

- Click Save.
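If you want to cross-check the host fingerprint without running ssh-keygen, the same values can be derived from the contents of the public key file. The sketch below assumes the usual OpenSSH encoding (a SHA256 fingerprint is the unpadded Base64 of the SHA-256 digest of the decoded key blob, and an MD5 fingerprint is colon-separated hex); the function name is hypothetical.

```python
import base64
import hashlib


def ssh_fingerprints(public_key_line: str) -> tuple:
    """Return (sha256_fingerprint, md5_fingerprint) for one public key line.

    The line is expected in the usual '<type> <base64-blob> [comment]' form.
    """
    blob = base64.b64decode(public_key_line.split()[1])

    # SHA256 form keeps the 'SHA256:' prefix, as printed by the first command above.
    sha256_digest = hashlib.sha256(blob).digest()
    sha256_fp = "SHA256:" + base64.b64encode(sha256_digest).decode("ascii").rstrip("=")

    # MD5 form drops the 'MD5:' prefix, as the 'cut' in the second command does.
    md5_hex = hashlib.md5(blob).hexdigest()
    md5_fp = ":".join(md5_hex[i:i + 2] for i in range(0, len(md5_hex), 2))

    return sha256_fp, md5_fp
```

A typical use would be to read the first line of /etc/ssh/ssh_host_ed25519_key.pub on the target server, pass it to `ssh_fingerprints`, and compare the result with what the Web Portal discovered.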

Registering a Windows Polling Tentacle as a Worker

Before you can configure your Windows servers as Tentacles, you need to install Tentacle Manager on the machines that you plan to use as Tentacles.

Tentacle Manager is the Windows application that configures your Tentacle. Once installed, you can access it from your start menu/start screen. Tentacle Manager can configure Tentacles to use a proxy, delete the Tentacle, and show diagnostic information about the Tentacle.

- Start the Tentacle installer, accept the license agreement, and follow the prompts.

- When the Octopus Deploy Tentacle Setup Wizard has completed, click Finish to exit the wizard.

- When the Tentacle Manager launches, click GET STARTED.

- On the communication style screen, select Polling Tentacle and click Next.

- If you are using a proxy see Proxy Support, or click Next.

- Add the Octopus credentials the Tentacle will use to connect to the Octopus Server:

  a. The Octopus URL: the hostname or IP address.

  b. Select the authentication mode and enter the details:

    i. The username and password you use to log into Octopus, or:

    ii. Your Octopus API key, see How to create an API key.

The Octopus credentials specified here are only used once to configure the Tentacle. All future communication is performed over a secure TLS connection using certificates.

- Click Verify credentials, and then click Next.

- On the machine type screen, select Worker and click Next.

- Choose the Space the Worker will be registered in.

- Give the machine a meaningful name, select which worker pool the Worker will be assigned to, and click Next.

- Click Install, and when the script has finished, click Finish.

The new Polling Tentacle will automatically show up in the Workers list.

Registering a Linux Polling Tentacle as a Worker

The Tentacle agent will need to be installed on the target server to communicate with the Octopus Server. Please read the instructions for installing a Linux Tentacle for more details.

- On the Linux Tentacle Server, run /opt/octopus/tentacle/configure-tentacle.sh in a terminal window to configure the Tentacle.

- Give the Tentacle instance a name (default Tentacle) and press Enter.

- Choose 2) Polling for the kind of Tentacle to configure, and press Enter.

- Configure the folder to store log files and press Enter.

- Configure the folder to store applications and press Enter.

- Enter the Octopus Server URL (e.g. https://samples.octopus.app) and press Enter.

- Enter the authentication details the Tentacle will use to connect to the Octopus Server:

  i. Select 1) if using an Octopus API key, see How to create an API key, or:

  ii. Select 2) to provide a username and password you use to log into Octopus.

- Select 2) Worker for the type of Tentacle to set up and press Enter.

- Enter the Space you wish to register the Tentacle in and press Enter.

- Provide a name for the Tentacle and press Enter.

- Add which worker pools the Worker will be assigned to (comma separated) and press Enter.

- Review the configuration commands to be run that are displayed, and press Enter to install the Tentacle.

The new Polling Tentacle will automatically show up in the Workers list.

Installing a Kubernetes Worker

You can install the Kubernetes Worker using Helm through the octopusdeploy/kubernetes-agent chart. This chart is hosted on Docker Hub and can be pulled directly via the Helm CLI.

To make things easier, Octopus provides an installation wizard that generates the Helm command for you to run.

Helm will use your current kubectl config, so make sure your kubectl config is pointing to the correct cluster before executing the following helm commands. You can see the current kubectl config by executing:

kubectl config view

- In the Octopus Web Portal, navigate to the Infrastructure tab, select Workers, and click ADD WORKER.

- Choose Kubernetes and click ADD on the Kubernetes Worker card.

- Enter a Name for the worker, select the Worker Pools to which the worker should belong, and click NEXT.

- The dialog allows inline creation of a worker pool via the + button.

- Click Show advanced to provide a custom Storage class or override the Octopus Server URL if required.

- Select the desired shell (bash or PowerShell) and copy the supplied command.

- Execute the copied command in a terminal configured with your k8s cluster, and click NEXT

- This step is not required if the NFS driver already exists in your cluster (due to prior installs of k8s worker or deployment target)

- Select the desired shell (bash or PowerShell), then copy the supplied command

- Execute the copied command in a terminal configured with your k8s cluster.

- Installing the Helm chart will take some time (potentially minutes depending on infrastructure).

- A green ‘success’ bar will appear when the Helm Chart has completed installation, and the worker has registered with the Octopus Server.

- Click the View Worker button to display the settings of the created worker, or Cancel to return to the Add Worker page

As the display name is used for the Helm release name, this name must be unique for a given cluster. This means that if you have a Kubernetes agent and Kubernetes worker with the same name (e.g. production), then they will clash during installation.

If you do want a Kubernetes agent and Kubernetes worker to have the same name, then prepend the type to the name (e.g. worker production and agent production) during installation. This will install them with unique Helm release names, avoiding the clash. After installation, the worker and target names can be changed in the Octopus Server UI to the desired name to remove the prefix.
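To spot such clashes before installing, you could compare the display names planned for one cluster. The sketch below is only an illustration: the function name and the `(kind, display_name)` tuples are hypothetical, and it compares display names verbatim because the exact way Octopus derives the Helm release name from the display name is not described on this page.

```python
from collections import Counter


def clashing_display_names(installs):
    """Return display names used more than once among installs in one cluster.

    `installs` is a list of (kind, display_name) tuples, e.g. ("agent", "production").
    """
    counts = Counter(display_name for _kind, display_name in installs)
    return sorted(name for name, count in counts.items() if count > 1)
```

With an agent and a worker both named production the function reports the clash; with the prefixed names suggested above it returns an empty list.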

Registering Workers with the Tentacle executable

Tentacle workers can also register with the server using the Tentacle executable (version 3.22.0 or later), for example:

.\Tentacle.exe register-worker --instance MyInstance --server "https://example.com/" --comms-style TentaclePassive --apikey "API-YOUR-KEY" --workerpool "Default Worker Pool"

Use TentacleActive instead of TentaclePassive to register a polling Tentacle worker.

The Tentacle executable can also be used to deregister workers, for example:

.\Tentacle.exe deregister-worker --instance MyInstance --server "https://example.com/" --apikey "API-YOUR-KEY"

For information on creating an API key, see how to create an API key.
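When registering many workers from a provisioning script, it can be convenient to build the register-worker argument list programmatically. The following is a minimal sketch that uses only the options shown in the example above; the function name, parameter names, and default executable path are hypothetical.

```python
def register_worker_args(instance, server_url, api_key, worker_pool,
                         polling=False, tentacle_exe=r".\Tentacle.exe"):
    """Build the argument list for the register-worker command shown above."""
    # TentaclePassive is a Listening Tentacle; TentacleActive is a Polling Tentacle.
    comms_style = "TentacleActive" if polling else "TentaclePassive"
    return [
        tentacle_exe, "register-worker",
        "--instance", instance,
        "--server", server_url,
        "--comms-style", comms_style,
        "--apikey", api_key,
        "--workerpool", worker_pool,
    ]
```

The returned list can be passed to `subprocess.run(args, check=True)` on the worker machine, which avoids quoting problems with pool names that contain spaces.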

Recommendations for External Workers

We highly recommend setting up external workers on a different machine to the Octopus Server.

We also recommend running external workers as a different user account to the Octopus Server.

It can be advantageous to have workers on the same local network as the server to reduce package transfer times.

Default pools attached to cloud targets allow co-location of workers and targets. This can help make workers specific to your targets, and using external workers also makes the Octopus Server more secure.

Run Multiple processes on Workers simultaneously

Many workers may be running in parallel and a single worker can run multiple actions in parallel.

The task cap determines how many tasks (deployments or system tasks) can run simultaneously. The system variable Octopus.Action.MaxParallelism controls how much parallelism is allowed in executing a deployment action. It applies the same to deployment targets as it does to workers. For example, if Octopus.Action.MaxParallelism is set to its default value of 10, any one deployment action will:

- Deploy to at most 10 deployment targets simultaneously, or

- Have no more than 10 concurrent worker invocations running.

Parallel steps in a deployment can each reach their own MaxParallelism. Coupled with multiple deployment tasks running, up to the task cap, the number of concurrent worker invocations can grow quickly.
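To get a feel for how quickly this grows, the worst case can be estimated by multiplying the three limits together. This is a back-of-the-envelope sketch rather than a description of how Octopus schedules work; the function and parameter names are hypothetical, and it assumes every running task has the same number of parallel steps, each at its MaxParallelism.

```python
def max_concurrent_worker_invocations(task_cap, parallel_steps_per_task, max_parallelism=10):
    """Upper bound on simultaneous worker invocations across all running tasks."""
    # Each task can run several steps in parallel, and each step can fan out
    # to at most max_parallelism concurrent worker invocations.
    return task_cap * parallel_steps_per_task * max_parallelism
```

For example, five concurrent tasks that each run two parallel steps at the default MaxParallelism of 10 could produce up to 100 worker invocations at once.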

External workers and the built-in worker behave the same way in this regard: a worker can run many actions simultaneously, including actions from different projects. Note that this means the execution of an action doesn’t have exclusive access to a worker, which could allow one project to access the working folder of another project.

Note that if external workers are added to the default pool, then the workload is shared across those workers: a single external worker will be asked to perform exactly the same load as the built-in worker would have been doing, two workers might get half each, etc.

Run a process on a Worker exclusively

Sometimes it’s not desirable to run multiple deployments or runbooks on a Worker in parallel. Doing so can cause issues if one or more processes try to access a shared resource, such as a file.

By default, the system variable OctopusBypassDeploymentMutex is set to True when deploying to a worker. If you want to prevent workers running tasks in parallel you can set OctopusBypassDeploymentMutex to False.

If you need finer-grained control over a shared resource, we recommend using a named mutex around the process. To learn more about how you can create a named mutex around a process using PowerShell, see this log file example.

You can see how Octopus uses this technique with the built-in IIS step in the open-source Calamari library.
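The PowerShell example referenced above uses a named mutex. As a rough analogue for a script running on a Linux worker, the sketch below guards a shared resource with an advisory file lock via `fcntl.flock`. This is a different mechanism from a Windows named mutex and is not what Calamari does; the function name and lock path are hypothetical, and the `fcntl` module is only available on POSIX systems.

```python
import fcntl
import os
from contextlib import contextmanager


@contextmanager
def exclusive_lock(lock_path, blocking=True):
    """Hold an exclusive advisory lock on lock_path for the duration of the block."""
    flags = fcntl.LOCK_EX | (0 if blocking else fcntl.LOCK_NB)
    fd = os.open(lock_path, os.O_CREAT | os.O_RDWR, 0o600)
    try:
        # With blocking=False this raises BlockingIOError if the lock is already held.
        fcntl.flock(fd, flags)
        try:
            yield
        finally:
            fcntl.flock(fd, fcntl.LOCK_UN)
    finally:
        os.close(fd)
```

A script step would wrap only the code that touches the shared file in `with exclusive_lock("/tmp/my-shared-resource.lock"):`, so other steps on the same worker wait for that section instead of being serialized entirely.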

Workers in HA setups

With Octopus High Availability, each node has a task cap and can invoke the built-in worker locally, so for a 4-node HA cluster, there are 4 built-in workers. Therefore, if you move to external workers, it’s likely you’ll need to provision workers to at least match your server nodes; otherwise, you’ll be asking each worker to do the sum of what the HA nodes were previously doing.
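The sizing argument can be made concrete with a little arithmetic. The sketch below expresses the load on each external worker in units of "what one built-in worker used to do"; the function name is hypothetical, and it assumes the work is spread evenly across workers, which real workloads will only approximate.

```python
def relative_load_per_worker(server_nodes, external_workers):
    """Load per external worker, relative to one node's former built-in worker."""
    if external_workers < 1:
        raise ValueError("at least one external worker is required")
    # Each server node previously ran its own built-in worker, so the total
    # work is proportional to the number of nodes.
    return server_nodes / external_workers
```

With a 4-node cluster, a single external worker would carry four times the load of one former built-in worker, while four external workers bring the ratio back to one.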


Page updated on Thursday, August 22, 2024